torchquad

torchquad: Numerical Integration in Arbitrary Dimensions with PyTorch - Published in JOSS (2021)

Science Score: 100.0%

This score indicates how likely this project is to be science-related based on various indicators:

- ✓ CITATION.cff file: Found CITATION.cff file

- ✓ codemeta.json file: Found codemeta.json file

- ✓ .zenodo.json file: Found .zenodo.json file

- ✓ DOI references: Found 2 DOI reference(s) in README and JOSS metadata

- ✓ Academic publication links: Links to joss.theoj.org

- ✓ Committers with academic emails: 2 of 13 committers (15.4%) from academic institutions

- ○ Institutional organization owner

- ✓ JOSS paper metadata: Published in Journal of Open Source Software


Repository

Numerical integration in arbitrary dimensions on the GPU using PyTorch / TF / JAX

Basic Info

- Host: GitHub

- Owner: esa

- License: gpl-3.0

- Language: Python

- Default Branch: main

- Homepage: https://www.esa.int/gsp/ACT/open_source/torchquad/

- Size: 10.9 MB

Statistics

- Stars: 207

- Watchers: 9

- Forks: 42

- Open Issues: 14

- Releases: 10


Metadata Files

README.md

torchquad

High-performance numerical integration on the GPU with PyTorch, JAX and Tensorflow


About The Project

The torchquad module allows utilizing GPUs for efficient numerical integration with PyTorch and other numerical Python3 modules. The software is free to use and is designed for the machine learning community and research groups focusing on topics requiring high-dimensional integration.

Built With

This project is built with the following packages:

- autoray, which means the implemented quadrature supports NumPy and can be used for machine learning with modules such as PyTorch, JAX and TensorFlow, where it is fully differentiable

- conda, which will take care of all requirements for you

If torchquad proves useful to you, please consider citing the accompanying paper.

Goals

- Supporting science: Multidimensional numerical integration is needed in many fields, such as physics (from particle physics to astrophysics), applied finance, medical statistics, and others. torchquad aims to assist research groups in such fields, as well as the general machine learning community.

- Withstanding the curse of dimensionality: The curse of dimensionality makes deterministic methods in particular, but also stochastic ones, computationally expensive when the dimensionality increases. However, many integration methods are embarrassingly parallel, which means they can strongly benefit from GPU parallelization. The curse of dimensionality still applies, but the improved scaling alleviates its computational impact.

- Delivering a convenient and functional tool: torchquad is built with autoray, which means it is fully differentiable if the user chooses, for example, PyTorch as the numerical backend. Furthermore, the library of available and upcoming methods in torchquad aims to offer high-efficiency integration for a wide range of needs.
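To make the curse of dimensionality concrete: a tensor-product grid rule with n points per dimension needs n^d integrand evaluations in d dimensions, so a fixed evaluation budget buys fewer and fewer points per axis as d grows. The sketch below only does this bookkeeping arithmetic for the dimensions used in the benchmarks further down; it is an illustration under that textbook assumption, not part of torchquad, and the helper names are hypothetical.

```python
def grid_evaluations(points_per_dim: int, dim: int) -> int:
    """Number of integrand evaluations for a tensor-product grid rule."""
    return points_per_dim ** dim


def points_per_dim_for_budget(budget: int, dim: int) -> int:
    """Largest n such that n**dim does not exceed the evaluation budget."""
    n = int(round(budget ** (1.0 / dim)))
    # Correct for floating-point rounding of the d-th root in either direction.
    while n > 1 and n ** dim > budget:
        n -= 1
    while (n + 1) ** dim <= budget:
        n += 1
    return n


if __name__ == "__main__":
    for dim in (1, 3, 7, 15):
        print(
            f"d={dim:2d}: 10 points/dim -> {grid_evaluations(10, dim):,} evaluations; "
            f"a budget of 10^6 evaluations allows {points_per_dim_for_budget(10**6, dim)} points/dim"
        )
```

With a budget of one million evaluations, a grid still has 100 points per axis in 3D but only 2 per axis in 15D, which is why parallel hardware and sampling-based methods matter in high dimensions.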

Getting Started

This is a brief guide for how to set up torchquad.

Prerequisites

We recommend using conda, especially if you want to utilize the GPU. With PyTorch, for example, conda will automatically set up CUDA and the cudatoolkit for you. Note that torchquad also works on the CPU; however, it is optimized for GPU usage. torchquad's GPU support is tested only on NVIDIA cards with CUDA. We are investigating future support for AMD cards through ROCm.

For a detailed list of required packages and packages for numerical backends, please refer to the conda environment files environment.yml and environment_all_backends.yml. torchquad has been tested with JAX 0.2.25, NumPy 1.19.5, PyTorch 1.10.0 and TensorFlow 2.7.0 on Linux; other versions of the backends should work as well, but some may require additional setup on other platforms such as Windows.

Installation

The easiest way to install torchquad is simply to run

```sh
conda install torchquad -c conda-forge
```

Alternatively, it is also possible to use

```sh
pip install torchquad
```

The PyTorch backend with CUDA support can be installed with

```sh
conda install "cudatoolkit>=11.1" "pytorch>=1.9=*cuda*" -c conda-forge -c pytorch
```

Note that since PyTorch is not yet on conda-forge for Windows, we have explicitly included it here using -c pytorch.

Note also that installing PyTorch with pip may not set it up with CUDA support. Therefore, we recommend using conda.

Here are installation instructions for other numerical backends:

```sh
conda install "tensorflow>=2.6.0=cuda*" -c conda-forge
pip install "jax[cuda]>=0.4.17" --find-links https://storage.googleapis.com/jax-releases/jax_cuda_releases.html # linux only
conda install "numpy>=1.19.5" -c conda-forge
```

More installation instructions for numerical backends can be found in environment_all_backends.yml and in the backends' documentation, for example https://pytorch.org/get-started/locally/, https://github.com/google/jax/#installation and https://www.tensorflow.org/install/gpu; often there are multiple ways to install them.
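Since each backend is installed separately, it can help to check which of them Python can actually find before running torchquad. The following is a minimal standard-library sketch, not part of torchquad: it assumes the usual import names (torch, jax, tensorflow, numpy) and only checks that each package is locatable, not that its GPU support works.

```python
import importlib.util

# Import names of the numerical backends mentioned in this README.
BACKEND_MODULES = {
    "torch": "PyTorch",
    "jax": "JAX",
    "tensorflow": "TensorFlow",
    "numpy": "NumPy",
}


def available_backends() -> dict:
    """Map each backend's import name to whether Python can locate it.

    importlib.util.find_spec only locates a top-level package without
    importing it, so the check is cheap and does not initialise CUDA.
    """
    return {name: importlib.util.find_spec(name) is not None for name in BACKEND_MODULES}


if __name__ == "__main__":
    for name, found in available_backends().items():
        status = "installed" if found else "not found"
        print(f"{BACKEND_MODULES[name]:<10} ({name}): {status}")
```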

Test

After installing torchquad and PyTorch through conda or pip, users can test torchquad's correct installation with:

```py
import torchquad

torchquad._deployment_test()
```

After cloning the repository, developers can check the functionality of torchquad by running

```sh
pip install -e .
pytest
```

Usage

This is a brief example of how torchquad can be used to compute a simple integral with PyTorch. For a more thorough introduction, please refer to the tutorial section in the documentation.

The full documentation can be found on readthedocs.

```Python3
# To avoid copying things to GPU memory,
# ideally allocate everything in torch on the GPU
# and avoid non-torch function calls
import torch
from torchquad import MonteCarlo, set_up_backend

# Enable GPU support if available and set the floating point precision
set_up_backend("torch", data_type="float32")

# The function we want to integrate, in this example
# f(x0,x1) = sin(x0) + e^x1 for x0=[0,1] and x1=[-1,1]
# Note that the function needs to support multiple evaluations at once (first
# dimension of x here)
# Expected result here is ~3.2698
def some_function(x):
    return torch.sin(x[:, 0]) + torch.exp(x[:, 1])

# Declare an integrator;
# here we use the simple, stochastic Monte Carlo integration method
mc = MonteCarlo()

# Compute the function integral by sampling 10000 points over domain
integral_value = mc.integrate(
    some_function,
    dim=2,
    N=10000,
    integration_domain=[[0, 1], [-1, 1]],
    backend="torch",
)
```
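The expected result of ~3.2698 can be checked by hand, because the integrand separates into two one-dimensional integrals over the domain [0, 1] x [-1, 1]. The sketch below computes that analytic value and, for comparison, a plain Monte Carlo estimate (domain volume times the mean of f at uniformly sampled points). It is a CPU-only, standard-library illustration of the idea, not torchquad's implementation, and the function names are hypothetical.

```python
import math
import random


def integrand(x0: float, x1: float) -> float:
    """f(x0, x1) = sin(x0) + exp(x1), the function from the example above."""
    return math.sin(x0) + math.exp(x1)


def analytic_value() -> float:
    """Exact integral over [0, 1] x [-1, 1]."""
    # integral of sin(x0) over [0, 1] is (1 - cos 1), times the x1-width 2;
    # integral of exp(x1) over [-1, 1] is (e - 1/e), times the x0-width 1.
    return 2.0 * (1.0 - math.cos(1.0)) + (math.e - 1.0 / math.e)


def monte_carlo_estimate(n: int, seed: int = 0) -> float:
    """Domain volume times the mean of f at n uniform random points."""
    rng = random.Random(seed)
    (a0, b0), (a1, b1) = (0.0, 1.0), (-1.0, 1.0)
    volume = (b0 - a0) * (b1 - a1)
    total = 0.0
    for _ in range(n):
        total += integrand(rng.uniform(a0, b0), rng.uniform(a1, b1))
    return volume * total / n


if __name__ == "__main__":
    exact = analytic_value()
    estimate = monte_carlo_estimate(10_000)
    print(f"analytic: {exact:.4f}  monte carlo (N=10000): {estimate:.4f}  "
          f"abs. error: {abs(estimate - exact):.2e}")
```

The loop here evaluates one point at a time; torchquad instead passes all N points to the integrand in a single call, which is why the integrand in the example must accept a batch of points along its first dimension.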

Logging Configuration

By default, torchquad disables its internal logging when installed from PyPI to avoid interfering with other loggers in your application. To enable logging, change TORCHQUAD_DISABLE_LOGGING in __init__.py. Logging can be configured as follows:

- Set the log level: use the TORCHQUAD_LOG_LEVEL environment variable:

```bash
export TORCHQUAD_LOG_LEVEL=DEBUG    # For detailed debugging
export TORCHQUAD_LOG_LEVEL=INFO     # For general information
export TORCHQUAD_LOG_LEVEL=WARNING  # For warnings only (default when enabled)
```

- Enable logging programmatically:

```python
import torchquad

torchquad.set_log_level("DEBUG")  # This will enable and configure logging
```

Multi-GPU Usage

torchquad supports multi-GPU systems through standard PyTorch practices. The recommended approach is to use the CUDA_VISIBLE_DEVICES environment variable to control GPU selection:

```bash
# Use a specific GPU
export CUDA_VISIBLE_DEVICES=0  # Use GPU 0
python your_script.py

export CUDA_VISIBLE_DEVICES=1  # Use GPU 1
python your_script.py

# Use multiple GPUs with separate processes
CUDA_VISIBLE_DEVICES=0 python integration_script.py &
CUDA_VISIBLE_DEVICES=1 python integration_script.py &
wait  # Block until both background processes have finished
```

For parallel processing across multiple GPUs, we recommend spawning separate processes rather than trying to coordinate multiple GPUs within a single process. This approach:

- Provides clean separation between GPU processes

- Avoids complex device management

- Follows PyTorch best practices

- Enables easy load balancing and error handling
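The same one-process-per-GPU pattern can also be driven from Python instead of the shell. The sketch below uses only the standard library: each child process receives a copy of the environment with CUDA_VISIBLE_DEVICES set to a single GPU, and its exit code is collected for simple error handling. The script name integration_script.py and the helper names are hypothetical placeholders for your own code.

```python
import os
import subprocess
import sys


def worker_env(gpu_id: int) -> dict:
    """Copy of the current environment that exposes only one GPU to a child."""
    env = os.environ.copy()
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_id)
    return env


def launch_workers(script: str, gpu_ids: list) -> list:
    """Start one Python process per GPU and return their exit codes."""
    procs = [
        subprocess.Popen([sys.executable, script], env=worker_env(gpu_id))
        for gpu_id in gpu_ids
    ]
    # Wait for every worker; a non-zero exit code marks a failed run on that GPU.
    return [proc.wait() for proc in procs]


if __name__ == "__main__":
    script = "integration_script.py"  # hypothetical: your own torchquad script
    gpu_ids = [0, 1]
    if os.path.exists(script):
        codes = launch_workers(script, gpu_ids)
        print(dict(zip(gpu_ids, codes)))
```

Inside each child, the single visible GPU appears as device 0, so the integration script itself does not need to know which physical GPU it was assigned.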

For detailed examples and advanced multi-GPU patterns, see the Multi-GPU Usage section in our documentation.

You can find all available integrators here.

Roadmap

See the open issues for a list of proposed features (and known issues).

Performance

Using GPUs, torchquad scales particularly well with integration methods that offer easy parallelization. The benchmarks below demonstrate performance across challenging functions from 1D to 15D, comparing torchquad's GPU-accelerated methods against scipy's CPU implementations.

Convergence Analysis

Convergence comparison across challenging test functions from 1D to 15D. GPU-accelerated torchquad methods perform well, particularly for high-dimensional integration, where scipy's nquad becomes computationally infeasible. Beyond 1D, torchquad is substantially more efficient than scipy in these benchmarks.

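Convergence here describes how fast the error falls as the number of evaluation points grows. As a self-contained 1D illustration (plain Python, not torchquad's vectorized implementation), composite Simpson's rule applied to sin over [0, 1] has an error that shrinks roughly like h^4 for smooth integrands, so doubling the number of subintervals cuts the error by about a factor of 16; a Monte Carlo error, by contrast, decreases only like N^(-1/2).

```python
import math


def simpson(f, a: float, b: float, n: int) -> float:
    """Composite Simpson's rule with n subintervals (n must be even)."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        # Odd interior nodes get weight 4, even interior nodes weight 2.
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


if __name__ == "__main__":
    exact = 1.0 - math.cos(1.0)  # integral of sin over [0, 1]
    for n in (4, 8, 16, 32, 64):
        err = abs(simpson(math.sin, 0.0, 1.0, n) - exact)
        print(f"n={n:3d}  error={err:.3e}")
```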

Runtime vs Error Efficiency

Runtime-error trade-offs across dimensions. Lower-left positions indicate better performance. While scipy's traditional methods are competitive for simple 1D problems, torchquad's GPU acceleration provides orders of magnitude better performance for multi-dimensional integration, achieving both faster computation and lower errors.

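Each point in such a runtime-error plot is a (runtime, error) pair for one method at one evaluation budget. A minimal way to collect such pairs yourself is sketched below, assuming a plain-Python trapezoid rule and Monte Carlo estimator as stand-ins for the benchmarked methods; this is not the benchmark code in benchmarking/, and absolute timings will depend on your machine.

```python
import math
import random
import time


def trapezoid(f, a: float, b: float, n: int) -> float:
    """Composite trapezoidal rule with n subintervals."""
    h = (b - a) / n
    return h * (0.5 * f(a) + sum(f(a + i * h) for i in range(1, n)) + 0.5 * f(b))


def monte_carlo(f, a: float, b: float, n: int, seed: int = 0) -> float:
    """Plain Monte Carlo estimate with n uniform samples."""
    rng = random.Random(seed)
    return (b - a) * sum(f(rng.uniform(a, b)) for _ in range(n)) / n


def runtime_error_pairs(method, f, a, b, exact, sizes):
    """Return (n, seconds, absolute error) for each evaluation count n."""
    results = []
    for n in sizes:
        start = time.perf_counter()
        value = method(f, a, b, n)
        elapsed = time.perf_counter() - start
        results.append((n, elapsed, abs(value - exact)))
    return results


if __name__ == "__main__":
    exact = 1.0 - math.cos(1.0)  # integral of sin over [0, 1]
    for name, method in (("trapezoid", trapezoid), ("monte carlo", monte_carlo)):
        for n, seconds, err in runtime_error_pairs(
            method, math.sin, 0.0, 1.0, exact, [10**2, 10**3, 10**4]
        ):
            print(f"{name:<11} n={n:>6}  time={seconds:.2e}s  error={err:.2e}")
```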

Scaling Performance

Scaling of the different torchquad methods across problem sizes and dimensions.


Vectorized Integration Speedup

Strong performance gains when evaluating multiple integrands simultaneously. The vectorized approach achieves speedups of up to 200x compared to sequential evaluation, making torchquad well suited for parameter sweeps, uncertainty quantification, and machine learning applications requiring batch integration.


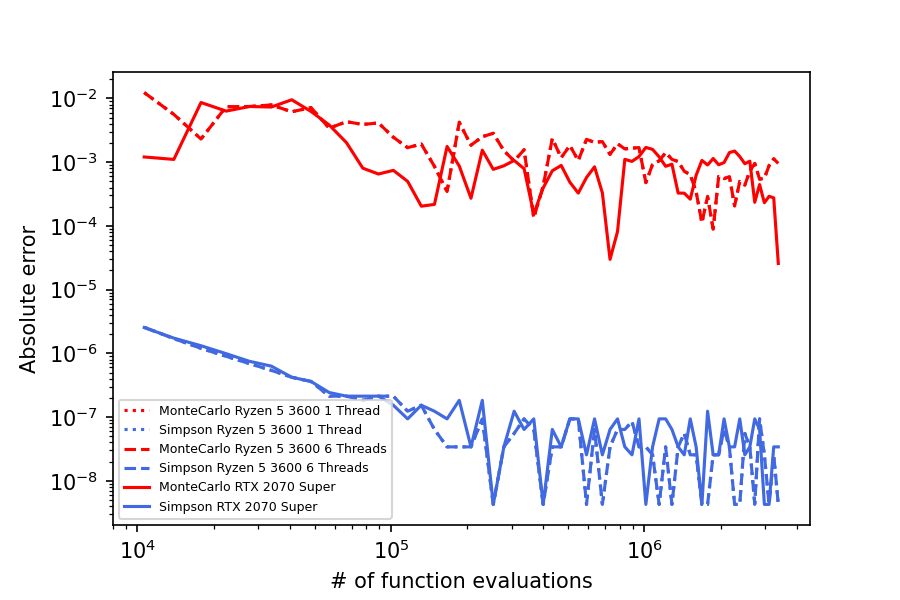
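The idea behind vectorized integration is to evaluate many integrands on the same set of nodes in one batched operation instead of looping over them. The NumPy sketch below illustrates this pattern for a hypothetical family f_p(x) = sin(p x) on [0, 1] with Simpson's rule: one broadcast builds all integrand values and one matrix-vector product applies the quadrature weights. It runs on the CPU, is not torchquad's API, and any speedup it reports depends on your hardware and will not match the GPU numbers above.

```python
import time

import numpy as np


def simpson_nodes_weights(n: int, a: float, b: float):
    """Nodes and weights of composite Simpson's rule with n (even) subintervals."""
    if n % 2:
        raise ValueError("n must be even")
    x = np.linspace(a, b, n + 1)
    w = np.ones(n + 1)
    w[1:-1:2] = 4.0  # odd interior nodes
    w[2:-1:2] = 2.0  # even interior nodes
    return x, w * (b - a) / (3.0 * n)


def integrate_family(params: np.ndarray, n: int = 100) -> np.ndarray:
    """Integrate sin(p * x) over [0, 1] for every p in params at once."""
    x, w = simpson_nodes_weights(n, 0.0, 1.0)
    values = np.sin(params[:, None] * x[None, :])  # shape (len(params), n + 1)
    return values @ w


def integrate_one_by_one(params: np.ndarray, n: int = 100) -> np.ndarray:
    """Sequential reference: one quadrature evaluation per integrand."""
    x, w = simpson_nodes_weights(n, 0.0, 1.0)
    return np.array([np.sin(p * x) @ w for p in params])


if __name__ == "__main__":
    params = np.linspace(0.1, 10.0, 5_000)
    start = time.perf_counter()
    batched = integrate_family(params)
    t_batched = time.perf_counter() - start
    start = time.perf_counter()
    looped = integrate_one_by_one(params)
    t_looped = time.perf_counter() - start
    print(f"max difference: {np.max(np.abs(batched - looped)):.1e}")
    print(f"batched: {t_batched:.4f}s  one-by-one: {t_looped:.4f}s")
```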

Framework Comparison

Cross-framework performance comparison for 1D integration using Monte Carlo and Simpson methods. Demonstrates torchquad's consistent API across PyTorch, TensorFlow, JAX, and NumPy backends, with GPU acceleration providing significant performance advantages for large numbers of function evaluations. All frameworks achieve similar accuracy while showcasing the computational benefits of GPU acceleration for parallel integration methods.


Running Benchmarks

To reproduce these benchmarks or test performance on your hardware:

```bash
# Run all benchmarks (convergence, framework comparison, scaling, vectorized)
python benchmarking/modular_benchmark.py --dimensions 1,3,7,15

# Run specific benchmark types
python benchmarking/modular_benchmark.py --convergence-only --dimensions 1,3,7,15
python benchmarking/modular_benchmark.py --scaling-only
python benchmarking/modular_benchmark.py --framework-only

# Generate all plots from results
python benchmarking/plot_results.py

# Configure benchmark parameters
# Edit benchmarking/benchmarking_cfg.toml to adjust:
# - Evaluation point ranges
# - Framework backends to test
# - Timeout limits
# - Method selection
# - scipy integration tolerances
```

New Features:

- Analytic Reference Values: Uses SymPy for exact analytic solutions where possible, providing highly accurate reference values for error calculations

- Enhanced Test Functions: Analytically tractable but numerically challenging functions that better demonstrate convergence behavior

- Framework Comparison: Cross-backend performance benchmarking across PyTorch, TensorFlow, JAX, and NumPy with GPU/CPU device comparisons

Hardware: RTX 4060 Ti 16GB, i5-13400F, Precision: float32

Contributing

The project is open to community contributions. Feel free to open an issue or write us an email if you would like to discuss a problem or idea first.

If you want to contribute, please

- Fork the project on GitHub.

- Get the most up-to-date code by following this quick guide for installing torchquad from source:

  - Get miniconda or similar

  - Clone the repo

    ```sh
    git clone https://github.com/esa/torchquad.git
    ```

  - With the default configuration, all numerical backends with CUDA support are installed. If this should not happen, comment out unwanted packages in environment_all_backends.yml.

  - Set up the environment. This creates a conda environment called torchquad and installs the required dependencies.

    ```sh
    conda env create -f environment_all_backends.yml
    conda activate torchquad
    ```

Once the installation is done, you are ready to contribute.

Please note that PRs should be created from and into the develop branch. For each release the develop branch is merged into main.

- Create your Feature Branch (git checkout -b feature/AmazingFeature)

- Commit your Changes (git commit -m 'Add some AmazingFeature')

- Push to the Branch (git push origin feature/AmazingFeature)

- Open a Pull Request on the develop branch, not main, and we will have a look at your contribution as soon as we can.

Furthermore, please make sure that your PR passes all automated tests; you can ping @gomezzz to run the CI. Review will only happen after that.

Only PRs created on the develop branch with all tests passing will be considered. The only exception to this rule is if you want to update the documentation in relation to the current release on conda / pip. In that case you open a PR directly into main.

License

Distributed under the GPL-3.0 License. See LICENSE for more information.

FAQ

- Q: Error enabling CUDA. cuda.is_available() returned False. CPU will be used.

  A: This error indicates that PyTorch could not find a CUDA-compatible GPU. Either you have no compatible GPU or the necessary CUDA requirements are missing. Using conda, you can install them with conda install cudatoolkit. For more detailed installation instructions, please refer to the PyTorch documentation.

Contact

Created by ESA's Advanced Concepts Team

- Pablo Gómez - pablo.gomez at esa.int

- Gabriele Meoni - gabriele.meoni at esa.int

- Håvard Hem Toftevaag

Project Link: https://github.com/esa/torchquad

Owner

- Name: European Space Agency

- Login: esa

- Kind: organization

- Location: Europe

- Website: http://www.esa.int

- Repositories: 67

- Profile: https://github.com/esa

The European Space Agency (ESA) is Europe’s gateway to space. Its mission is to shape the development of Europe’s space capability.

JOSS Publication

torchquad: Numerical Integration in Arbitrary Dimensions with PyTorch

Authors

Tags

n-dimensional numerical integration, GPU, automatic differentiation, PyTorch, high-performance computing, machine learning

Citation (CITATION.cff)

```yaml
cff-version: 1.2.0
message: "If you use this software, please cite it as below."
title: "torchquad: Numerical Integration in Arbitrary Dimensions with PyTorch, TensorFlow & JAX"
authors:
  - given-names: Pablo
    family-names: Gómez
    email: pablo.gomez@esa.int
    affiliation: >-
      Advanced Concepts Team, European Space Agency,
      Noordwijk, The Netherlands
    orcid: "https://orcid.org/0000-0002-5631-8240"
  - given-names: Håvard
    family-names: Hem Toftevaag
    affiliation: >-
      Advanced Concepts Team, European Space Agency,
      Noordwijk, The Netherlands
    orcid: "http://orcid.org/0000-0003-4692-5722"
  - given-names: Gabriele
    family-names: Meoni
    affiliation: >-
      Advanced Concepts Team, European Space Agency,
      Noordwijk, The Netherlands
    orcid: "http://orcid.org/0000-0001-9311-6392"
repository-code: "https://github.com/esa/torchquad/"
url: "https://www.esa.int/gsp/ACT/open_source/torchquad/"
repository-artifact: "https://anaconda.org/conda-forge/torchquad"
license: GPL-3.0
preferred-citation:
  type: article
  authors:
    - given-names: Pablo
      family-names: Gómez
      email: pablo.gomez@esa.int
      affiliation: >-
        Advanced Concepts Team, European Space Agency,
        Noordwijk, The Netherlands
      orcid: "https://orcid.org/0000-0002-5631-8240"
    - given-names: Håvard
      family-names: Hem Toftevaag
      affiliation: >-
        Advanced Concepts Team, European Space Agency,
        Noordwijk, The Netherlands
      orcid: "http://orcid.org/0000-0003-4692-5722"
    - given-names: Gabriele
      family-names: Meoni
      affiliation: >-
        Advanced Concepts Team, European Space Agency,
        Noordwijk, The Netherlands
      orcid: "http://orcid.org/0000-0001-9311-6392"
  doi: "10.21105/joss.03439"
  journal: "Journal of Open Source Software"
  title: "torchquad: Numerical Integration in Arbitrary Dimensions with PyTorch"
  volume: 6
  issue: 64
  year: 2021
```

GitHub Events

Total

- Create event: 11

- Release event: 2

- Issues event: 10

- Watch event: 27

- Delete event: 6

- Issue comment event: 26

- Push event: 35

- Pull request review event: 2

- Pull request event: 30

- Fork event: 4

Last Year

- Create event: 11

- Release event: 2

- Issues event: 10

- Watch event: 27

- Delete event: 6

- Issue comment event: 26

- Push event: 35

- Pull request review event: 2

- Pull request event: 30

- Fork event: 4

Committers

Last synced: 7 months ago

Top Committers

| Name | Email | Commits |
|---|---|---|
| Pablo Gómez | c****t@p****t | 274 |
| Håvard Hem Toftevaag | h****g@o****m | 159 |
| FHof | g****v@m****e | 105 |
| ilan-gold | i****d@g****m | 45 |
| GabrieleMeoni | g****i@e****t | 44 |
| Gabriele Meoni | 7****i | 10 |
| Rui-Zhi Li (李睿智) | 6****e | 2 |
| Albern S | 6****a | 1 |
| Daniel Kelshaw | d****w@g****m | 1 |
| bubel | m****l@i****e | 1 |
| elastufka | e****a@g****m | 1 |
| javier-garcia-tilburg | 1****g | 1 |
| GabrieleMeoni | g****i@k****n | 1 |


Issues and Pull Requests

Last synced: 6 months ago

All Time

- Total issues: 47

- Total pull requests: 105

- Average time to close issues: 5 months

- Average time to close pull requests: 19 days

- Total issue authors: 23

- Total pull request authors: 17

- Average comments per issue: 3.3

- Average comments per pull request: 1.7

- Merged pull requests: 82

- Bot issues: 0

- Bot pull requests: 0

Past Year

- Issues: 7

- Pull requests: 19

- Average time to close issues: about 2 months

- Average time to close pull requests: 18 days

- Issue authors: 4

- Pull request authors: 4

- Average comments per issue: 1.14

- Average comments per pull request: 0.89

- Merged pull requests: 12

- Bot issues: 0

- Bot pull requests: 0

Top Authors

Issue Authors

- gomezzz (19)

- FHof (3)

- htoftevaag (2)

- javier-garcia-tilburg (2)

- Yuer-1218 (2)

- dsmic (1)

- mawright (1)

- akshay-ghalsasi (1)

- julian-urban (1)

- mahmoud-atwi (1)

- punyidea (1)

- nbereux (1)

- Karbo123 (1)

- jk4011 (1)

- omashkova (1)

Pull Request Authors

- gomezzz (55)

- htoftevaag (17)

- abnsy (9)

- ilan-gold (5)

- GabrieleMeoni (5)

- FHof (4)

- javier-garcia-tilburg (4)

- Astro-Lee (2)

- arkinn47 (2)

- alberthli (2)

- aurelio-amerio (2)

- elastufka (1)

- corepattern (1)

- wirrell (1)

- MrGweep (1)


Packages

- Total packages: 2

- Total downloads:

  - pypi 568 last-month

- Total dependent packages: 0 (may contain duplicates)

- Total dependent repositories: 4 (may contain duplicates)

- Total versions: 11

- Total maintainers: 1

pypi.org: torchquad

Package providing numerical integration methods for PyTorch, jax, TensorFlow and numpy.

- Homepage: https://github.com/esa/torchquad

- Documentation: https://torchquad.readthedocs.io/

- License: GPL-3.0

- Latest release: 0.5.0 (published 7 months ago)

Maintainers (1)

conda-forge.org: torchquad

This project allows utilizing GPUs for efficient numerical integration with PyTorch. It is designed for the machine learning community and research groups focusing on topics requiring high-dimensional integration. By using PyTorch, backpropagation through the integrals is possible.

- Homepage: https://github.com/esa/torchquad/

- License: GPL-3.0-only

- Latest release: 0.3.0 (published almost 4 years ago)

Dependencies

- autoray >=0.2.5

- loguru >=0.5.3

- matplotlib >=3.3.3

- scipy >=1.6.0

- tqdm >=4.56.0

- autoray >=0.2.5

- loguru >=0.5.3

- matplotlib >=3.3.3

- scipy >=1.6.0

- tqdm >=4.56.1

- actions/checkout v1 composite

- actions/setup-python v1 composite

- actions/checkout v2 composite

- actions/setup-python v2 composite

- actions/checkout v2 composite

- actions/setup-python v2 composite

- actions/checkout v2 composite

- actions/upload-artifact v1 composite

- openjournals/openjournals-draft-action master composite

- actions/checkout v2 composite

- actions/setup-python v2 composite

- mamba-org/provision-with-micromamba main composite