sc2-benchmark

[TMLR] "SC2 Benchmark: Supervised Compression for Split Computing"

Science Score: 64.0%

This score indicates how likely this project is to be science-related based on various indicators:

- ✓ CITATION.cff file: found

- ✓ codemeta.json file: found

- ✓ .zenodo.json file: found

- ○ DOI references

- ✓ Academic publication links: arxiv.org

- ✓ Committers with academic emails: 1 of 2 committers (50.0%) from academic institutions

- ○ Institutional organization owner

- ○ JOSS paper metadata

- ○ Scientific vocabulary similarity: low similarity (10.6%) to scientific vocabulary


Repository


Basic Info

- Host: GitHub

- Owner: yoshitomo-matsubara

- License: mit

- Language: Python

- Default Branch: main

- Homepage: https://yoshitomo-matsubara.net/sc2-benchmark/

- Size: 6.48 MB

Statistics

- Stars: 30

- Watchers: 1

- Forks: 12

- Open Issues: 0

- Releases: 5


Metadata Files

README.md

SC2 Benchmark: Supervised Compression for Split Computing

This is the official repository of the sc2bench package and our TMLR paper, "SC2 Benchmark: Supervised Compression for Split Computing".

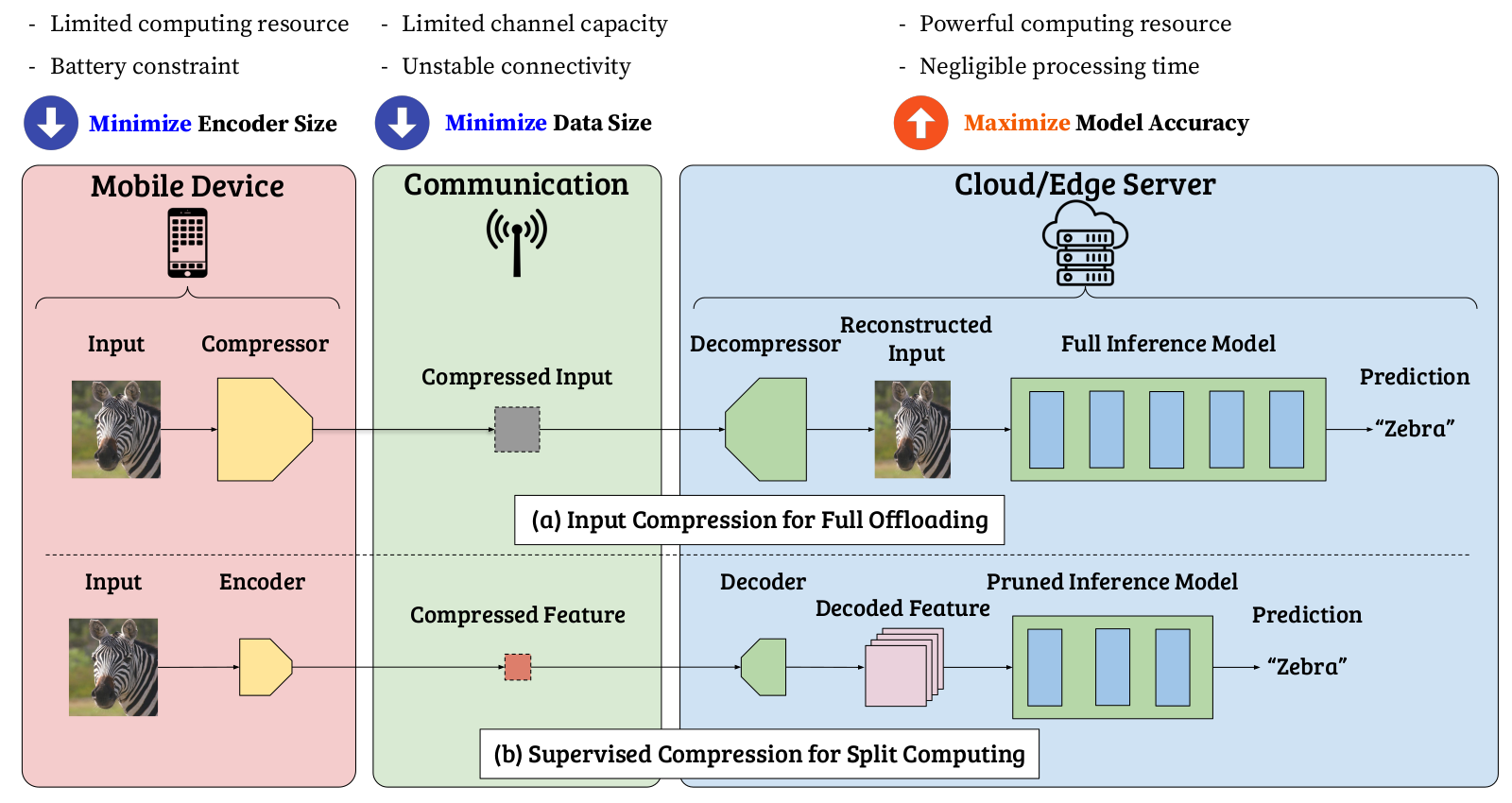

As an intermediate option between local computing and edge computing (full offloading), split computing has been attracting considerable attention from the research communities.

In split computing, we split a neural network model into two sequences so that some elementary feature transformations are applied by the first sequence of the model on a weak mobile (local) device. Then, intermediate, informative features are transmitted through a wireless communication channel to a powerful edge server that processes the bulk part of the computation (the second sequence of the model).
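The split described above can be sketched with a toy sequential model. This is only an illustration under stated assumptions: the layer functions, `split_model`, `serialize`, and `deserialize` are hypothetical names, not part of sc2bench, and the float32 packing stands in for whatever is actually sent over the wireless channel.

```python
import struct

# Hypothetical toy "layers": each maps a list of floats to a list of floats.
def scale(x):
    return [2.0 * v for v in x]

def shift(x):
    return [v + 1.0 for v in x]

def relu(x):
    return [max(0.0, v) for v in x]

def total(x):
    return [sum(x)]

LAYERS = [scale, shift, relu, total]

def run(layers, x):
    """Apply the layers in order."""
    for layer in layers:
        x = layer(x)
    return x

def split_model(layers, split_index):
    """Split a sequential model into a head (mobile device) and a tail (edge server)."""
    return layers[:split_index], layers[split_index:]

def serialize(features):
    """Pack intermediate features as little-endian float32 values for transmission."""
    return struct.pack(f"<{len(features)}f", *features)

def deserialize(payload):
    """Unpack float32 values received by the edge server."""
    return list(struct.unpack(f"<{len(payload) // 4}f", payload))

head, tail = split_model(LAYERS, split_index=2)
x = [0.5, -1.0, 2.0]
payload = serialize(run(head, x))              # computed on the mobile device
prediction = run(tail, deserialize(payload))   # computed on the edge server
print(f"transmitted {len(payload)} bytes, prediction {prediction}")
```

The split point determines both how much work stays on the device (the head) and how many bytes cross the channel (the payload), which is the tradeoff the rest of this README measures.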

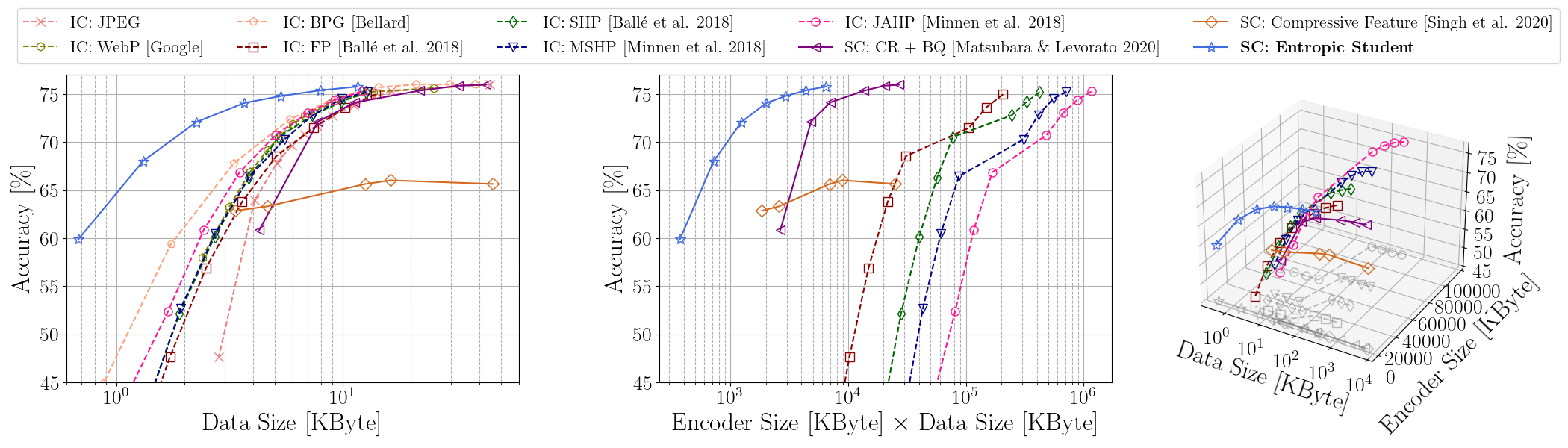

ImageNet (ILSVRC 2012): R-D (rate-distortion), ExR-D, and three-way tradeoffs for input compression and supervised compression with ResNet-50 as a reference model


Input compression is one way to reduce the amount of transmitted data, but it ends up transmitting information that is irrelevant to the supervised task. To achieve a better supervised rate-distortion tradeoff, we define supervised compression as learning compressed representations for supervised downstream tasks such as classification, detection, or segmentation. Specifically for split computing, we term the problem setting SC2 (Supervised Compression for Split Computing).

Note that the training process can be done offline (i.e., on a single device without splitting), and it is different from "split learning".

SC2 Metrics

1. Encoder Size (to be minimized)

Local processing cost should be minimized as local (mobile) devices usually have battery constraints and limited computing power. As a simple proxy for the computing costs, we measure the number of encoder parameters and define the encoder size as the total number of bits to represent the parameters of the encoder.

2. Data Size (to be minimized)

We want to penalize large amounts of data transferred from the mobile device to the edge server. Bits per pixel (BPP) does not capture this: it does not penalize the extra data sent when higher-resolution images are fed to downstream models to achieve higher model accuracy.

3. Model Accuracy (to be maximized)

While minimizing the two metrics, we want to maximize model accuracy (minimize supervised distortion). Example supervised distortions are accuracy, mean average precision (mAP), and mean intersection over union (mIoU) for image classification, object detection, and semantic segmentation, respectively.
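A small worked example may make the first two metrics concrete. It is a sketch under stated assumptions: the function names are hypothetical (not the sc2bench API), parameters are assumed to be stored as 32-bit floats, and all parameter counts and byte sizes are made up for illustration.

```python
def encoder_size_bits(param_counts, bits_per_param=32):
    """Encoder size: total bits needed to store all encoder parameters.

    bits_per_param=32 assumes float32 parameters (an assumption, not from the source).
    """
    return sum(param_counts) * bits_per_param

def bits_per_pixel(num_bytes, height, width):
    """BPP: compressed bits normalized by the number of input pixels."""
    return num_bytes * 8 / (height * width)

# Hypothetical encoder with two layers (parameter counts are made up).
encoder_params = [9_408, 36_864]
size_bits = encoder_size_bits(encoder_params)

# Two hypothetical compressed inputs at different resolutions.
low = {"bytes": 6_272, "height": 224, "width": 224}
high = {"bytes": 18_432, "height": 384, "width": 384}
bpp_low = bits_per_pixel(low["bytes"], low["height"], low["width"])
bpp_high = bits_per_pixel(high["bytes"], high["height"], high["width"])

print(f"encoder size: {size_bits} bits")
print(f"BPP: {bpp_low} vs {bpp_high}")
print(f"data size: {low['bytes']} vs {high['bytes']} bytes")
```

In this made-up case both inputs have the same BPP, yet the higher-resolution one sends roughly three times as many bytes. That gap is what the data size metric penalizes and BPP does not.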

Installation

```shell
pip install sc2bench
```

Virtual Environments

For pipenv users,

```shell
pipenv install --python 3.9
# or create your own pipenv environment
pipenv install sc2bench
```

Datasets

See instructions here

Checkpoints

You can download our checkpoints including trained model weights here.

Unzip the downloaded zip files under ./, then there will be ./resource/ckpt/.

Supervised Compression

- CR + BQ: "Neural Compression and Filtering for Edge-assisted Real-time Object Detection in Challenged Networks"

- End-to-End: "End-to-end Learning of Compressible Features"

- Entropic Student: "Supervised Compression for Resource-Constrained Edge Computing Systems"

README.md explains how to train/test implemented supervised compression methods.

Baselines: Input Compression

- Codec-based input compression: JPEG, WebP, BPG

- Neural input compression: Factorized Prior, Scale Hyperprior, Mean-scale Hyperprior, and Joint Autoregressive Hierarchical Prior

Each README.md gives instructions to run the baseline experiments.

Codec-based Feature Compression

```shell
# JPEG
python script/task/image_classification.py -test_only --config configs/ilsvrc2012/feature_compression/jpeg-resnet50.yaml
# WebP
python script/task/image_classification.py -test_only --config configs/ilsvrc2012/feature_compression/webp-resnet50.yaml
```
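To give a rough idea of what codec-based feature compression involves, the sketch below quantizes a feature vector to 8 bits and passes it through a general-purpose codec. It is not the repository's implementation: `zlib` is swapped in for JPEG/WebP so the example needs only the standard library, and `quantize_8bit`, `dequantize_8bit`, and the synthetic features are hypothetical.

```python
import math
import zlib

def quantize_8bit(features):
    """Min-max quantize floats to one byte each (values 0..255)."""
    lo, hi = min(features), max(features)
    scale = (hi - lo) / 255 if hi > lo else 1.0
    codes = bytes(min(255, round((v - lo) / scale)) for v in features)
    return codes, lo, scale

def dequantize_8bit(codes, lo, scale):
    """Map byte codes back to approximate float values."""
    return [lo + c * scale for c in codes]

# Synthetic, ReLU-like features (made up for illustration).
features = [max(0.0, math.sin(i / 10)) for i in range(1024)]

# Mobile device: quantize, then compress with a codec (zlib as a stand-in).
codes, lo, scale = quantize_8bit(features)
payload = zlib.compress(codes, 9)

# Edge server: decompress, then dequantize before running the rest of the model.
restored = dequantize_8bit(zlib.decompress(payload), lo, scale)
max_error = max(abs(a - b) for a, b in zip(features, restored))

print(f"raw float32: {4 * len(features)} bytes, compressed: {len(payload)} bytes")
print(f"max reconstruction error: {max_error:.5f} (step size {scale:.5f})")
```

The quantization step bounds the reconstruction error by half the step size, while the codec determines how many bytes are actually transmitted.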

Citation

[Paper] [Preprint]

```bibtex
@article{matsubara2023sc2,
  title={{SC2 Benchmark: Supervised Compression for Split Computing}},
  author={Matsubara, Yoshitomo and Yang, Ruihan and Levorato, Marco and Mandt, Stephan},
  journal={Transactions on Machine Learning Research},
  issn={2835-8856},
  year={2023},
  url={https://openreview.net/forum?id=p28wv4G65d}
}
```

Note

To measure data size per sample precisely, keep the test batch size at 1 when testing. Some baseline modules may expect a larger batch size if you have multiple GPUs. In that case, add CUDA_VISIBLE_DEVICES=0 before your execution command (e.g., sh, bash, python) to force the script to use a single GPU (GPU 0 in this case).

For instance, to run an input compression experiment using factorized prior (pretrained input compression model) and ResNet-50 (pretrained classifier):

```shell
CUDA_VISIBLE_DEVICES=0 sh script/neural_input_compression/ilsvrc2012-image_classification.sh factorized_prior-resnet50 8
```
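If you launch experiments from Python rather than from a shell, the same restriction can be applied by passing the environment variable to the child process. The sketch below is only an assumption about how one might do that: `run_on_single_gpu` is a hypothetical helper, and the child command simply echoes the variable so the example runs without a GPU.

```python
import os
import subprocess
import sys

def run_on_single_gpu(command, gpu_id=0):
    """Run a command with CUDA_VISIBLE_DEVICES restricted to one GPU.

    Hypothetical helper: the parent environment is copied so that only the
    child process sees the restricted device list.
    """
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu_id))
    return subprocess.run(command, env=env, capture_output=True, text=True, check=True)

# Stand-in for an experiment script: print the variable the child process sees.
child = [sys.executable, "-c", "import os; print(os.environ['CUDA_VISIBLE_DEVICES'])"]
result = run_on_single_gpu(child, gpu_id=0)
visible = result.stdout.strip()
print(f"child process sees CUDA_VISIBLE_DEVICES={visible}")
```

Replacing `child` with the actual experiment command would have the same effect as prefixing it with `CUDA_VISIBLE_DEVICES=0` in the shell.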

Issues / Questions / Requests

The documentation is a work in progress. In the meantime, feel free to create an issue if you find a bug.

If you have a question or a feature request, start a new discussion here.

References

- PyTorch (torchvision)

- PyTorch Image Models (timm)

- CompressAI

- torchdistill

- Johannes Ballé, David Minnen, Saurabh Singh, Sung Jin Hwang and Nick Johnston. "Variational image compression with a scale hyperprior" (ICLR 2018)

- David Minnen, Johannes Ballé and George D. Toderici. "Joint Autoregressive and Hierarchical Priors for Learned Image Compression" (NeurIPS 2018)

- Yoshitomo Matsubara and Marco Levorato. "Neural Compression and Filtering for Edge-assisted Real-time Object Detection in Challenged Networks" (ICPR 2020)

- Saurabh Singh, Sami Abu-El-Haija, Nick Johnston, Johannes Ballé, Abhinav Shrivastava and George Toderici. "End-to-end Learning of Compressible Features" (ICIP 2020)

- Yoshitomo Matsubara, Ruihan Yang, Marco Levorato and Stephan Mandt. "Supervised Compression for Resource-Constrained Edge Computing Systems" (WACV 2022)

Owner

- Name: Yoshitomo Matsubara

- Login: yoshitomo-matsubara

- Kind: user

- Location: Washington, United States

- Website: http://labusers.net/~ymatsubara/

- Twitter: yoshitomo_cs

- Repositories: 10

- Profile: https://github.com/yoshitomo-matsubara

Applied Scientist at Amazon Alexa AI, Ph.D. in Computer Science


GitHub Events

Total

- Release event: 1

- Watch event: 7

- Issue comment event: 2

- Push event: 80

- Pull request event: 46

- Fork event: 3

- Create event: 1

Last Year

- Release event: 1

- Watch event: 7

- Issue comment event: 2

- Push event: 80

- Pull request event: 46

- Fork event: 3

- Create event: 1

Committers

Last synced: about 1 year ago

Top Committers

| Name | Email | Commits |

|---|---|---|

| Yoshitomo Matsubara | y****m@u****u | 347 |

| cdyrhjohn | c****n@g****m | 1 |


Issues and Pull Requests

Last synced: 6 months ago

All Time

- Total issues: 4

- Total pull requests: 149

- Average time to close issues: 3 days

- Average time to close pull requests: about 13 hours

- Total issue authors: 4

- Total pull request authors: 4

- Average comments per issue: 2.5

- Average comments per pull request: 0.03

- Merged pull requests: 148

- Bot issues: 0

- Bot pull requests: 0

Past Year

- Issues: 0

- Pull requests: 30

- Average time to close issues: N/A

- Average time to close pull requests: 3 days

- Issue authors: 0

- Pull request authors: 3

- Average comments per issue: 0

- Average comments per pull request: 0.13

- Merged pull requests: 29

- Bot issues: 0

- Bot pull requests: 0

Top Authors

Issue Authors

- andreamigliorati (2)

- lixinghe1999 (1)

- yoshitomo-matsubara (1)

- aliasboink (1)

- ZqCATCAT (1)

Pull Request Authors

- yoshitomo-matsubara (157)

- milinzhang (2)

- MatteoMendula (2)

- buggyyang (1)


Dependencies

- actions/checkout v2 composite

- actions/setup-python v2 composite

- pypa/gh-action-pypi-publish 27b31702a0e7fc50959f5ad993c78deac1bdfc29 composite

- compressai >=1.1.9

- cython *

- matplotlib *

- numpy *

- pycocotools >=2.0.2

- sc2bench *

- scipy *

- seaborn *

- timm *

- torch >=1.10.0

- torchdistill >=0.3.1

- torchvision >=0.11.1

- compressai >=1.1.8

- cython *

- numpy *

- pycocotools >=2.0.2

- pyyaml >=5.4.1

- scipy *

- timm >=0.4.12

- torch >=1.10.0

- torchdistill >=0.2.7

- torchvision >=0.11.1

- actions/checkout v3 composite

- actions/setup-python v2 composite

- peaceiris/actions-gh-pages v3 composite

- _libgcc_mutex 0.1

- _openmp_mutex 4.5

- ca-certificates 2021.10.8

- certifi 2021.10.8

- cycler 0.11.0

- cython 0.29.24

- freetype 2.10.4

- icu 67.1

- kiwisolver 1.3.1

- ld_impl_linux-64 2.35.1

- libblas 3.9.0

- libcblas 3.9.0

- libffi 3.3

- libgcc-ng 9.3.0

- libgfortran-ng 11.2.0

- libgfortran5 11.2.0

- libgomp 9.3.0

- liblapack 3.9.0

- libopenblas 0.3.17

- libpng 1.6.37

- libstdcxx-ng 9.3.0

- matplotlib-base 3.2.2

- ncurses 6.3

- numpy 1.20.3

- openssl 1.1.1l

- pip 21.2.4

- pycocotools 2.0.2

- pyparsing 3.0.6

- python 3.8.12

- python-dateutil 2.8.2

- python_abi 3.8

- readline 8.1

- six 1.16.0

- sqlite 3.36.0

- tk 8.6.11

- tornado 6.1

- wheel 0.37.0

- xz 5.2.5

- zlib 1.2.11