https://github.com/fgnt/meeteval

MeetEval - A meeting transcription evaluation toolkit

Science Score: 67.0%

This score indicates how likely this project is to be science-related based on various indicators:

-

○CITATION.cff file

-

✓codemeta.json file

Found codemeta.json file -

✓.zenodo.json file

Found .zenodo.json file -

✓DOI references

Found 8 DOI reference(s) in README -

✓Academic publication links

Links to: arxiv.org, ieee.org -

✓Committers with academic emails

1 of 9 committers (11.1%) from academic institutions -

✓Institutional organization owner

Organization fgnt has institutional domain (nt.uni-paderborn.de) -

○JOSS paper metadata

-

○Scientific vocabulary similarity

Low similarity (9.6%) to scientific vocabulary

Keywords

Repository

MeetEval - A meeting transcription evaluation toolkit

Basic Info

Statistics

- Stars: 114

- Watchers: 8

- Forks: 15

- Open Issues: 6

- Releases: 0

Topics

Metadata Files

README.md

MeetEval

A meeting transcription evaluation toolkit

Features

Metrics for meeting transcription evaluation

- Standard WER for single utterances (Called SISO WER in MeetEval)

meeteval-wer wer -r ref -h hyp - Concatenated minimum-Permutation Word Error Rate (cpWER)

meeteval-wer cpwer -r ref.stm -h hyp.stm - Optimal Reference Combination Word Error Rate (ORC WER)

meeteval-wer orcwer -r ref.stm -h hyp.stm - Fast Greedy Approximation of Optimal Reference Combination Word Error Rate (greedy ORC WER)

meeteval-wer greedy_orcwer -r ref.stm -h hyp.stm - Multi-speaker-input multi-stream-output Word Error Rate (MIMO WER)

meeteval-wer mimower -r ref.stm -h hyp.stm - Time-Constrained Multi-speaker-input multi-stream-output Word Error Rate (tcMIMO WER)

meeteval-wer tcmimower -r ref.stm -h hyp.stm --collar 5 - Time-Constrained minimum-Permutation Word Error Rate (tcpWER)

meeteval-wer tcpwer -r ref.stm -h hyp.stm --collar 5 - Time-Constrained Optimal Reference Combination Word Error Rate (tcORC WER)

meeteval-wer tcorcwer -r ref.stm -h hyp.stm --collar 5 - Fast Greedy Approximation of Time-Constrained Optimal Reference Combination Word Error Rate (greedy tcORC WER)

meeteval-wer greedy_tcorcwer -r ref.stm -h hyp.stm --collar 5 - Diarization-Invariant cpWER (DI-cpWER)

meeteval-wer greedy_dicpwer -r ref.stm -h hyp.stm - Diarization Error Rate (DER) by wrapping mdeval like dscore (see https://github.com/fgnt/meeteval/issues/97#issuecomment-2508140402)

meeteval-der dscore -r ref.stm -h hyp.stm --collar .25 - Diarization Error Rate (DER) by wrapping mdeval

meeteval-der md_eval_22 -r ref.stm -h hyp.stm --collar .25

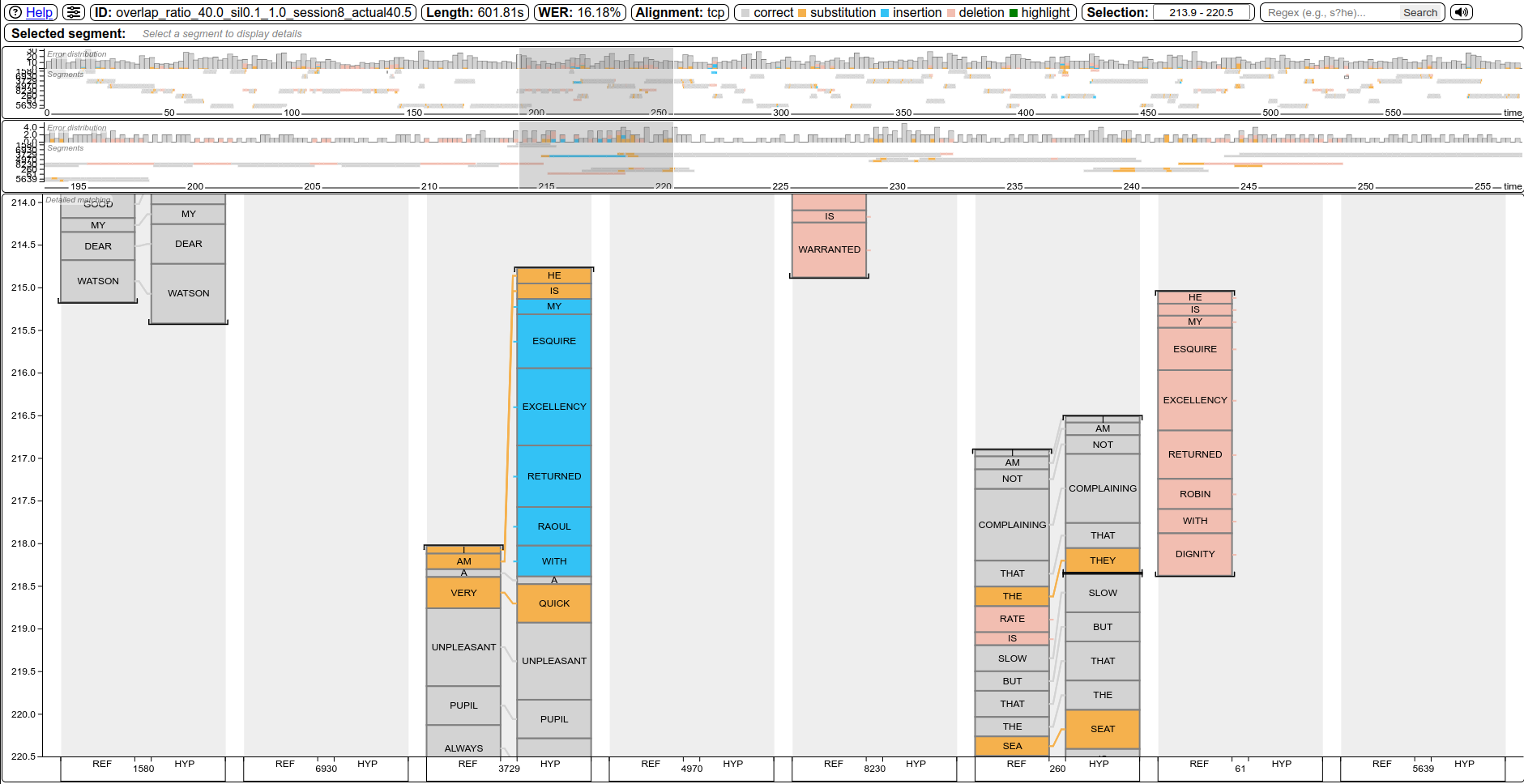

Error visualization

An alignment visualization tool for system analysis. Supports most WER definitions from above. Helpful for spotting errors. View examples!

File format conversion

MeetEval's meeteval-io command converts between all supported file types. See docs below.

Installation

From PyPI

shell

pip install meeteval

From source

shell

git clone https://github.com/fgnt/meeteval

pip install -e ./meeteval

Command-line interface

[!TIP] Useful shell aliases are defined in shell_aliases.

MeetEval supports the following file formats as input:

- Segmental Time Mark (STM)

- Time Marked Conversation (CTM)

- SEGment-wise Long-form Speech Transcription annotation (SegLST), the file format used in the CHiME challenges

- Rich Transcription Time Marked (RTTM) files (only for Diarization Error Rate)

Conversion between formats is supported via the meeteval-io command.

[!NOTE]

MeetEvaldoes not support alternate transcripts (e.g.,"i've { um / uh / @ } as far as i'm concerned").

The command-line interface is available as meeteval-wer or python -m meeteval.wer with the following signature:

```shell python -m meeteval.wer [orcwer|mimower|cpwer|tcpwer|tcorcwer] -h examplefiles/hyp.stm -r examplefiles/ref.stm

or

meeteval-wer [orcwer|mimower|cpwer|tcpwer|tcorcwer] -h examplefiles/hyp.stm -r examplefiles/ref.stm ```

You can add --help to any command to get more information about the available options.

The command name orcwer, mimower, cpwer and tcpwer selects the metric to use.

By default, the hypothesis files is used to create the template for the average

(e.g. hypothesis.json) and perreco `hypothesisper_reco.jsonfile.

They can be changed with--average-outand--per-reco-out.

.jsonand.yaml` are the supported suffixes.

More examples can be found in tests/test_cli.py.

File Formats

SEGment-wise Long-form Speech Transcription annotation (SegLST)

The SegLST format was used in the CHiME-7 challenge and is the default format for MeetEval.

The SegLST format is stored in JSON format and contains a list of segments.

Each segment should have a minimum set of keys "session_id" and "words".

Depending on the metric, additional keys may be required ("speaker", "start_time", "end_time").

An example is shown below:

python

[

{

"session_id": "recordingA", # Required

"words": "The quick brown fox jumps over the lazy dog", # Required for WER metrics

"speaker": "Alice", # Required for metrics that use speaker information (cpWER, ORC WER, MIMO WER)

"start_time": 0, # Required for time-constrained metrics (tcpWER, tcORC-WER, DER, ...)

"end_time": 1, # Required for time-constrained metrics (tcpWER, tcORC-WER, DER, ...)

"audio_path": "path/to/recordingA.wav" # Any additional keys can be included

},

...

]

Another example can be found here.

Segmental Time Mark (STM)

Each line in an STM file represents one "utterance" and is defined as

STM :== <filename> <channel> <speaker_id> <begin_time> <end_time> <transcript>

where

- filename: name of the recording

- channel: ignored by MeetEval

- speaker_id: ID of the speaker or system output stream/channel (not microphone channel)

- begin_time: in seconds, used to find the order of the utterances

- end_time: in seconds

- transcript: space-separated list of words

for example:

STM

recording1 1 Alice 0 0 Hello Bob.

recording1 1 Bob 1 0 Hello Alice.

recording1 1 Alice 2 0 How are you?

recording2 1 Alice 0 0 Hello Carol.

;; ...

An example STM file can be found in here.

Time Marked Conversation (CTM)

The CTM format is defined as

CTM :== <filename> <channel> <begin_time> <duration> <word> [<confidence>]

for the hypothesis (one file per speaker).

You have to supply one CTM file for each system output channel using multiple -h arguments since CTM files don't encode speaker or system output channel information (the channel field has a different meaning: left or right microphone).

For example:

shell

meeteval-wer orcwer -h hyp1.ctm -h hyp2.ctm -r reference.stm

[!NOTE] Note that the

LibriCSSbaseline recipe produces oneCTMfile which merges the speakers, so that it cannot be applied straight away. We recommend to useSTMorSegLSTfiles.

File format conversion

MeetEval's meeteval-io command converts between all supported file types, for example:

meeteval-io seglst2stm example_files/hyp.seglst.json -meeteval-io stm2rttm example_files/hyp.stm -(words are omitted)meeteval-io ctm2stm example_files/hyp*.ctm -(caution: one segment is created for every word!)

meeteval-io --help lists all supported conversions. shell_aliases contains a set of aliases for faster access to these commands.

Copy what you need into your .bashrc or .zshrc.

In Python code, you can modify the data however you like and convert to a different file format in a few lines:

```python import meeteval data = meeteval.io.load('examplefiles/hyp.stm').toseglst()

for s in data: # Add or modify the data in-placee s['speaker'] = ...

Dump in any format

meeteval.io.dump(data, 'hyp.rttm') ```

Python interface

For all metrics a Low-level and high-level interface is available.

[!TIP] You want to use the high-level for computing metrics over a full dataset.

You want to use the low-level interface for computing metrics for single examples or when your data is represented as Python structures, e.g., nested lists of strings.

Low-level interface

All WERs have a low-level interface in the meeteval.wer module that allows computing the WER for single examples.

The functions take the reference and hypothesis as input and return an ErrorRate object.

The ErrorRate bundles statistics (errors, total number of words) and potential auxiliary information (e.g., assignment for ORC WER) together with the WER.

```python import meeteval

SISO WER

wer = meeteval.wer.wer.siso.sisoworderror_rate( reference='The quick brown fox jumps over the lazy dog', hypothesis='The kwick brown fox jump over lazy ' ) print(wer)

ErrorRate(error_rate=0.4444444444444444, errors=4, length=9, insertions=0, deletions=2, substitutions=2)

cpWER

wer = meeteval.wer.wer.cp.cpworderror_rate( reference=['The quick brown fox', 'jumps over the lazy dog'], hypothesis=['The kwick brown fox', 'jump over lazy '] ) print(wer)

CPErrorRate(errorrate=0.4444444444444444, errors=4, length=9, insertions=0, deletions=2, substitutions=2, missedspeaker=0, falarmspeaker=0, scoredspeaker=2, assignment=((0, 0), (1, 1)))

ORC-WER

wer = meeteval.wer.wer.orc.orcworderror_rate( reference=['The quick brown fox', 'jumps over the lazy dog'], hypothesis=['The kwick brown fox', 'jump over lazy '] ) print(wer)

OrcErrorRate(error_rate=0.4444444444444444, errors=4, length=9, insertions=0, deletions=2, substitutions=2, assignment=(0, 1))

```

The input format can be a (list of) strings or an object representing a file format from meeteval.io:

```python import meeteval wer = meeteval.wer.wer.cp.cpworderror_rate( reference = meeteval.io.STM.parse('recordingA 1 Alice 0 1 The quick brown fox jumps over the lazy dog'), hypothesis = meeteval.io.STM.parse('recordingA 1 spk-1 0 1 The kwick brown fox jump over lazy ') ) print(wer)

CPErrorRate(errorrate=0.4444444444444444, errors=4, length=9, insertions=0, deletions=2, substitutions=2, referenceselfoverlap=SelfOverlap(overlaprate=Decimal('0'), overlaptime=0, totaltime=Decimal('1')), hypothesisselfoverlap=SelfOverlap(overlaprate=Decimal('0'), overlaptime=0, totaltime=Decimal('1')), missedspeaker=0, falarmspeaker=0, scoredspeaker=1, assignment=(('Alice', 'spk-1'), ))

```

All low-level interfaces come with a single-example function (as show above) and a batch function that computes the WER for multiple examples at once.

The batch function is postfixed with _multifile and is similar to the high-level interface without fancy input format handling.

To compute the average over multiple ErrorRates, use meeteval.wer.combine_error_rates.

Note that the combined WER is not the average over the error rates, but the error rate that results from combining the errors and lengths of all error rates.

combine_error_rates also discards any information that cannot be aggregated over multiple examples (such as the ORC WER assignment).

For example with the cpWER: ```python import meeteval.wer.wer.siso

wers = meeteval.wer.wer.cp.cpworderrorratemultifile( reference={ 'recordingA': {'speakerA': 'First example', 'speakerB': 'First example second speaker'}, 'recordingB': {'speakerA': 'Second example'}, }, hypothesis={ 'recordingA': ['First example with errors', 'First example second speaker'], 'recordingB': ['Second example', 'Overestimated speaker'], } ) print(wers)

{

'recordingA': CPErrorRate(errorrate=0.3333333333333333, errors=2, length=6, insertions=2, deletions=0, substitutions=0, missedspeaker=0, falarmspeaker=0, scoredspeaker=2, assignment=(('speakerA', 0), ('speakerB', 1))),

'recordingB': CPErrorRate(errorrate=1.0, errors=2, length=2, insertions=2, deletions=0, substitutions=0, missedspeaker=0, falarmspeaker=1, scoredspeaker=1, assignment=(('speakerA', 0), (None, 1)))

}

Use combineerrorrates to compute an "overall" WER over multiple examples

avg = meeteval.wer.combineerrorrates(wers) print(avg)

CPErrorRate(errorrate=0.5, errors=4, length=8, insertions=4, deletions=0, substitutions=0, missedspeaker=0, falarmspeaker=1, scoredspeaker=3)

```

High-level interface

All WERs have a high-level Python interface available directly in the meeteval.wer module that mirrors the Command-line interface and accepts the formats from meeteval.io as input.

All of these functions require the input format to contain a session-ID and output a dict mapping from session-ID to the result of that session

```python import meeteval

File Paths

wers = meeteval.wer.tcpwer('examplefiles/ref.stm', 'examplefiles/hyp.stm', collar=5)

Loaded files

wers = meeteval.wer.tcpwer(meeteval.io.load('examplefiles/ref.stm'), meeteval.io.load('examplefiles/hyp.stm'), collar=5)

Objects

wers = meeteval.wer.tcpwer( reference=meeteval.io.STM.parse(''' recordingA 1 Alice 0 1 The quick brown fox jumps over the lazy dog recordingB 1 Bob 0 1 The quick brown fox jumps over the lazy dog '''), hypothesis=meeteval.io.STM.parse(''' recordingA 1 spk-1 0 1 The kwick brown fox jump over lazy recordingB 1 spk-1 0 1 The kwick brown fox jump over lazy '''), collar=5, ) print(wers)

{

'recordingA': CPErrorRate(errorrate=0.4444444444444444, errors=4, length=9, insertions=0, deletions=2, substitutions=2, referenceselfoverlap=SelfOverlap(overlaprate=Decimal('0'), overlaptime=0, totaltime=Decimal('1')), hypothesisselfoverlap=SelfOverlap(overlaprate=Decimal('0'), overlaptime=0, totaltime=Decimal('1')), missedspeaker=0, falarmspeaker=0, scoredspeaker=1, assignment=(('Alice', 'spk-1'),)),

'recordingB': CPErrorRate(errorrate=0.4444444444444444, errors=4, length=9, insertions=0, deletions=2, substitutions=2, referenceselfoverlap=SelfOverlap(overlaprate=Decimal('0'), overlaptime=0, totaltime=Decimal('1')), hypothesisselfoverlap=SelfOverlap(overlaprate=Decimal('0'), overlaptime=0, totaltime=Decimal('1')), missedspeaker=0, falarmspeaker=0, scoredspeaker=1, assignment=(('Bob', 'spk-1'),))

}

avg = meeteval.wer.combineerrorrates(wers) print(avg)

CPErrorRate(errorrate=0.4444444444444444, errors=8, length=18, insertions=0, deletions=4, substitutions=4, referenceselfoverlap=SelfOverlap(overlaprate=Decimal('0'), overlaptime=0, totaltime=Decimal('2')), hypothesisselfoverlap=SelfOverlap(overlaprate=Decimal('0'), overlaptime=0, totaltime=Decimal('2')), missedspeaker=0, falarmspeaker=0, scoredspeaker=2)

```

Aligning sequences

Sequences can be aligned, similar to kaldialign.align, using the tcpWER matching:

```python

import meeteval

meeteval.wer.wer.timeconstrained.align([{'words': 'a b', 'starttime': 0, 'endtime': 1}], [{'words': 'a c', 'starttime': 0, 'endtime': 1}, {'words': 'd', 'starttime': 2, 'endtime': 3}], collar=5)

alignment = meeteval.wer.wer.timeconstrained.align([{'words': 'a b', 'starttime': 0, 'endtime': 1}], [{'words': 'a c', 'starttime': 0, 'endtime': 1}, {'words': 'd', 'starttime': 2, 'endtime': 3}], collar=5)

print(alignment)

[('a', 'a'), ('b', 'c'), ('*', 'd')]

```

meeteval.wer.wer.time_constrained.print_alignment pretty prints an an alignment with timestmaps:

```python import meeteval alignment = meeteval.wer.wer.timeconstrained.align( [ {"words": "hi", "starttime": 0.93, "endtime": 2.03}, {"words": "good how are you", "starttime": 3.15, "endtime": 5.36}, {"words": "i'm leigh adams", "starttime": 7.24, "endtime": 8.36}, {"words": "pretty good now and you", "starttime": 9.44, "endtime": 12.27}, {"words": "yeah", "starttime": 15.49, "endtime": 16.95}, ], [ {"words": "hi", "starttime": 0.93, "endtime": 2.03}, {"words": "are you", "starttime": 3.15, "endtime": 5.36}, {"words": "leigh adams", "starttime": 7.24, "endtime": 8.36}, {"words": "good now and", "starttime": 9.44, "endtime": 12.27}, {"words": "yep", "starttime": 15.49, "end_time": 16.95}, ], style='seglst', collar=5, )

meeteval.wer.wer.timeconstrained.printalignment(alignment)

0.93 2.03 hi - hi 1.48 1.48

3.15 3.83 good + *

3.83 4.34 how + *

4.34 4.85 are - are 3.70 3.70

4.85 5.36 you - you 4.81 4.81

7.24 7.50 i'm + *

7.50 7.93 leigh - leigh 7.52 7.52

7.93 8.36 adams - adams 8.08 8.08

9.44 10.33 pretty + *

10.33 10.93 good - good 10.01 10.01

10.93 11.38 now - now 11.00 11.00

11.38 11.82 and - and 11.85 11.85

11.82 12.27 you + yep 16.22 16.22

15.49 16.95 yeah + *

```

You can use meeteval.wer.wer.time_constrained.format_alignment to obtain a formatted string without printing.

Visualization

[!TIP] Try it in the browser! https://fgnt.github.io/meeteval_viz

Command-line interface

| Description | Preview |

|------------|-------|

| Standard callmeeteval-viz html --alignment tcp -r ref.stm -h hyp.stm

Replace tcp with cp, orc, greedy_orc, tcorc, greedy_tcorc, greedy_dicp, or greedy_ditcp to use another WER for the alignment. |  |

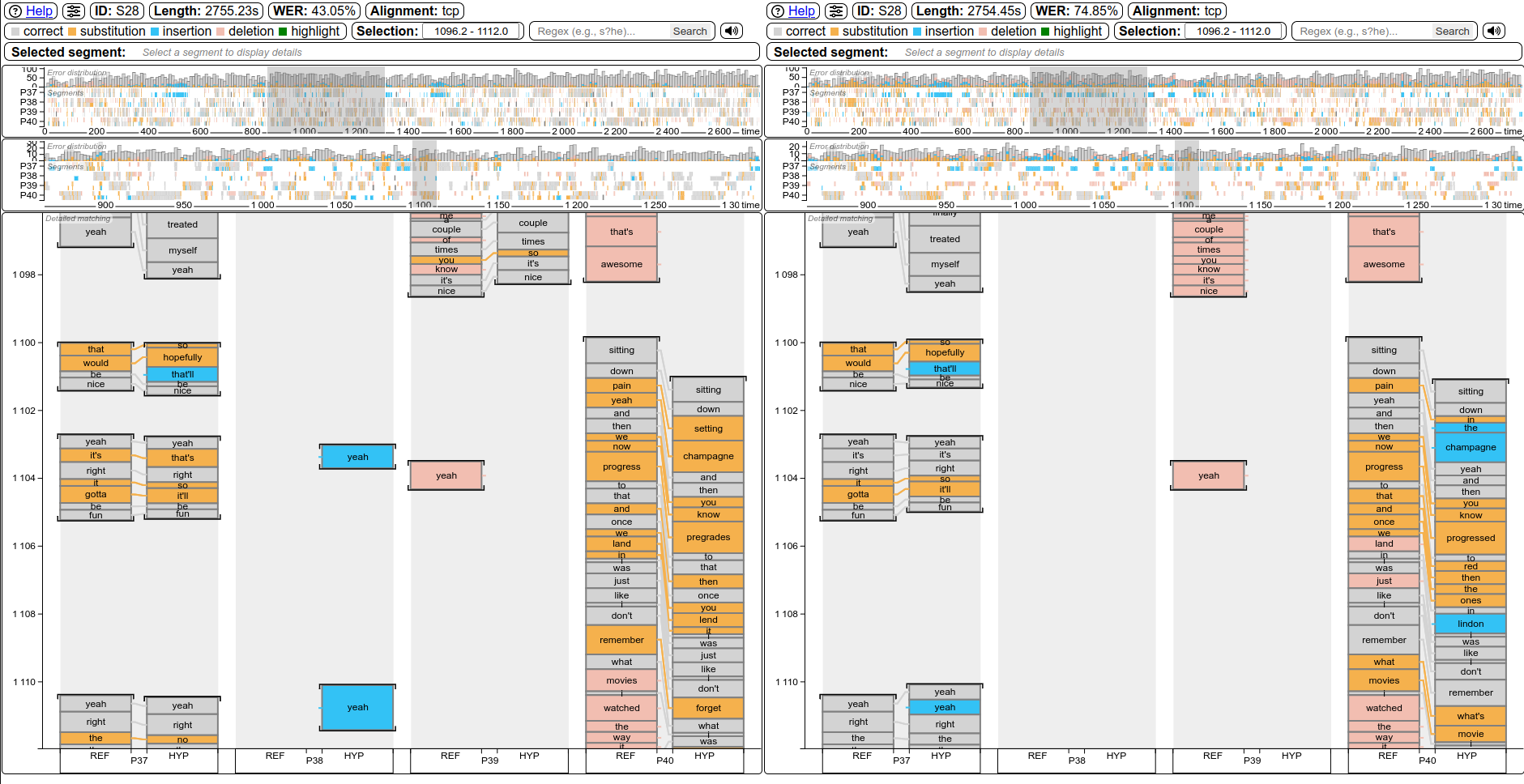

| Synced side-by-side system comparison

|

| Synced side-by-side system comparison

Same reference, but different hypothesismeeteval-viz html --alignment tcp -r ref.stm -h hyp1.stm -h hyp2.stm |  |

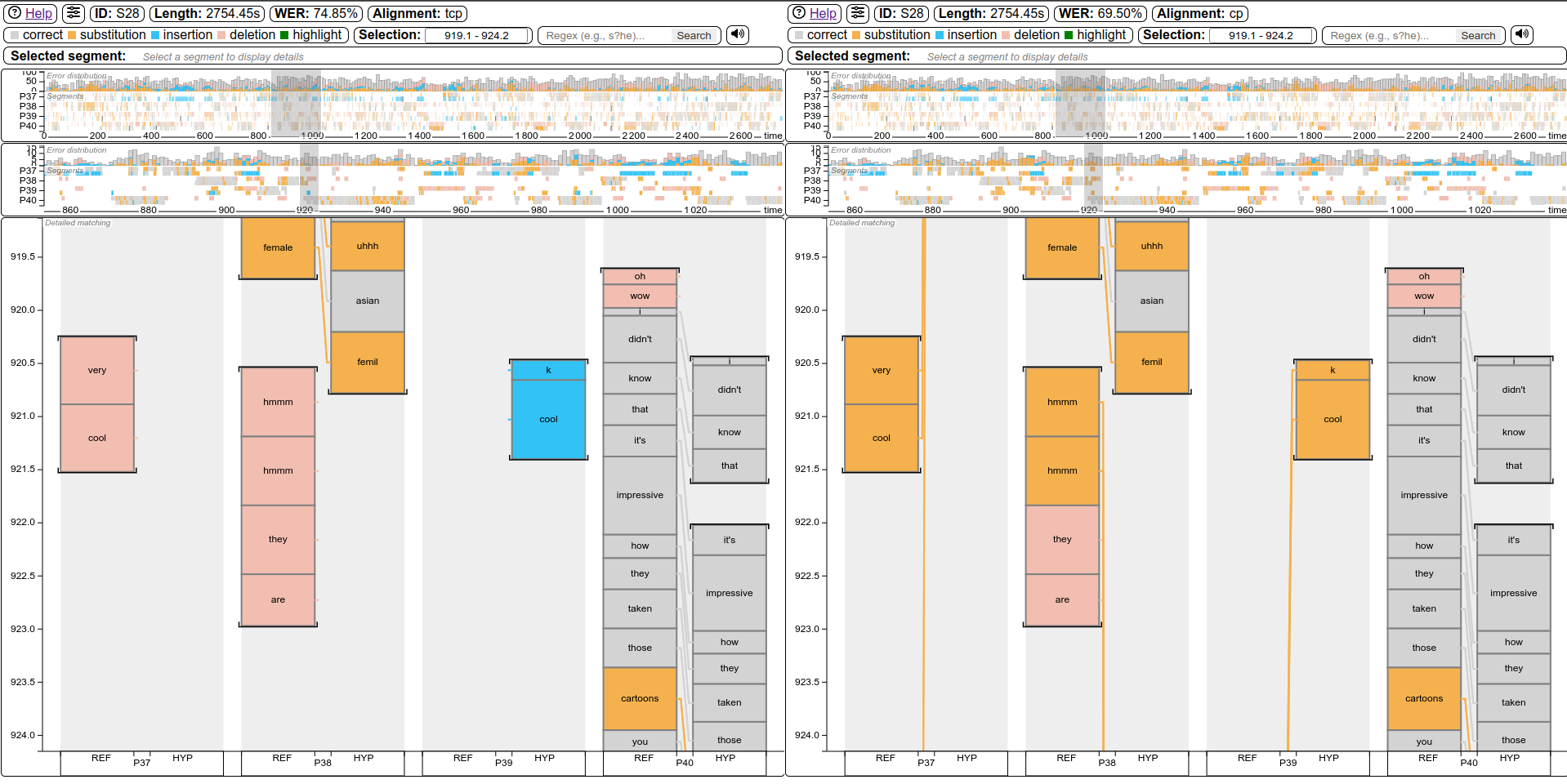

| Synced side-by-side alignment comparison

|

| Synced side-by-side alignment comparisonmeeteval-viz html --alignment tcp cp -r ref.stm -h hyp.stm |  |

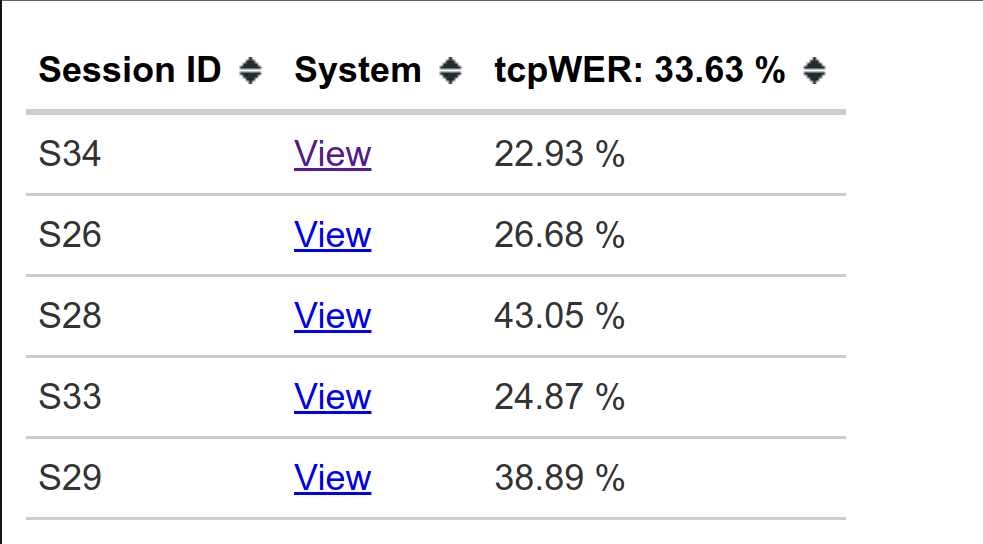

| Session browser will be created with each call |

|

| Session browser will be created with each call |  |

|

Each command will generate a viz folder (customize with -o OUT), that contains an index.html (session browser), side_by_side_sync.html (used by index.html, can be ignored) and for each session, system and alignment a standalone/shareable HTML file.

Python / Jupyter interface

```python import meeteval from meeteval.viz.visualize import AlignmentVisualization

folder = r'https://raw.githubusercontent.com/fgnt/meeteval/main/' av = AlignmentVisualization( meeteval.io.load(folder + 'examplefiles/ref.stm').groupby('filename')['recordingA'], meeteval.io.load(folder + 'examplefiles/hyp.stm').groupby('filename')['recordingA'] )

display(av) # Jupyter

av.dump('viz.html') # Create standalone HTML file

```

Cite

The toolkit and the tcpWER were presented at the CHiME-2023 workshop (Computational Hearing in Multisource Environments) with the paper "MeetEval: A Toolkit for Computation of Word Error Rates for Meeting Transcription Systems".

bibtex

@InProceedings{MeetEval23,

author = {von Neumann, Thilo and Boeddeker, Christoph and Delcroix, Marc and Haeb-Umbach, Reinhold},

title = {{MeetEval}: A Toolkit for Computation of Word Error Rates for Meeting Transcription Systems},

year = {2023},

booktitle = {Proc. 7th International Workshop on Speech Processing in Everyday Environments (CHiME 2023)},

pages = {27--32},

doi = {10.21437/CHiME.2023-6}

}

The MIMO WER and efficient implementation of ORC WER are presented in the paper "On Word Error Rate Definitions and their Efficient Computation for Multi-Speaker Speech Recognition Systems".

bibtex

@InProceedings{MIMO23,

author = {von Neumann, Thilo and Boeddeker, Christoph and Kinoshita, Keisuke and Delcroix, Marc and Haeb-Umbach, Reinhold},

title = {On Word Error Rate Definitions and their Efficient Computation for Multi-Speaker Speech Recognition Systems},

booktitle = {ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

year = {2023},

doi = {10.1109/ICASSP49357.2023.10094784}

}

Owner

- Name: Department of Communications Engineering University of Paderborn

- Login: fgnt

- Kind: organization

- Location: Paderborn, Germany

- Website: http://nt.uni-paderborn.de

- Repositories: 37

- Profile: https://github.com/fgnt

GitHub Events

Total

- Issues event: 9

- Watch event: 34

- Delete event: 6

- Issue comment event: 34

- Push event: 60

- Pull request event: 34

- Pull request review event: 46

- Pull request review comment event: 35

- Fork event: 1

- Create event: 10

Last Year

- Issues event: 9

- Watch event: 34

- Delete event: 6

- Issue comment event: 34

- Push event: 60

- Pull request event: 34

- Pull request review event: 46

- Pull request review comment event: 35

- Fork event: 1

- Create event: 10

Committers

Last synced: 5 months ago

Top Committers

| Name | Commits | |

|---|---|---|

| Thilo von Neumann | t****n@m****e | 485 |

| Christoph Boeddeker | c****j@m****e | 219 |

| Christoph Boeddeker | b****r@u****m | 11 |

| Thilo von Neumann | t****n@l****e | 3 |

| Robin | 1****3@u****m | 2 |

| vieting | 4****g@u****m | 2 |

| Hervé BREDIN | h****n@u****m | 1 |

| Jakob Kienegger | 1****r@u****m | 1 |

| Soheyl | 1****l@u****m | 1 |

Committer Domains (Top 20 + Academic)

Issues and Pull Requests

Last synced: 5 months ago

All Time

- Total issues: 13

- Total pull requests: 168

- Average time to close issues: 5 days

- Average time to close pull requests: 5 days

- Total issue authors: 10

- Total pull request authors: 8

- Average comments per issue: 3.08

- Average comments per pull request: 0.57

- Merged pull requests: 154

- Bot issues: 0

- Bot pull requests: 0

Past Year

- Issues: 5

- Pull requests: 36

- Average time to close issues: 7 days

- Average time to close pull requests: 3 days

- Issue authors: 4

- Pull request authors: 4

- Average comments per issue: 3.8

- Average comments per pull request: 0.86

- Merged pull requests: 30

- Bot issues: 0

- Bot pull requests: 0

Top Authors

Issue Authors

- desh2608 (2)

- mubingshen (2)

- boeddeker (2)

- m-wiesner (1)

- m-bain (1)

- SamuelBroughton (1)

- mdeegen (1)

- s0h3yl (1)

- AntoineBlanot (1)

- D-VR (1)

Pull Request Authors

- thequilo (111)

- boeddeker (47)

- allrob23 (2)

- popcornell (2)

- hbredin (2)

- jkienegger (2)

- s0h3yl (1)

- vieting (1)

Top Labels

Issue Labels

Pull Request Labels

Packages

- Total packages: 1

-

Total downloads:

- pypi 2,385 last-month

- Total dependent packages: 0

- Total dependent repositories: 0

- Total versions: 9

- Total maintainers: 1

pypi.org: meeteval

- Homepage: https://github.com/fgnt/meeteval/

- Documentation: https://meeteval.readthedocs.io/

- License: MIT

-

Latest release: 0.4.3

published 6 months ago

Rankings

Maintainers (1)

Dependencies

- actions/checkout v3 composite

- actions/setup-python v4 composite

- editdistance *

- hypothesis *

- pytest *

- editdistance *

- scipy *

- typing_extensions *

- actions/checkout v3 composite

- actions/setup-python v4 composite

- actions/checkout master composite

- actions/setup-python v4 composite

- pypa/gh-action-pypi-publish release/v1 composite