crosslingual-coreference

A multi-lingual approach to AllenNLP CoReference Resolution along with a wrapper for spaCy.

https://github.com/davidberenstein1957/crosslingual-coreference

Science Score: 44.0%

This score indicates how likely this project is to be science-related based on various indicators:

- ✓ CITATION.cff file: found
- ✓ codemeta.json file: found
- ✓ .zenodo.json file: found
- ○ DOI references
- ○ Academic publication links
- ○ Committers with academic emails
- ○ Institutional organization owner
- ○ JOSS paper metadata
- ○ Scientific vocabulary similarity: low similarity (10.4%) to scientific vocabulary

Keywords

Repository


Basic Info

Statistics

- Stars: 108

- Watchers: 4

- Forks: 19

- Open Issues: 10

- Releases: 12

Topics

Metadata Files

README.md

Crosslingual Coreference

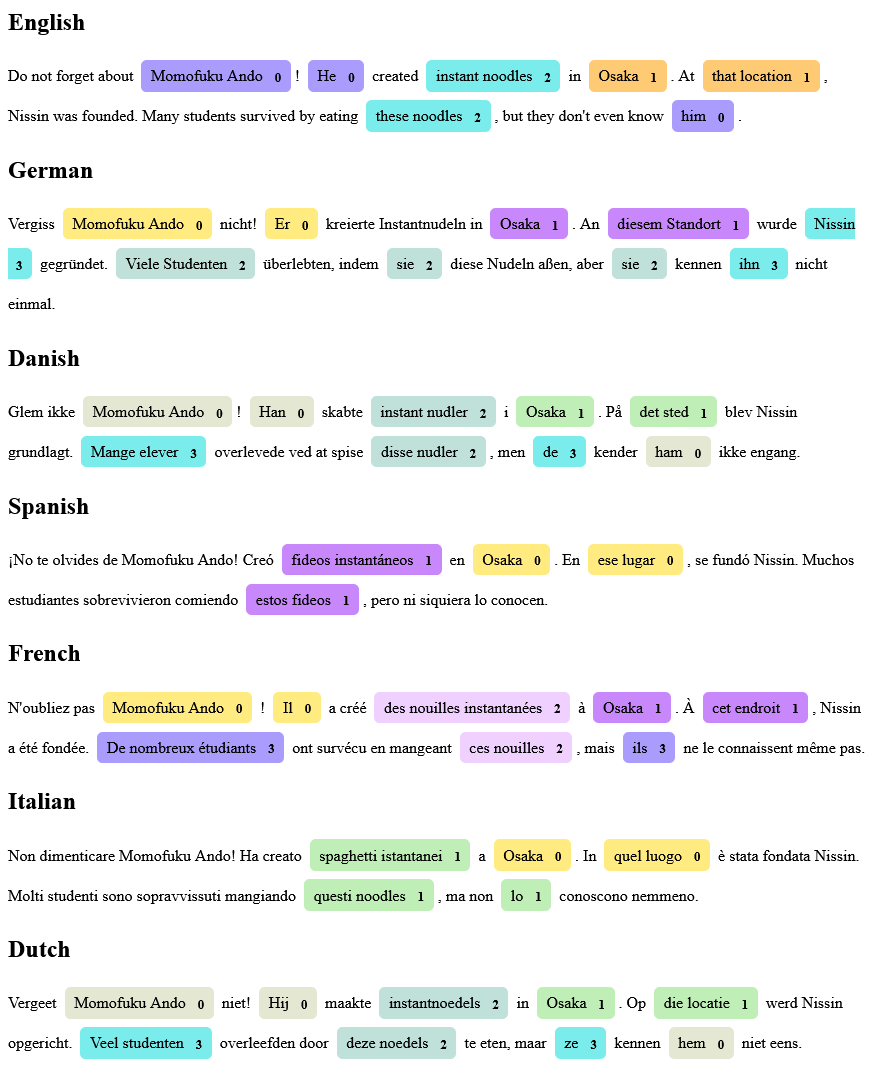

Coreference is amazing, but the data required for training a model is very scarce. In our case, the available training data for non-English languages also proved to be poorly annotated. Crosslingual Coreference therefore relies on the assumption that a model trained on English data with cross-lingual embeddings should also work for languages with similar sentence structures.

Install

pip install crosslingual-coreference

Quickstart

```python from crosslingual_coreference import Predictor

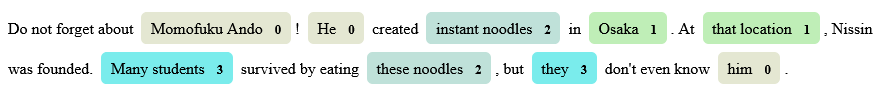

text = ( "Do not forget about Momofuku Ando! He created instant noodles in Osaka. At" " that location, Nissin was founded. Many students survived by eating these" " noodles, but they don't even know him." )

choose minilm for speed/memory and info_xlm for accuracy

predictor = Predictor( language="encorewebsm", device=-1, modelname="minilm" )

print(predictor.predict(text)["resolved_text"]) print(predictor.pipe([text])[0]["resolved_text"])

Note you can also get 'cluster_heads' and 'clusters'

Output

Do not forget about Momofuku Ando!

Momofuku Ando created instant noodles in Osaka.

At Osaka, Nissin was founded.

Many students survived by eating instant noodles,

but Many students don't even know Momofuku Ando.

```

Models

As of now, four models are available: "spanbert", "info_xlm", "xlm_roberta" and "minilm", which scored 83, 77, 74 and 74 on OntoNotes Release 5.0 English data, respectively.

- The "minilm" model offers the best quality/speed trade-off for both multi-lingual and English texts.
- The "info_xlm" model produces the best quality for multi-lingual texts.
- The AllenNLP "spanbert" model produces the best quality for English texts.
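The recommendations above can be written down as a small lookup. This is only an illustrative sketch: `ONTONOTES_SCORES` copies the scores quoted in this section, and `choose_model` with its two flags is a hypothetical helper, not part of the package.

```python
# Scores quoted in this README (OntoNotes Release 5.0, English data).
ONTONOTES_SCORES = {
    "spanbert": 83,
    "info_xlm": 77,
    "xlm_roberta": 74,
    "minilm": 74,
}


def choose_model(multilingual: bool, prefer_speed: bool) -> str:
    """Hypothetical helper mirroring the recommendations listed above."""
    if prefer_speed:
        # "minilm" is described as the best quality/speed trade-off.
        return "minilm"
    # Otherwise pick for quality: "info_xlm" for multi-lingual text,
    # "spanbert" for English-only text.
    return "info_xlm" if multilingual else "spanbert"


model_name = choose_model(multilingual=True, prefer_speed=False)
print(model_name, ONTONOTES_SCORES[model_name])
```

The returned string could then be passed as the `model_name` argument of `Predictor`.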

Chunking/batching to resolve memory OOM errors

```python
from crosslingual_coreference import Predictor

predictor = Predictor(
    language="en_core_web_sm",
    device=0,
    model_name="minilm",
    chunk_size=2500,
    chunk_overlap=2,
)
```
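To make the general idea behind chunking with overlap concrete, the sketch below splits a list of sentences into overlapping chunks. It illustrates the technique under stated assumptions and is not the package's actual implementation: here `chunk_size` counts sentences per chunk and `chunk_overlap` counts sentences shared between neighbouring chunks (the library's own units may differ), and `chunk_sentences` is a hypothetical helper.

```python
from typing import List


def chunk_sentences(
    sentences: List[str], chunk_size: int, chunk_overlap: int
) -> List[List[str]]:
    """Split sentences into chunks that share `chunk_overlap` sentences."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(sentences), step):
        chunks.append(sentences[start : start + chunk_size])
        if start + chunk_size >= len(sentences):
            break  # the last chunk already reaches the end
    return chunks


sentences = ["s1", "s2", "s3", "s4", "s5", "s6", "s7"]
for chunk in chunk_sentences(sentences, chunk_size=4, chunk_overlap=2):
    print(chunk)
```

Processing smaller chunks bounds the memory needed per forward pass, while the shared sentences give a pronoun near a chunk boundary a chance to still see its antecedent.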

Use spaCy pipeline

```python
import spacy

text = (
    "Do not forget about Momofuku Ando! He created instant noodles in Osaka. At"
    " that location, Nissin was founded. Many students survived by eating these"
    " noodles, but they don't even know him."
)

nlp = spacy.load("en_core_web_sm")
nlp.add_pipe(
    "xx_coref", config={"chunk_size": 2500, "chunk_overlap": 2, "device": 0}
)

doc = nlp(text)
print(doc._.coref_clusters)
# Output
#
# [[[4, 5], [7, 7], [27, 27], [36, 36]],
# [[12, 12], [15, 16]],
# [[9, 10], [27, 28]],
# [[22, 23], [31, 31]]]
print(doc._.resolved_text)
# Output
#
# Do not forget about Momofuku Ando!
# Momofuku Ando created instant noodles in Osaka.
# At Osaka, Nissin was founded.
# Many students survived by eating instant noodles,
# but Many students don't even know Momofuku Ando.
print(doc._.cluster_heads)
# Output
#
# {Momofuku Ando: [5, 6],
# instant noodles: [11, 12],
# Osaka: [14, 14],
# Nissin: [21, 21],
# Many students: [26, 27]}
```
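The cluster output above can be read as inclusive `[start, end]` token positions, which is also how the visualization example treats them. The sketch below maps those positions back to text without loading a model. The token list is written by hand to approximate spaCy's tokenization of the example text, which is an assumption, and `mentions_from_clusters` is a hypothetical helper rather than part of the package.

```python
from typing import List

# Hand-written tokens approximating spaCy's tokenization (an assumption).
tokens = [
    "Do", "not", "forget", "about", "Momofuku", "Ando", "!",
    "He", "created", "instant", "noodles", "in", "Osaka", ".",
    "At", "that", "location", ",", "Nissin", "was", "founded", ".",
    "Many", "students", "survived", "by", "eating", "these", "noodles", ",",
    "but", "they", "do", "n't", "even", "know", "him", ".",
]

# Cluster output copied from the example above.
clusters = [
    [[4, 5], [7, 7], [27, 27], [36, 36]],
    [[12, 12], [15, 16]],
    [[9, 10], [27, 28]],
    [[22, 23], [31, 31]],
]


def mentions_from_clusters(
    tokens: List[str], clusters: List[List[List[int]]]
) -> List[List[str]]:
    """Join the tokens of every inclusive [start, end] span into a string."""
    return [
        [" ".join(tokens[start : end + 1]) for start, end in cluster]
        for cluster in clusters
    ]


for mentions in mentions_from_clusters(tokens, clusters):
    print(mentions)
```

Each inner list then holds the surface forms that the model grouped as referring to the same entity.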

Visualize spaCy pipeline

This only works with spaCy >= 3.3.

```python
import spacy
from spacy import displacy
from spacy.tokens import Span

text = (
    "Do not forget about Momofuku Ando! He created instant noodles in Osaka. At"
    " that location, Nissin was founded. Many students survived by eating these"
    " noodles, but they don't even know him."
)

nlp = spacy.load("nl_core_news_sm")
nlp.add_pipe("xx_coref", config={"model_name": "minilm"})
doc = nlp(text)

spans = []
for idx, cluster in enumerate(doc._.coref_clusters):
    for span in cluster:
        # cluster spans are inclusive; spaCy Span end indices are exclusive
        spans.append(Span(doc, span[0], span[1] + 1, str(idx).upper()))

doc.spans["custom"] = spans

displacy.render(doc, style="span", options={"spans_key": "custom"})
```

More Examples

Owner

- Name: David Berenstein

- Login: davidberenstein1957

- Kind: user

- Location: Madrid

- Company: @argilla-io

- Website: https://www.linkedin.com/in/david-berenstein-1bab11105/

- Repositories: 2

- Profile: https://github.com/davidberenstein1957

👨🏽🍳 Cooking, 👨🏽💻 Coding, 🏆 Committing Developer Advocate @argilla-io

Citation (CITATION.cff)

cff-version: 1.0.0
message: "If you use this software, please cite it as below."
authors:
  - family-names: David
    given-names: Berenstein
title: "Crosslingual Coreference - a multi-lingual approach to AllenNLP CoReference Resolution along with a wrapper for spaCy."
version: 0.2.9
date-released: 2022-09-24

GitHub Events

Total

- Watch event: 5

- Pull request review event: 1

- Fork event: 2

Last Year

- Watch event: 5

- Pull request review event: 1

- Fork event: 2

Committers

Last synced: 9 months ago

Top Committers

| Name | Email | Commits |
|---|---|---|
| David Berenstein | d****n@p****m | 24 |
| David Berenstein | d****n@g****m | 14 |
| Mathijs Boezer | m****r@p****m | 7 |
| Daniel Vila Suero | d****l@r****i | 2 |
| Martin Kirilov | m****v@g****m | 1 |

Committer Domains (Top 20 + Academic)

Issues and Pull Requests

Last synced: 6 months ago

All Time

- Total issues: 6

- Total pull requests: 1

- Average time to close issues: N/A

- Average time to close pull requests: N/A

- Total issue authors: 6

- Total pull request authors: 1

- Average comments per issue: 1.17

- Average comments per pull request: 0.0

- Merged pull requests: 0

- Bot issues: 0

- Bot pull requests: 0

Past Year

- Issues: 1

- Pull requests: 0

- Average time to close issues: N/A

- Average time to close pull requests: N/A

- Issue authors: 1

- Pull request authors: 0

- Average comments per issue: 0.0

- Average comments per pull request: 0

- Merged pull requests: 0

- Bot issues: 0

- Bot pull requests: 0

Top Authors

Issue Authors

- dimitristaufer (1)

- joa-spec (1)

- osehmathias (1)

- rainergo (1)

- arslanahmad90 (1)

- vaibhava-vylabs (1)

Pull Request Authors

- matesaki (2)

Top Labels

Issue Labels

Pull Request Labels

Dependencies

- 216 dependencies

- black ~22.3 develop

- flake8 ~4.0 develop

- flake8-bugbear ~22.3 develop

- flake8-docstrings ~1.6 develop

- isort ^5.10 develop

- pep8-naming ^~0.12 develop

- pre-commit ~2.17 develop

- pytest ~7.0 develop

- Pillow >9.1

- allennlp ~2.8

- allennlp-models ~2.8

- checklist ^0.0.11

- python ^3.7.1

- spacy ~3.1

- actions/checkout v3 composite

- actions/setup-python v3 composite

- actions/checkout v3 composite

- actions/setup-python v3 composite

- pypa/gh-action-pypi-publish 27b31702a0e7fc50959f5ad993c78deac1bdfc29 composite