wsdmcup2023

Toloka Visual Question Answering Challenge at WSDM Cup 2023

Science Score: 67.0%

This score indicates how likely this project is to be science-related based on various indicators:

-

✓CITATION.cff file

Found CITATION.cff file -

✓codemeta.json file

Found codemeta.json file -

✓.zenodo.json file

Found .zenodo.json file -

✓DOI references

Found 4 DOI reference(s) in README -

✓Academic publication links

Links to: arxiv.org -

○Academic email domains

-

○Institutional organization owner

-

○JOSS paper metadata

-

○Scientific vocabulary similarity

Low similarity (8.3%) to scientific vocabulary

Keywords

Repository

Toloka Visual Question Answering Challenge at WSDM Cup 2023

Basic Info

- Host: GitHub

- Owner: Toloka

- License: apache-2.0

- Language: Jupyter Notebook

- Default Branch: main

- Homepage: https://toloka.ai/challenges/wsdm2023/

- Size: 5.24 MB

Statistics

- Stars: 32

- Watchers: 3

- Forks: 7

- Open Issues: 1

- Releases: 1

Topics

Metadata Files

README.md

Toloka Visual Question Answering Challenge at WSDM Cup 2023

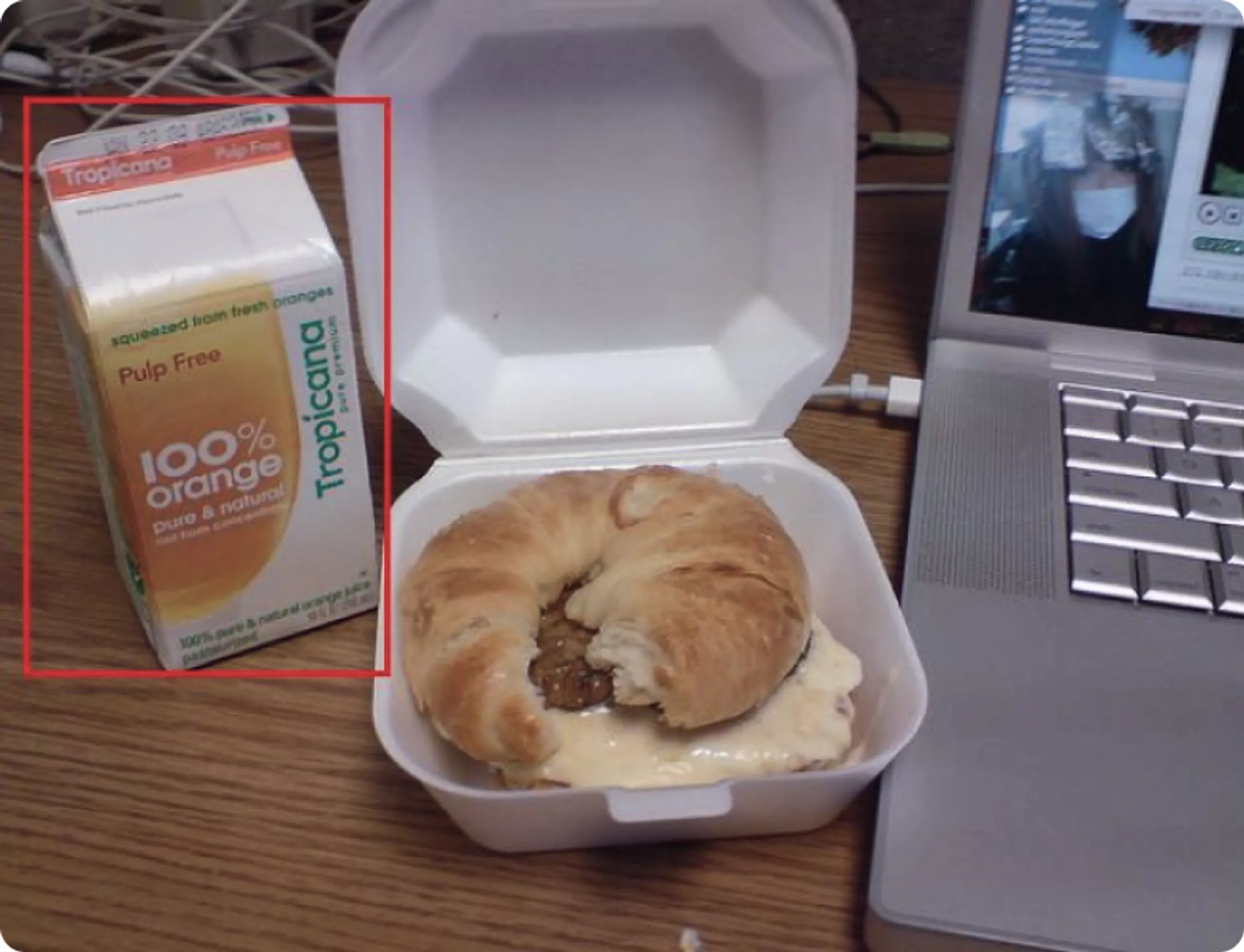

We challenge you with a visual question answering task! Given an image and a textual question, draw the bounding box around the object correctly responding to that question.

| Question | Image and Answer |

| --- | --- |

| What do you use to hit the ball? |  |

| What do people use for cutting? |

|

| What do people use for cutting? |  |

| What do we use to support the immune system and get vitamin C? |

|

| What do we use to support the immune system and get vitamin C? |  |

|

Links

- Competition: https://toloka.ai/challenges/wsdm2023

- CodaLab: https://codalab.lisn.upsaclay.fr/competitions/7434

- Dataset: https://doi.org/10.5281/zenodo.7057740

Citation

Please cite the challenge results or dataset description as follows.

- Ustalov D., Pavlichenko N., Koshelev S., Likhobaba D., and Smirnova A. Toloka Visual Question Answering Benchmark. 2023. arXiv: 2309.16511 [cs.CV].

bibtex

@inproceedings{TolokaWSDMCup2023,

author = {Ustalov, Dmitry and Pavlichenko, Nikita and Koshelev, Sergey and Likhobaba, Daniil and Smirnova, Alisa},

title = {{Toloka Visual Question Answering Benchmark}},

year = {2023},

eprint = {2309.16511},

eprinttype = {arxiv},

eprintclass = {cs.CV},

language = {english},

}

Dataset

Our dataset consists of the images associated with textual questions. One entry (instance) in our dataset is a question-image pair labeled with the ground truth coordinates of a bounding box containing the visual answer to the given question. The images were obtained from a CC BY-licensed subset of the Microsoft Common Objects in Context dataset, MS COCO. All data labeling was performed on the Toloka crowdsourcing platform, https://toloka.ai/. We release the entire dataset under the CC BY license:

- Zenodo: https://doi.org/10.5281/zenodo.7057740

- Hugging Face Hub: https://huggingface.co/datasets/toloka/WSDMCup2023

- Kaggle: https://www.kaggle.com/datasets/dustalov/toloka-wsdm-cup-2023-vqa

- GitHub Packages: https://github.com/Toloka/WSDMCup2023/pkgs/container/wsdmcup2023

Licensed under the Creative Commons Attribution 4.0 License. See LICENSE-CC-BY.txt file for more details.

Zero-Shot Baselines

We provide zero-shot baselines in zeroshot_baselines folder. All notebooks are made to run in Colab

YOLOR + CLIP

This baseline was provided to participants of WSDM Cup 2023 Challenge. First, it uses a detection model, YOLOR, to generate candidate rectangles. Then, it applies CLIP to measure the similarity between the question and a part of the image bounded by each candidate rectangle. To make a prediction, it uses the candidate with the highest similarity. This baseline method achieves IoU = 0.21 on private test subset.

Licensed under the Apache License, Version 2.0. See LICENSE-APACHE.txt file for more details.

OVSeg + SAM

Another zero-shot baseline, called OVSeg, utilizes SAM as a proposal generator instead of MaskFormer in the original setup. This approach achieves IoU = 0.35 on the private test subset.

Licensed under the Creative Commons Attribution 4.0 License.

OFA + SAM

Last one is primarily based on OFA, combined with bounding box correction using SAM. To solve the task, we followed a two-step zero-shot setup.

First, we address the Visual Question Answering, where the model is given a prompt {question} Name an object in the picture along with an image. The model provides the name of a clue object to the question.

In the second step, an object corresponding to the answer from the previous step is annotated using the prompt which region does the text "{answer}" describe?, resulting in IoU = 0.42.

Subsequently, with the obtained bounding boxes, SAM generates the corresponding masks for the annotated object, which are then transformed into bounding boxes. This enabled us to achieve IoU = 0.45 with this baseline.

Licensed under the Apache License, Version 2.0.

Crowdsourcing Baseline

We evaluated how well non-expert human annotators can solve our task by running a dedicated round of crowdsourcing annotations on the Toloka crowdsourcing platform. We found them to tackle this task successfully without knowing the ground truth. On all three subsets of our data, the average IoU value was 0.87 ± 0.01, which we consider as a strong human baseline for our task. Krippendorff's α coefficients for the public test was 0.68 and for the private test was 0.66, showing the decent agreement between the responses; we used 1 − IoU as the distance metric when calculating the α coefficient. We selected the bounding boxes which were the most similar to the ground truth data to indicate the upper bound of non-expert annotation quality; *_crowd_baseline.csv files contain these responses.

Licensed under the Creative Commons Attribution 4.0 License. See LICENSE-CC-BY.txt file for more details.

Reproduction

The final score will be evaluated on the private test dataset during Reproduction phase. We kindly ask you to create a docker image and share it with us by December 19th 23:59 AoE in this form. We put an instruction how to create a docker image in reproduction directory.

We will run your solution on a machine with one Nvidia A100 80 GB GPU, 16 CPU cores, and 200 GB of RAM. Your Docker image must perform the inference in at most 3 hours on this machine. In other words, the docker run command must finish in 3 hours.

Don't hesitate to contact us at research@toloka.ai if you have any questions or suggestions.

Owner

- Name: Toloka

- Login: Toloka

- Kind: organization

- Email: github@toloka.ai

- Website: https://toloka.ai

- Repositories: 11

- Profile: https://github.com/Toloka

Data labeling platform for ML

Citation (CITATION.cff)

cff-version: 1.2.0

title: Toloka Visual Question Answering Dataset

message: >-

If you use this dataset, please cite the paper from

preferred-citation.

type: dataset

authors:

- family-names: Ustalov

given-names: Dmitry

orcid: "https://orcid.org/0000-0002-9979-2188"

- family-names: Pavlichenko

given-names: Nikita

orcid: "https://orcid.org/0000-0002-7330-393X"

- family-names: Likhobaba

given-names: Daniil

orcid: "https://orcid.org/0000-0002-0322-3774"

- family-names: Smirnova

given-names: Alisa

orcid: "https://orcid.org/0000-0002-7108-9917"

identifiers:

- type: doi

value: 10.5281/zenodo.7057740

description: Zenodo

- type: url

value: "https://huggingface.co/datasets/toloka/WSDMCup2023"

description: Hugging Face

- type: url

value: "https://github.com/Toloka/WSDMCup2023"

description: GitHub

- type: url

value: "https://ceur-ws.org/Vol-3357/invited1.pdf"

description: Paper

repository-code: "https://github.com/Toloka/WSDMCup2023"

url: "https://toloka.ai/challenges/wsdm2023/"

repository-artifact: >-

https://github.com/Toloka/WSDMCup2023/pkgs/container/wsdmcup2023

license: CC-BY-4.0

preferred-citation:

type: conference-paper

authors:

- family-names: Ustalov

given-names: Dmitry

- family-names: Pavlichenko

given-names: Nikita

- family-names: Likhobaba

given-names: Daniil

- family-names: Smirnova

given-names: Alisa

title: WSDM Cup 2023 Challenge on Visual Question Answering

year: 2023

collection-title: Proceedings of the 4th Crowd Science Workshop on Collaboration of Humans and Learning Algorithms for Data Labeling

conference:

name: "4th Crowd Science Workshop: Collaboration of Humans and Learning Algorithms for Data Labeling"

country: SG

city: Singapore

date-start: "2023-03-03"

date-end: "2023-03-03"

start: 1

end: 7

url: "http://ceur-ws.org/Vol-3357/invited1.pdf"

issn: 1613-0073

GitHub Events

Total

- Watch event: 2

Last Year

- Watch event: 2