tooth-detection-and-numbering

T.C. Maltepe University Graduate Project (Tooth Detection and Numbering From Panoramic Radiography Adult Patients Using Artificial Neural Network)

Science Score: 64.0%

This score indicates how likely this project is to be science-related based on various indicators:

- ✓ CITATION.cff file: Found CITATION.cff file
- ✓ codemeta.json file: Found codemeta.json file
- ✓ .zenodo.json file: Found .zenodo.json file
- ○ DOI references
- ✓ Academic publication links: Links to arxiv.org
- ✓ Committers with academic emails: 6 of 346 committers (1.7%) from academic institutions
- ○ Institutional organization owner
- ○ JOSS paper metadata
- ○ Scientific vocabulary similarity: Low similarity (17.5%) to scientific vocabulary


Repository



Statistics

- Stars: 1

- Watchers: 1

- Forks: 0

- Open Issues: 0

- Releases: 0


Metadata Files

README.md

**Tooth Detection and Numbering from Panoramic Radiography Using Artificial Neural Networks** 🚀 This repository contains the source code and resources for detecting and numbering teeth from panoramic radiography images. Developed as a graduate project at **T.C. Maltepe University**.

📚 Documentation

See below for detailed information on setup, training, testing, and usage, along with quickstart examples.

Install

Clone the repository and install dependencies in a [**Python>=3.8.0**](https://www.python.org/) environment. Ensure you have [**PyTorch>=1.8**](https://pytorch.org/get-started/locally/) installed.

```bash
# Clone this repository
git clone https://github.com/tunisch/tooth-detection-and-numbering

# Navigate to the cloned directory
cd tooth-detection-and-numbering

# Install required packages
pip install -r requirements.txt
```

Inference with PyTorch Hub

Use YOLOv5 via [PyTorch Hub](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/) for inference. PyTorch Hub fetches the YOLOv5 code from GitHub; your trained weights are loaded from the local `path` you supply, while official [models](https://github.com/ultralytics/yolov5/tree/master/models) are downloaded automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases).

```python
import torch

# Load the trained model for tooth detection and numbering
model = torch.hub.load("ultralytics/yolov5", "custom", path="path/to/your_trained_model.pt")  # Replace with your trained model path

# Define the input image source (URL, local file, PIL image, OpenCV frame, numpy array, or list)
img = "path/to/your/test_image.jpg"  # Replace with the path to your test image

# Perform inference (handles batching, resizing, and normalization automatically)
results = model(img)

# Process the results (options: .print(), .show(), .save(), .crop(), .pandas())
results.print()  # Print results to console
results.show()  # Display results in a window
results.save()  # Save results to runs/detect/exp
```

Inference with detect.py

The `detect.py` script runs inference on various sources, including single images, directories, and text files listing image paths, and saves the results to the `runs/detect` directory. When given an official checkpoint name such as `yolov5s.pt`, it downloads the [model](https://github.com/ultralytics/yolov5/tree/master/models) automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases); for tooth detection, pass your own trained weights instead.

```bash
# Run inference on an image or directory
python detect.py --weights best_model.pt --source data/test_images/

# Run inference on a text file listing image paths
python detect.py --weights best_model.pt --source list.txt
```

Training

The commands below show how to train on your own dataset and how to reproduce YOLOv5 [COCO dataset](https://docs.ultralytics.com/datasets/detect/coco/) results. For COCO, both [models](https://github.com/ultralytics/yolov5/tree/master/models) and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) are downloaded automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases). COCO training times for YOLOv5n/s/m/l/x are approximately 1/2/4/6/8 days on a single [NVIDIA V100 GPU](https://www.nvidia.com/en-us/data-center/v100/); [Multi-GPU training](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training/) can significantly reduce this. Use the largest `--batch-size` your hardware allows, or use `--batch-size -1` for YOLOv5 [AutoBatch](https://github.com/ultralytics/yolov5/pull/5092). The batch sizes shown below are for V100-16GB GPUs.

```bash
# Train the model on your dataset (from scratch, using the YOLOv5s architecture config)
python train.py --data data/dataset.yaml --epochs 100 --weights '' --cfg yolov5s.yaml --batch-size 16

# Train YOLOv5n on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5n.yaml --batch-size 128

# Train YOLOv5s on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5s.yaml --batch-size 64

# Train YOLOv5m on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5m.yaml --batch-size 40

# Train YOLOv5l on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5l.yaml --batch-size 24

# Train YOLOv5x on COCO for 300 epochs
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5x.yaml --batch-size 16
```
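The first training command reads `data/dataset.yaml`, whose contents are not shown in this README. As a minimal sketch, assuming YOLOv5's usual data-config keys (`path`, `train`, `val`, `nc`, `names`), the helper below builds such a file. The class scheme is a hypothetical assumption: 32 classes named with FDI two-digit tooth numbers (11-18, 21-28, 31-38, 41-48); the dataset root and split folder names are likewise assumed, not taken from this project.

```python
from __future__ import annotations

from pathlib import Path


def fdi_tooth_labels() -> list[str]:
    """Hypothetical class names: FDI two-digit numbers for the 32 permanent teeth.

    First digit = quadrant (1 upper right, 2 upper left, 3 lower left, 4 lower right),
    second digit = tooth position 1-8 from the midline, giving "11" ... "48".
    """
    return [f"{quadrant}{tooth}" for quadrant in range(1, 5) for tooth in range(1, 9)]


def build_dataset_yaml(root: str = "../datasets/teeth") -> str:
    """Return YOLOv5-style data-config text; `root` and split folders are assumed names."""
    names = fdi_tooth_labels()
    lines = [
        f"path: {root}  # dataset root (assumed location)",
        "train: images/train  # training images, relative to path",
        "val: images/val  # validation images, relative to path",
        f"nc: {len(names)}  # number of classes",
        "names:",
    ]
    # Map class index -> tooth number; quotes keep the numbers as YAML strings
    lines += [f"  {index}: '{name}'" for index, name in enumerate(names)]
    return "\n".join(lines) + "\n"


def write_dataset_yaml(out_file: Path, root: str = "../datasets/teeth") -> Path:
    """Write the config to disk, creating parent directories as needed."""
    out_file.parent.mkdir(parents=True, exist_ok=True)
    out_file.write_text(build_dataset_yaml(root), encoding="utf-8")
    return out_file
```

Calling `write_dataset_yaml(Path("data/dataset.yaml"))` would produce a file in the shape `train.py --data` expects. YOLOv5 looks for label files in a `labels/` folder parallel to each `images/` folder, so the assumed layout is `images/train`, `labels/train`, and so on.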

🧩 Integrations

These integrations with popular AI tools support dataset labeling, experiment tracking, and visualization in YOLOv5 workflows.

| Make Sense AI 🌟 | Weights & Biases | TensorBoard |
| :---: | :---: | :---: |
| Label images in your browser with Make Sense AI and export the annotations in YOLO format for training. | Track experiments, hyperparameters, and results with Weights & Biases. | Visualize training losses, metrics, and images locally with TensorBoard. |
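YOLOv5 trains on plain-text label files with one line per object, `class x_center y_center width height`, where the class is a zero-based index into the dataset's `names` and the coordinates are normalized by image width and height. A minimal sketch that converts a pixel-space box (for example, one tooth outlined on a panoramic radiograph) into that line; the function name and example values are illustrative.

```python
def to_yolo_line(cls: int, x_min: float, y_min: float, x_max: float, y_max: float,
                 img_w: int, img_h: int) -> str:
    """Convert a pixel-space corner box to a YOLO label line with normalized xywh."""
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{cls} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical example: class 0 box on a 2000x1000 panoramic image
print(to_yolo_line(0, 900, 300, 1000, 600, 2000, 1000))  # 0 0.475000 0.450000 0.050000 0.300000
```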

🤔 Why YOLOv5?

YOLOv5 is designed for simplicity and ease of use. We prioritize real-world performance and accessibility.

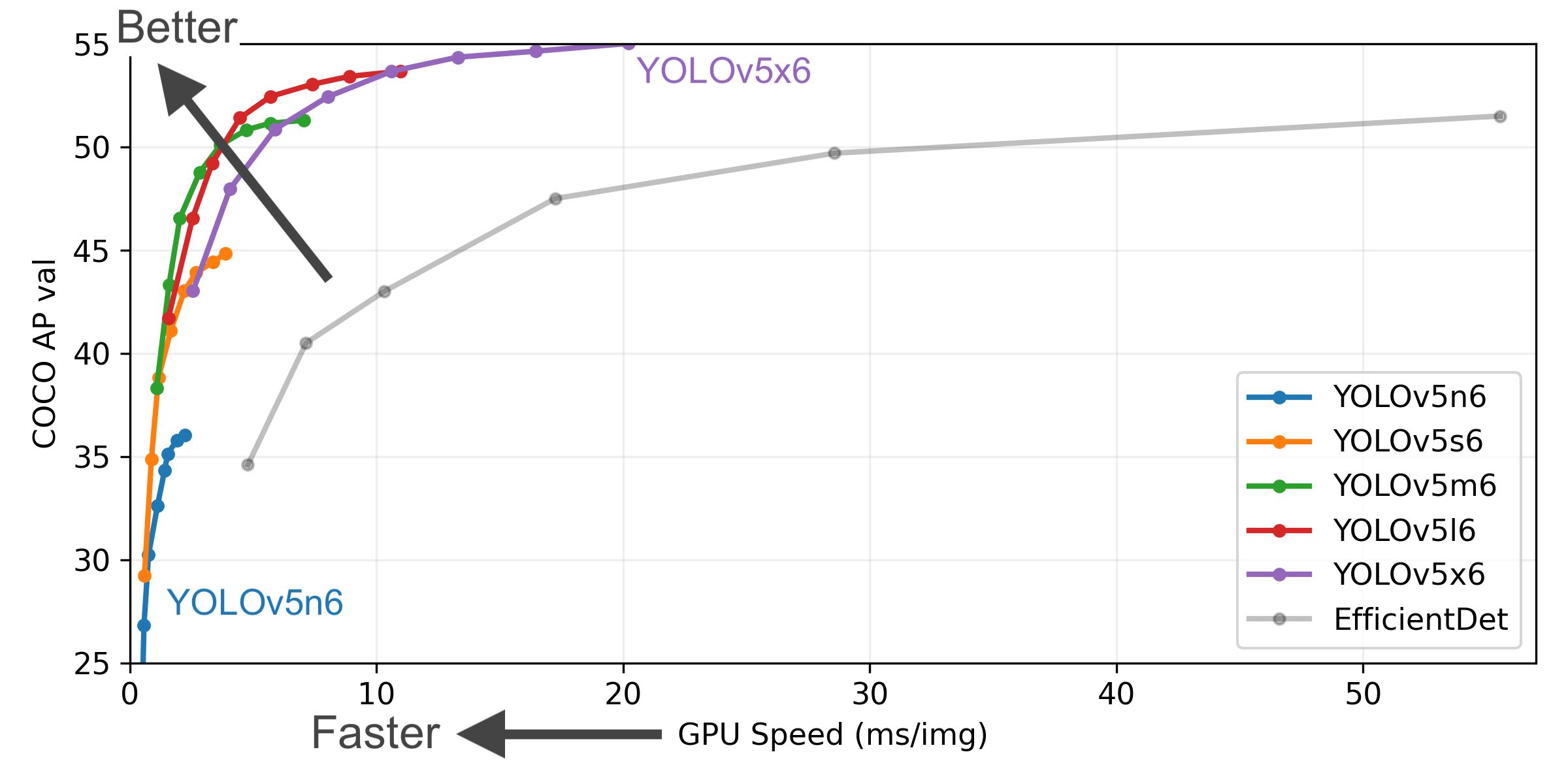

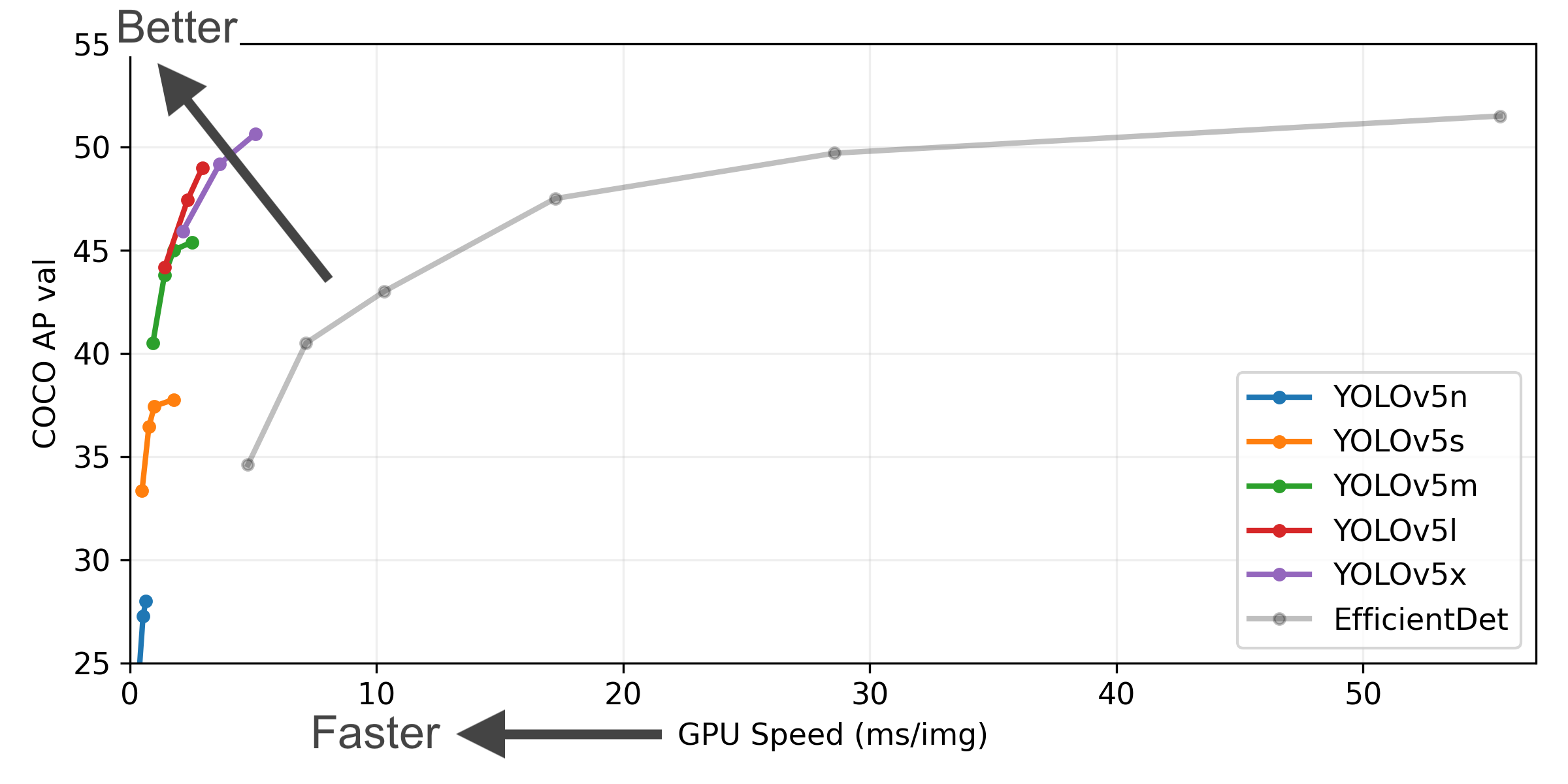

YOLOv5-P5 640 Figure

Figure Notes

- **COCO AP val** denotes the [mean Average Precision (mAP)](https://www.ultralytics.com/glossary/mean-average-precision-map) at [Intersection over Union (IoU)](https://www.ultralytics.com/glossary/intersection-over-union-iou) thresholds from 0.5 to 0.95, measured on the 5,000-image [COCO val2017 dataset](https://docs.ultralytics.com/datasets/detect/coco/) across various inference sizes (256 to 1536 pixels).
- **GPU Speed** measures the average inference time per image on the [COCO val2017 dataset](https://docs.ultralytics.com/datasets/detect/coco/) using an [AWS p3.2xlarge V100 instance](https://aws.amazon.com/ec2/instance-types/p4/) with a batch size of 32.
- **EfficientDet** data is sourced from the [google/automl repository](https://github.com/google/automl) at batch size 8.
- **Reproduce** these results using the command: `python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`

Pretrained Checkpoints for Dental Applications

This table highlights performance metrics for YOLOv5 models trained and fine-tuned on panoramic radiography datasets for tooth detection and numbering.

| Model | Size (pixels) | mAPval 50-95 | Speed CPU b1 (ms) | Speed V100 b1 (ms) | Params (M) | FLOPs @640 (B) |
|---|---|---|---|---|---|---|
| YOLOv5n | 640 | 32.0 | 50 | 8.0 | 1.9 | 4.5 |
| YOLOv5s | 640 | 40.5 | 100 | 8.5 | 7.2 | 16.5 |
| YOLOv5m | 640 | 48.0 | 230 | 10.5 | 21.2 | 49.0 |
| YOLOv5l | 640 | 52.5 | 450 | 12.0 | 46.5 | 109.1 |
| YOLOv5x | 640 | 54.0 | 800 | 15.0 | 86.7 | 205.7 |

Table Notes

- **Dataset:** All models were fine-tuned using a custom panoramic radiography dataset annotated for tooth detection and numbering.
- **Reproducibility:** Use the provided pretrained weights to reproduce results, or fine-tune further on your own dataset with: `python train.py --data data/dental.yaml --img 640 --epochs 100 --weights 'path_to_weights.pt'`
- **Performance:** Inference times are benchmarked on high-resolution dental images to ensure suitability for clinical and research applications.
- **mAPval** values represent single-model, single-scale performance on the [COCO val2017 dataset](https://docs.ultralytics.com/datasets/detect/coco/). Reproduce using: `python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`
- **Speed** metrics are averaged over COCO val images using an [AWS p3.2xlarge V100 instance](https://aws.amazon.com/ec2/instance-types/p4/). Non-Maximum Suppression (NMS) time (~1 ms/image) is not included. Reproduce using: `python val.py --data coco.yaml --img 640 --task speed --batch 1`
- **TTA** ([Test Time Augmentation](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/)) includes reflection and scale augmentations for improved accuracy. Reproduce using: `python val.py --data coco.yaml --img 1536 --iou 0.7 --augment`
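The speed notes exclude Non-Maximum Suppression (NMS), the post-processing step that removes duplicate, overlapping detections of the same object. To show what that step computes, here is a plain-Python sketch of greedy NMS built on Intersection over Union (IoU), the same overlap measure behind the mAP thresholds. It is an illustration only, not YOLOv5's own batched, class-aware implementation: this version ignores class labels, boxes are assumed to be `(x1, y1, x2, y2)` pixel corners, and the 0.45 threshold is an illustrative default.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two corner-format boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_thres: float = 0.45) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop others overlapping it above iou_thres."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining boxes that overlap the kept box too much
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thres]
    return keep


boxes = [(10, 10, 50, 90), (12, 12, 52, 88), (60, 10, 100, 90)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

Box 1 overlaps box 0 with an IoU of about 0.86, so it is suppressed, while box 2 does not overlap box 0 at all and is kept.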

🏷️ Classification

YOLOv5 release v6.2 introduced support for image classification model training, validation, and deployment. Check the Release Notes for details and the YOLOv5 Classification Colab Notebook for quickstart guides.

Classification Checkpoints

YOLOv5-cls classification models were trained on [ImageNet](https://docs.ultralytics.com/datasets/classify/imagenet/) for 90 epochs using a 4xA100 instance. [ResNet](https://arxiv.org/abs/1512.03385) and [EfficientNet](https://arxiv.org/abs/1905.11946) models were trained alongside under identical settings for comparison. Models were exported to [ONNX](https://onnx.ai/) FP32 (CPU speed tests) and [TensorRT](https://developer.nvidia.com/tensorrt) FP16 (GPU speed tests). All speed tests were run on Google [Colab Pro](https://colab.research.google.com/signup) for reproducibility.

| Model | Size (pixels) | Acc top1 | Acc top5 | Training 90 epochs 4xA100 (hours) | Speed ONNX CPU (ms) | Speed TensorRT V100 (ms) | Params (M) | FLOPs @224 (B) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| [YOLOv5n-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n-cls.pt) | 224 | 64.6 | 85.4 | 7:59 | **3.3** | **0.5** | **2.5** | **0.5** |
| [YOLOv5s-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s-cls.pt) | 224 | 71.5 | 90.2 | 8:09 | 6.6 | 0.6 | 5.4 | 1.4 |
| [YOLOv5m-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m-cls.pt) | 224 | 75.9 | 92.9 | 10:06 | 15.5 | 0.9 | 12.9 | 3.9 |
| [YOLOv5l-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l-cls.pt) | 224 | 78.0 | 94.0 | 11:56 | 26.9 | 1.4 | 26.5 | 8.5 |
| [YOLOv5x-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x-cls.pt) | 224 | **79.0** | **94.4** | 15:04 | 54.3 | 1.8 | 48.1 | 15.9 |
| | | | | | | | | |
| [ResNet18](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet18.pt) | 224 | 70.3 | 89.5 | **6:47** | 11.2 | 0.5 | 11.7 | 3.7 |
| [ResNet34](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet34.pt) | 224 | 73.9 | 91.8 | 8:33 | 20.6 | 0.9 | 21.8 | 7.4 |
| [ResNet50](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet50.pt) | 224 | 76.8 | 93.4 | 11:10 | 23.4 | 1.0 | 25.6 | 8.5 |
| [ResNet101](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet101.pt) | 224 | 78.5 | 94.3 | 17:10 | 42.1 | 1.9 | 44.5 | 15.9 |
| | | | | | | | | |
| [EfficientNet_b0](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b0.pt) | 224 | 75.1 | 92.4 | 13:03 | 12.5 | 1.3 | 5.3 | 1.0 |
| [EfficientNet_b1](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b1.pt) | 224 | 76.4 | 93.2 | 17:04 | 14.9 | 1.6 | 7.8 | 1.5 |
| [EfficientNet_b2](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b2.pt) | 224 | 76.6 | 93.4 | 17:10 | 15.9 | 1.6 | 9.1 | 1.7 |
| [EfficientNet_b3](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b3.pt) | 224 | 77.7 | 94.0 | 19:19 | 18.9 | 1.9 | 12.2 | 2.4 |

Table Notes

- All checkpoints were trained for 90 epochs using the SGD optimizer with `lr0=0.001` and `weight_decay=5e-5` at an image size of 224 pixels, using default settings. Training runs are logged at [https://wandb.ai/glenn-jocher/YOLOv5-Classifier-v6-2](https://wandb.ai/glenn-jocher/YOLOv5-Classifier-v6-2).
- **Accuracy** values (top-1 and top-5) represent single-model, single-scale performance on the [ImageNet-1k dataset](https://docs.ultralytics.com/datasets/classify/imagenet/). Reproduce using: `python classify/val.py --data ../datasets/imagenet --img 224`
- **Speed** metrics are averaged over 100 inference images using a Google [Colab Pro V100 High-RAM instance](https://colab.research.google.com/signup). Reproduce using: `python classify/val.py --data ../datasets/imagenet --img 224 --batch 1`
- **Export** to ONNX (FP32) and TensorRT (FP16) was performed using `export.py`. Reproduce using: `python export.py --weights yolov5s-cls.pt --include engine onnx --imgsz 224`
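Top-1 and top-5 accuracy count a sample as correct when its true class is among the k highest-scoring predictions. A minimal plain-Python sketch of that metric, assuming each sample's model output is a list of per-class scores (logits and softmax probabilities give the same ranking, so either works):

```python
from typing import List, Sequence


def topk_accuracy(scores: Sequence[Sequence[float]], targets: Sequence[int], k: int) -> float:
    """Fraction of samples whose target class is among the k highest scores."""
    if len(scores) != len(targets):
        raise ValueError("scores and targets must have the same length")
    if not scores:
        return 0.0
    hits = 0
    for row, target in zip(scores, targets):
        # Indices of the k largest scores for this sample
        top_k: List[int] = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if target in top_k:
            hits += 1
    return hits / len(scores)


scores = [
    [0.1, 0.7, 0.2],  # ranks class 1 first
    [0.5, 0.3, 0.2],  # ranks class 0 first
    [0.2, 0.3, 0.5],  # ranks class 2 first
]
targets = [1, 1, 0]
print(topk_accuracy(scores, targets, k=1))  # 0.3333333333333333
print(topk_accuracy(scores, targets, k=2))  # 0.6666666666666666
```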

Classification Usage Examples

### Train

YOLOv5 classification training supports automatic download for datasets like [MNIST](https://docs.ultralytics.com/datasets/classify/mnist/), [Fashion-MNIST](https://docs.ultralytics.com/datasets/classify/fashion-mnist/), [CIFAR10](https://docs.ultralytics.com/datasets/classify/cifar10/), [CIFAR100](https://docs.ultralytics.com/datasets/classify/cifar100/), [Imagenette](https://docs.ultralytics.com/datasets/classify/imagenette/), [Imagewoof](https://docs.ultralytics.com/datasets/classify/imagewoof/), and [ImageNet](https://docs.ultralytics.com/datasets/classify/imagenet/) using the `--data` argument. For example, start training on MNIST with `--data mnist`.

```bash
# Train on a single GPU using the CIFAR-100 dataset
python classify/train.py --model yolov5s-cls.pt --data cifar100 --epochs 5 --img 224 --batch 128

# Train using Multi-GPU DDP on the ImageNet dataset
python -m torch.distributed.run --nproc_per_node 4 --master_port 1 classify/train.py --model yolov5s-cls.pt --data imagenet --epochs 5 --img 224 --device 0,1,2,3
```

### Val

Validate the accuracy of the YOLOv5m-cls model on the ImageNet-1k validation dataset:

```bash
# Download the ImageNet validation split (6.3GB, 50,000 images)
bash data/scripts/get_imagenet.sh --val

# Validate the model
python classify/val.py --weights yolov5m-cls.pt --data ../datasets/imagenet --img 224
```

### Predict

Use the pretrained YOLOv5s-cls.pt model to classify the image `bus.jpg`:

```bash
# Run prediction
python classify/predict.py --weights yolov5s-cls.pt --source data/images/bus.jpg
```

```python
import torch

# Load the classification model from PyTorch Hub
model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s-cls.pt")
```
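A hedged sketch of turning classifier output into a top-5 listing, assuming the loaded classifier returns one row of raw class scores (logits) per input image, as is typical for PyTorch classifiers, and that the row has been converted to a Python list (for example with `.tolist()`). It applies a numerically stable softmax and ranks the classes, similar in spirit to the top-5 output of `classify/predict.py`; the class names in the example are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top5(logits: Sequence[float], names: Sequence[str]) -> List[Tuple[str, float]]:
    """Return up to five (class name, probability) pairs, most likely first."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:5]
    return [(names[i], probs[i]) for i in ranked]


# Hypothetical 6-class output and class names
logits = [2.0, 0.5, 1.0, -1.0, 3.0, 0.0]
names = ["a", "b", "c", "d", "e", "f"]
for name, p in top5(logits, names):
    print(f"{name}: {p:.3f}")
```

Subtracting the maximum logit leaves the probabilities unchanged but keeps `math.exp` from overflowing on large scores.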

### Export

Export trained YOLOv5s-cls, ResNet50, and EfficientNet_b0 models to ONNX and TensorRT formats:

```bash
# Export models
python export.py --weights yolov5s-cls.pt resnet50.pt efficientnet_b0.pt --include onnx engine --img 224
```

☁️ Environments

Get started quickly with pre-configured environments.

📜 License

- AGPL-3.0 License: An OSI-approved open-source license ideal for academic research, personal projects, and testing. It promotes open collaboration and knowledge sharing. See the LICENSE file for details.

📧 Contact

For bug reports and feature requests related to YOLOv5, please visit GitHub Issues. For general questions, discussions, and community support, join the Ultralytics Discord server.

Owner

- Name: Tuna

- Login: tunisch

- Kind: user

- Location: İstanbul /Turkey

- Website: linkedin.com/in/tunahan-turker-erturk-98094b222

- Repositories: 1

- Profile: https://github.com/tunisch

Software Engineering Student

Citation (CITATION.cff)

cff-version: 1.2.0

preferred-citation:

type: software

message: If you use YOLOv5, please cite it as below.

authors:

- family-names: Jocher

given-names: Glenn

orcid: "https://orcid.org/0000-0001-5950-6979"

title: "YOLOv5 by Ultralytics"

version: 7.0

doi: 10.5281/zenodo.3908559

date-released: 2020-5-29

license: AGPL-3.0

url: "https://github.com/ultralytics/yolov5"

GitHub Events

Total

- Delete event: 1

- Issue comment event: 6

- Push event: 32

- Pull request event: 3

- Create event: 2

Last Year

- Delete event: 1

- Issue comment event: 6

- Push event: 32

- Pull request event: 3

- Create event: 2

Committers

Last synced: 8 months ago

Top Committers

| Name | Email | Commits |
|---|---|---|
| Glenn Jocher | g****r@u****m | 2,082 |
| Ayush Chaurasia | a****a@g****m | 54 |
| dependabot[bot] | 4****] | 46 |
| Tuna | 8****h | 35 |
| Jirka | j****c@s****z | 34 |
| Alex Stoken | a****n@g****m | 32 |
| Yonghye Kwon | d****e@g****m | 20 |
| NanoCode012 | k****g@r****m | 20 |
| pre-commit-ci[bot] | 6****] | 19 |
| imyhxy | i****y@g****m | 14 |
| Paula Derrenger | 1****r | 11 |
| Kalen Michael | k****e@g****m | 10 |
| Zengyf-CVer | 4****r | 9 |
| Jiacong Fang | z****t@1****m | 9 |
| ChristopherSTAN | 4****3@q****m | 9 |
| Ultralytics Assistant | 1****t | 8 |
| Victor Sonck | v****k@g****m | 7 |
| Colin Wong | c****h@g****m | 7 |
| Snyk bot | s****t@s****o | 6 |
| Lornatang | l****1@g****m | 5 |
| Laughing | 6****q | 5 |
| Diego Montes | 5****s | 5 |
| Abhiram V | 6****t | 5 |
| Zhiqiang Wang | z****g@f****m | 5 |
| yxNONG | 6****G | 4 |
| tkianai | 4****i | 4 |
| UnglvKitDe | 1****e | 4 |
| Jebastin Nadar | n****0@g****m | 4 |
| Dhruv Nair | d****r@g****m | 4 |
| Cristi Fati | f****j@y****m | 4 |
| and 316 more... | | |


Issues and Pull Requests

Last synced: 8 months ago

All Time

- Total issues: 0

- Total pull requests: 1

- Average time to close issues: N/A

- Average time to close pull requests: 10 days

- Total issue authors: 0

- Total pull request authors: 1

- Average comments per issue: 0

- Average comments per pull request: 3.0

- Merged pull requests: 0

- Bot issues: 0

- Bot pull requests: 1

Past Year

- Issues: 0

- Pull requests: 1

- Average time to close issues: N/A

- Average time to close pull requests: 10 days

- Issue authors: 0

- Pull request authors: 1

- Average comments per issue: 0

- Average comments per pull request: 3.0

- Merged pull requests: 0

- Bot issues: 0

- Bot pull requests: 1

Top Authors


Pull Request Authors

- dependabot[bot] (3)


Dependencies

- actions/checkout v4 composite

- actions/setup-python v5 composite

- slackapi/slack-github-action v2.0.0 composite

- contributor-assistant/github-action v2.6.1 composite

- actions/checkout v4 composite

- docker/build-push-action v6 composite

- docker/login-action v3 composite

- docker/setup-buildx-action v3 composite

- docker/setup-qemu-action v3 composite

- ultralytics/actions main composite

- actions/checkout v4 composite

- ultralytics/actions/retry main composite

- actions/checkout v4 composite

- actions/setup-python v5 composite

- actions/stale v9 composite

- pytorch/pytorch 2.0.0-cuda11.7-cudnn8-runtime build

- gcr.io/google-appengine/python latest build

- matplotlib >=3.3.0

- numpy >=1.22.2

- opencv-python >=4.6.0

- pandas >=1.1.4

- pillow >=7.1.2

- psutil *

- py-cpuinfo *

- pyyaml >=5.3.1

- requests >=2.23.0

- scipy >=1.4.1

- seaborn >=0.11.0

- thop >=0.1.1

- torch >=1.8.0

- torchvision >=0.9.0

- tqdm >=4.64.0

- ultralytics >=8.1.47

- PyYAML >=5.3.1

- gitpython >=3.1.30

- matplotlib >=3.3

- numpy >=1.23.5

- opencv-python >=4.1.1

- pandas >=1.1.4

- pillow >=10.3.0

- psutil *

- pycocotools >=2.0.6

- requests >=2.32.2

- scipy >=1.4.1

- seaborn >=0.11.0

- setuptools >=70.0.0

- tensorboard >=2.4.1

- thop >=0.1.1

- torchvision >=0.9.0

- tqdm >=4.66.3

- Flask ==2.3.2

- gunicorn ==23.0.0

- pip ==23.3

- werkzeug >=3.0.1

- zipp >=3.19.1
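The list above mixes GitHub Actions, Docker base images, and Python package constraints from several files. For the Python packages, a minimal sketch that compares installed versions against `>=` minimums using only the standard library (`importlib.metadata`, available from Python 3.8). The simple numeric parser is an assumption that ignores pre-release and local tags; the `packaging` library's `Version` class is the more robust choice.

```python
import re
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Optional, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Extract leading numeric components, e.g. '2.0.1+cu117' -> (2, 0, 1)."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"unparseable version: {text!r}")
    return tuple(int(part) for part in match.group().split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """True if installed >= minimum, padding the shorter tuple with zeros."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


def check_requirements(minimums: Dict[str, str]) -> Dict[str, Optional[bool]]:
    """Map each package to True/False, or None if it is not installed."""
    report: Dict[str, Optional[bool]] = {}
    for package, minimum in minimums.items():
        try:
            report[package] = meets_minimum(version(package), minimum)
        except PackageNotFoundError:
            report[package] = None
    return report


# A few of the ">=" constraints listed above
print(check_requirements({"torch": "1.8.0", "torchvision": "0.9.0", "numpy": "1.23.5"}))
```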