hugsvision

HugsVision is an easy-to-use Hugging Face wrapper for state-of-the-art computer vision

Science Score: 46.0%

This score indicates how likely this project is to be science-related based on various indicators:

- ○ CITATION.cff file

- ✓ codemeta.json file: Found codemeta.json file

- ✓ .zenodo.json file: Found .zenodo.json file

- ○ DOI references

- ✓ Academic publication links: Links to arxiv.org

- ✓ Committers with academic emails: 1 of 2 committers (50.0%) from academic institutions

- ○ Institutional organization owner

- ○ JOSS paper metadata

- ○ Scientific vocabulary similarity: Low similarity (20.3%) to scientific vocabulary

Repository

HugsVision is an easy-to-use Hugging Face wrapper for state-of-the-art computer vision

Basic Info

- Host: GitHub

- Owner: qanastek

- License: mit

- Language: Jupyter Notebook

- Default Branch: main

- Homepage: https://pypi.org/project/hugsvision/

- Size: 103 MB

Statistics

- Stars: 197

- Watchers: 2

- Forks: 21

- Open Issues: 18

- Releases: 0

Metadata Files

README.md

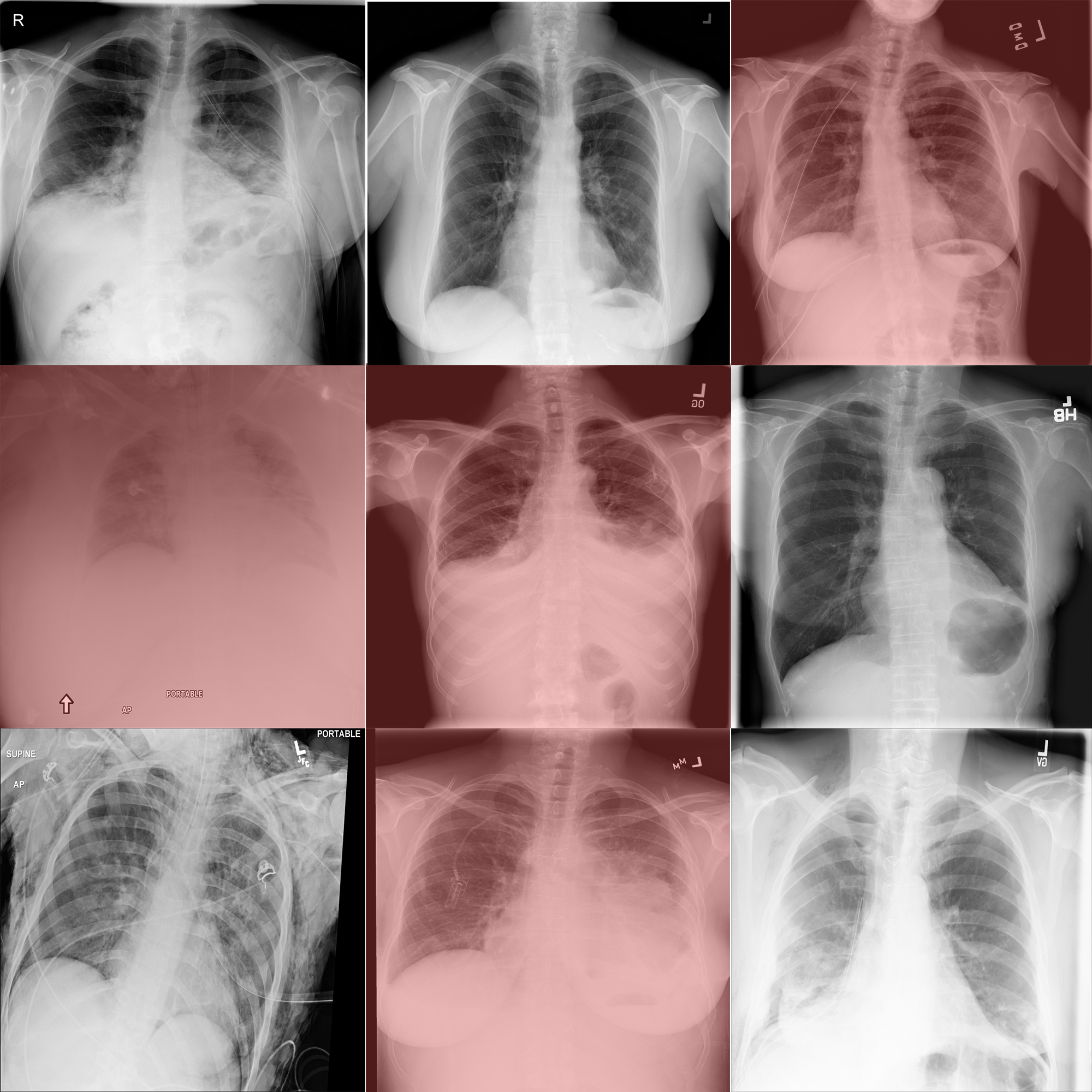

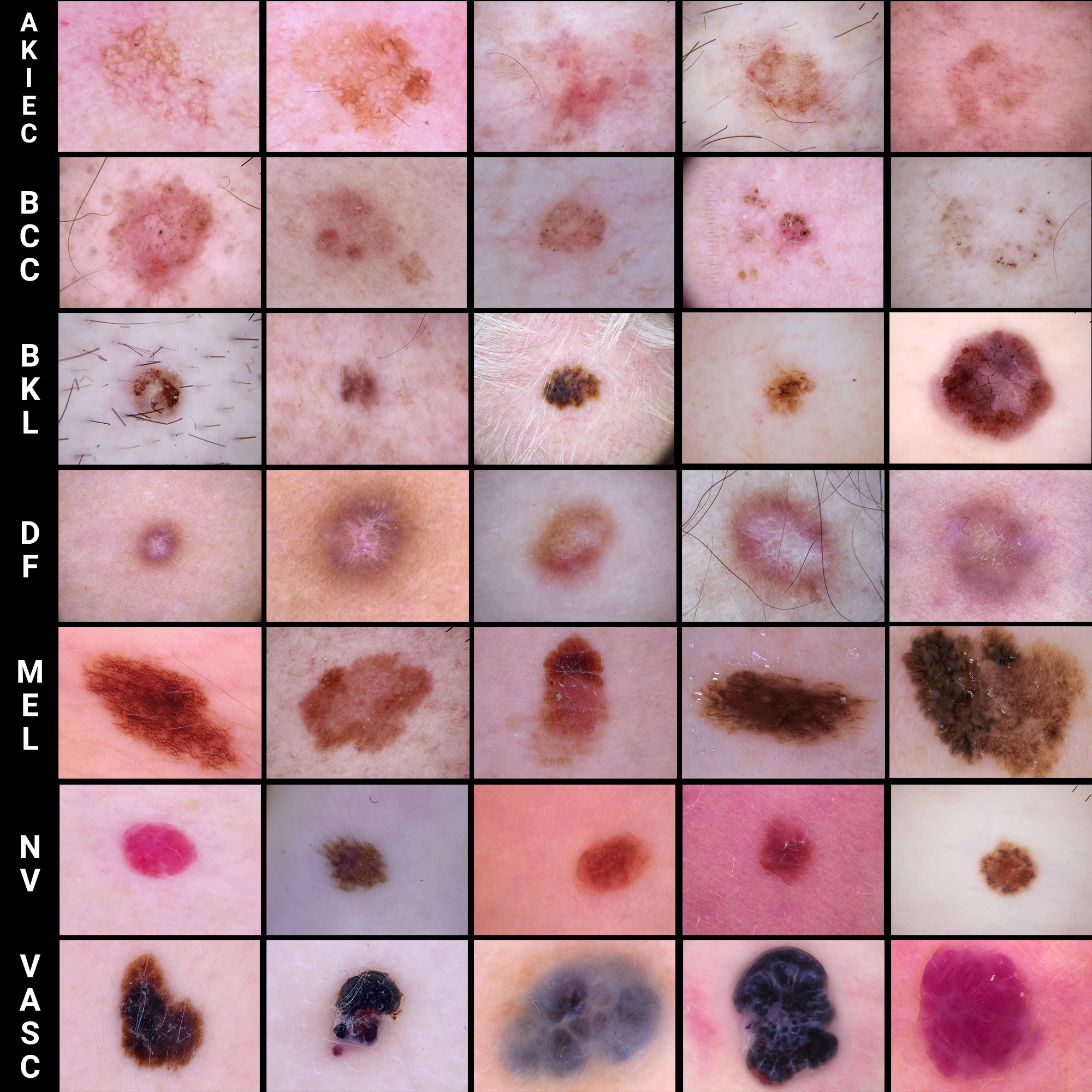

HugsVision is an open-source, easy-to-use, all-in-one Hugging Face wrapper for computer vision.

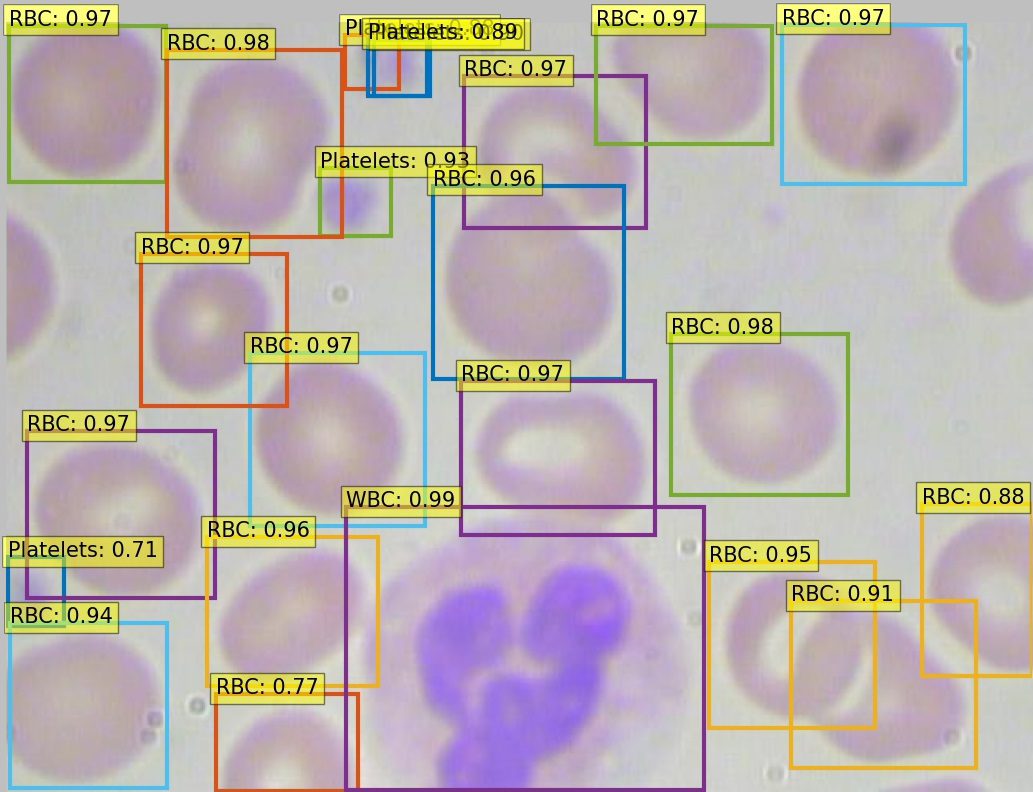

The goal is to create a fast, flexible and user-friendly toolkit that can be used to easily develop state-of-the-art computer vision technologies, including systems for Image Classification, Semantic Segmentation, Object Detection, Image Generation, Denoising and much more.

HugsVision is currently in beta.

Quick installation

HugsVision is constantly evolving. New features, tutorials, and documentation will appear over time. HugsVision can be installed via PyPI to quickly use the standard library. Alternatively, a local installation is available for users who want to run experiments and modify or customize the toolkit. HugsVision supports both CPU and GPU computation. For most recipes, however, a GPU is necessary during training. Please note that CUDA must be properly installed to use GPUs.
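Before starting a training recipe, it can help to confirm that PyTorch actually sees a CUDA device. The snippet below is a minimal sketch, not part of HugsVision: the helper name `pick_device` is hypothetical, and it only relies on `torch.cuda.is_available()` from PyTorch, falling back to the CPU when PyTorch is not installed.

```python
def pick_device():
    """Return "cuda" when PyTorch reports a usable GPU, otherwise "cpu".

    Hypothetical helper for illustration; it is not part of HugsVision.
    """
    try:
        import torch
    except ImportError:
        # PyTorch is not installed yet, so no GPU can be used from Python.
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


print("Selected device:", pick_device())
```

If this prints `cpu` on a machine with a GPU, the CUDA installation or the PyTorch build is the first thing to check.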

Anaconda setup

bash

conda create --name HugsVision python=3.6 -y

conda activate HugsVision

More information on managing environments with Anaconda can be found in the conda cheat sheet.

Install via PyPI

Once you have created your Python environment (Python 3.6+) you can simply type:

bash

pip install hugsvision

Install with GitHub

Once you have created your Python environment (Python 3.6+) you can simply type:

bash

git clone https://github.com/qanastek/HugsVision.git

cd HugsVision

pip install -r requirements.txt

pip install --editable .

Because the package is installed with the --editable flag, any modification made to the hugsvision package takes effect immediately, without reinstalling.
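To check that Python resolves the package from your clone rather than from a copy in site-packages, you can look up where the module is loaded from. This is a small sketch using only the standard library; the helper name `locate_package` is hypothetical, and it assumes the import name is `hugsvision`.

```python
import importlib.util


def locate_package(name):
    """Return the file path a top-level package is loaded from, or None.

    Hypothetical helper for illustration; it uses only the standard library.
    """
    spec = importlib.util.find_spec(name)
    if spec is None:
        # The package is not installed in the current environment.
        return None
    return spec.origin


# Assumption: the import name of the package is "hugsvision".
print(locate_package("hugsvision"))
```

With an editable install, the printed path should point inside the cloned HugsVision directory.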

Example Usage

Let's train a binary classifier that distinguishes patients with and without pneumothorax from their chest radiographs.

Steps:

- Move to the recipe directory: `cd recipes/pneumothorax/binary_classification/`

- Download the dataset here (~779 MB).

- Transform the dataset into a directory-based one using the `process.py` script.

- Train the model: `python train_example_vit.py --imgs="./pneumothorax_binary_classification_task_data/" --name="pneumo_model_vit" --epochs=1`

- Rename `<MODEL_PATH>/config.json` to `<MODEL_PATH>/preprocessor_config.json`. In my case, the model is located at an output path like `./out/MYVITMODEL/1_2021-08-10-00-53-58/model/`.

- Make a prediction: `python predict.py --img="42.png" --path="./out/MYVITMODEL/1_2021-08-10-00-53-58/model/"`
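The rename step can also be scripted. The sketch below is illustrative only: `rename_config` and its `keep_original` option are hypothetical names, not part of HugsVision. By default it renames the file as described in the steps; `keep_original=True` copies it instead, which is an addition of this example rather than something the recipe asks for.

```python
import shutil
from pathlib import Path


def rename_config(model_dir, keep_original=False):
    """Turn <model_dir>/config.json into <model_dir>/preprocessor_config.json.

    Hypothetical helper for illustration. With keep_original=True the file
    is copied instead of renamed, so config.json stays in place.
    """
    model_dir = Path(model_dir)
    src = model_dir / "config.json"
    dst = model_dir / "preprocessor_config.json"
    if not src.is_file():
        raise FileNotFoundError("No config.json found in {}".format(model_dir))
    if keep_original:
        shutil.copyfile(str(src), str(dst))
    else:
        src.rename(dst)
    return dst


# Example call, using the output path mentioned in the steps:
# rename_config("./out/MYVITMODEL/1_2021-08-10-00-53-58/model/")
```

The example call is left commented out because the timestamped output directory differs for every training run.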

Models recipes

You can find all the currently available models or tasks under the recipes/ folder.

HuggingFace Spaces

You can try some of the models or tasks on HuggingFace thanks to their amazing Spaces.

Model architectures

All the model checkpoints provided by Transformers and compatible with our tasks can be seamlessly integrated from the huggingface.co model hub where they are uploaded directly by users and organizations.

Before you start implementing, please check whether your model has a PyTorch implementation by referring to this table.

Transformers currently provides the following architectures for Computer Vision:

- ViT (from Google Research, Brain Team) released with the paper An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale, by Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, Neil Houlsby.

- DeiT (from Facebook AI and Sorbonne University) released with the paper Training data-efficient image transformers & distillation through attention by Hugo Touvron, Matthieu Cord, Matthijs Douze, Francisco Massa, Alexandre Sablayrolles, Hervé Jégou.

- BEiT (from Microsoft Research) released with the paper BEIT: BERT Pre-Training of Image Transformers by Hangbo Bao, Li Dong and Furu Wei.

- DETR (from Facebook AI) released with the paper End-to-End Object Detection with Transformers by Nicolas Carion, Francisco Massa, Gabriel Synnaeve, Nicolas Usunier, Alexander Kirillov and Sergey Zagoruyko.

Build PyPI package

Build: python setup.py sdist bdist_wheel

Upload: twine upload dist/*

Citation

If you want to cite the tool you can use this:

bibtex

@misc{HugsVision,

title={HugsVision},

author={Yanis Labrak},

publisher={GitHub},

journal={GitHub repository},

howpublished={\url{https://github.com/qanastek/HugsVision}},

year={2021}

}

Owner

- Name: Labrak Yanis

- Login: qanastek

- Kind: user

- Location: Avignon, France

- Company: Laboratoire Informatique d'Avignon

- Website: linkedin.com/in/yanis-labrak-8a7412145/

- Twitter: LabrakYanis

- Repositories: 8

- Profile: https://github.com/qanastek

👨🏻🎓 PhD. student in Computer Science (CS), Avignon University 🇫🇷 🏛 Research Scientist - Machine Learning in Healthcare

GitHub Events

Total

- Watch event: 6

- Pull request event: 1

- Fork event: 1

Last Year

- Watch event: 6

- Pull request event: 1

- Fork event: 1

Committers

Last synced: 8 months ago

Top Committers

| Name | Email | Commits |
|---|---|---|
| Labrak Yanis | y****k@a****r | 72 |
| Manuel Romero | m****8@g****m | 1 |

Issues and Pull Requests

Last synced: 8 months ago

All Time

- Total issues: 40

- Total pull requests: 3

- Average time to close issues: 20 days

- Average time to close pull requests: 4 months

- Total issue authors: 10

- Total pull request authors: 3

- Average comments per issue: 1.03

- Average comments per pull request: 0.33

- Merged pull requests: 1

- Bot issues: 0

- Bot pull requests: 0

Past Year

- Issues: 0

- Pull requests: 1

- Average time to close issues: N/A

- Average time to close pull requests: N/A

- Issue authors: 0

- Pull request authors: 1

- Average comments per issue: 0

- Average comments per pull request: 0.0

- Merged pull requests: 0

- Bot issues: 0

- Bot pull requests: 0

Top Authors

Issue Authors

- qanastek (29)

- johnfelipe (2)

- ohjho (2)

- Breeze-Zero (1)

- raseena-tp (1)

- zihaoz96 (1)

- minaahmed (1)

- guneetsk99 (1)

- M-Melodious (1)

- RicoFio (1)

Pull Request Authors

- ReiiNoki (2)

- ohjho (1)

- mrm8488 (1)

Dependencies

- Pillow *

- matplotlib *

- opencv-python *

- pycocotools *

- pytorch-lightning *

- scikit-learn *

- tabulate *

- timm *

- torch *

- torchmetrics *

- torchvision *

- tqdm *

- transformers *

- ubuntu 18.04 build