logpai

A machine learning toolkit for log parsing [ICSE'19, DSN'16]

Science Score: 49.0%

This score indicates how likely this project is to be science-related based on various indicators:

- ○ CITATION.cff file

- ✓ codemeta.json file: Found codemeta.json file

- ✓ .zenodo.json file: Found .zenodo.json file

- ✓ DOI references: Found 2 DOI reference(s) in README

- ✓ Academic publication links: Links to arxiv.org, researchgate.net, ieee.org

- ○ Committers with academic emails

- ○ Institutional organization owner

- ○ JOSS paper metadata

- ○ Scientific vocabulary similarity: Low similarity (12.4%) to scientific vocabulary

Repository

Statistics

- Stars: 1,811

- Watchers: 58

- Forks: 577

- Open Issues: 4

- Releases: 5

Metadata Files

README.md

Logparser

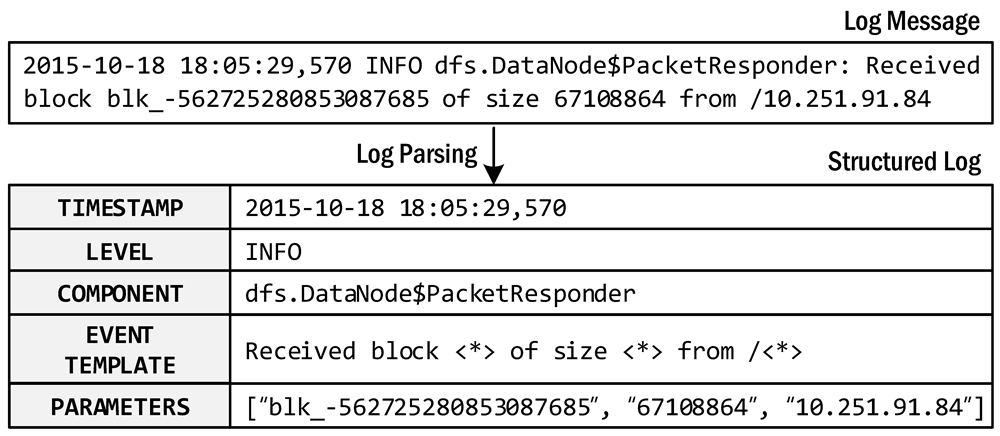

Logparser provides a machine learning toolkit and benchmarks for automated log parsing, which is a crucial step for structured log analytics. By applying logparser, users can automatically extract event templates from unstructured logs and convert raw log messages into a sequence of structured events. The process of log parsing is also known as message template extraction, log key extraction, or log message clustering in the literature.

An example of log parsing
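To make the idea concrete, here is a minimal sketch of the relationship between a raw message, an event template, and its parameters. It is an illustration only, not how any parser in this toolkit is implemented: the helper names `template_to_regex` and `extract_parameters` are hypothetical, and the template is assumed to be known already, whereas a real log parser has to discover it.

```python
import re


def template_to_regex(template):
    """Turn an event template with <*> wildcards into an anchored regex."""
    # Escape the constant parts and capture whatever sits in each <*> slot.
    parts = [re.escape(part) for part in template.split("<*>")]
    return re.compile("^" + "(.*?)".join(parts) + "$")


def extract_parameters(template, message):
    """Return the wildcard values of `message`, or None if it does not match."""
    match = template_to_regex(template).match(message)
    return list(match.groups()) if match else None


if __name__ == "__main__":
    template = "Received block <*> of size <*> from <*>"
    message = (
        "Received block blk_3587508140051953248 of size 67108864 "
        "from /10.251.42.84"
    )
    print(extract_parameters(template, message))
```

The constant text of the message becomes the structured event, and the captured values correspond to what the parsed output calls the parameter list.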

New updates

- Since the first release of logparser, many PRs and issues have been submitted due to incompatibility with Python 3. We have finally updated logparser to v1.0.0 with support for Python 3. Thanks for all the contributions (#PR86, #PR85, #PR83, #PR80, #PR65, #PR57, #PR53, #PR52, #PR51, #PR49, #PR18, #PR22)!

- We have built the package wheel logparser3 and released it on PyPI. Please install it via `pip install logparser3`.

- We have refactored the code structure and reformatted the code with the Python code formatter black.

Log parsers available:

| Publication | Parser | Paper Title | Benchmark |
|:-----------:|:------:|:------------|:---------:|
| IPOM'03 | SLCT | A Data Clustering Algorithm for Mining Patterns from Event Logs, by Risto Vaarandi. | :arrow_upper_right: |
| QSIC'08 | AEL | Abstracting Execution Logs to Execution Events for Enterprise Applications, by Zhen Ming Jiang, Ahmed E. Hassan, Parminder Flora, Gilbert Hamann. | :arrow_upper_right: |
| KDD'09 | IPLoM | Clustering Event Logs Using Iterative Partitioning, by Adetokunbo Makanju, A. Nur Zincir-Heywood, Evangelos E. Milios. | :arrow_upper_right: |
| ICDM'09 | LKE | Execution Anomaly Detection in Distributed Systems through Unstructured Log Analysis, by Qiang Fu, Jian-Guang Lou, Yi Wang, Jiang Li. [Microsoft] | :arrow_upper_right: |
| MSR'10 | LFA | Abstracting Log Lines to Log Event Types for Mining Software System Logs, by Meiyappan Nagappan, Mladen A. Vouk. | :arrow_upper_right: |
| CIKM'11 | LogSig | LogSig: Generating System Events from Raw Textual Logs, by Liang Tang, Tao Li, Chang-Shing Perng. | :arrow_upper_right: |
| SCC'13 | SHISO | Incremental Mining of System Log Format, by Masayoshi Mizutani. | :arrow_upper_right: |
| CNSM'15 | LogCluster | LogCluster - A Data Clustering and Pattern Mining Algorithm for Event Logs, by Risto Vaarandi, Mauno Pihelgas. | :arrow_upper_right: |
| CNSM'15 | LenMa | Length Matters: Clustering System Log Messages using Length of Words, by Keiichi Shima. | :arrow_upper_right: |
| CIKM'16 | LogMine | LogMine: Fast Pattern Recognition for Log Analytics, by Hossein Hamooni, Biplob Debnath, Jianwu Xu, Hui Zhang, Geoff Jiang, Abdullah Mueen. [NEC] | :arrow_upper_right: |
| ICDM'16 | Spell | Spell: Streaming Parsing of System Event Logs, by Min Du, Feifei Li. | :arrow_upper_right: |
| ICWS'17 | Drain | Drain: An Online Log Parsing Approach with Fixed Depth Tree, by Pinjia He, Jieming Zhu, Zibin Zheng, and Michael R. Lyu. | :arrow_upper_right: |
| ICPC'18 | MoLFI | A Search-based Approach for Accurate Identification of Log Message Formats, by Salma Messaoudi, Annibale Panichella, Domenico Bianculli, Lionel Briand, Raimondas Sasnauskas. | :arrow_upper_right: |
| TSE'20 | Logram | Logram: Efficient Log Parsing Using n-Gram Dictionaries, by Hetong Dai, Heng Li, Che-Shao Chen, Weiyi Shang, and Tse-Hsun (Peter) Chen. | :arrow_upper_right: |
| ECML-PKDD'20 | NuLog | Self-Supervised Log Parsing, by Sasho Nedelkoski, Jasmin Bogatinovski, Alexander Acker, Jorge Cardoso, Odej Kao. | :arrow_upper_right: |
| ICSME'22 | ULP | An Effective Approach for Parsing Large Log Files, by Issam Sedki, Abdelwahab Hamou-Lhadj, Otmane Ait-Mohamed, Mohammed A. Shehab. | :arrow_upper_right: |
| TSC'23 | Brain | Brain: Log Parsing with Bidirectional Parallel Tree, by Siyu Yu, Pinjia He, Ningjiang Chen, Yifan Wu. | :arrow_upper_right: |
| ICSE'24 | DivLog | DivLog: Log Parsing with Prompt Enhanced In-Context Learning, by Junjielong Xu, Ruichun Yang, Yintong Huo, Chengyu Zhang, and Pinjia He. | :arrow_upper_right: |

:bulb: You are welcome to submit a PR to add your parser code to logparser and your paper to the table.

Installation

We recommend installing the logparser package and requirements via pip install.

pip install logparser3

In particular, the package depends on the following requirements. Note that regex matching in Python is brittle, so we recommend pinning the regex library to version 2022.3.2.

Note: If you encounter "Error: need to escape...", please follow the instructions here.

- python 3.6+

- regex 2022.3.2

- numpy

- pandas

- scipy

- scikit-learn

Conditional requirements:

- If using MoLFI: deap

- If using SHISO: nltk

- If using SLCT: gcc

- If using LogCluster: perl

- If using NuLog: torch, torchvision, keras_preprocessing

- If using DivLog: openai, tiktoken (requires Python 3.8+)
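Since the regex library is pinned to a specific version, it can help to verify the installed version before running a parser. The following is a minimal sketch, not part of logparser: the helper name `check_pin` is hypothetical, and it relies on the standard-library `importlib.metadata` module, which assumes Python 3.8 or later.

```python
from importlib import metadata


def check_pin(package, expected_version):
    """Return (matches, installed_version); installed_version is None if absent."""
    try:
        installed = metadata.version(package)
    except metadata.PackageNotFoundError:
        return False, None
    return installed == expected_version, installed


if __name__ == "__main__":
    # 2022.3.2 is the regex version recommended in the requirements above.
    ok, installed = check_pin("regex", "2022.3.2")
    if installed is None:
        print("regex is not installed")
    elif not ok:
        print(f"regex {installed} is installed, but 2022.3.2 is recommended")
    else:
        print("regex version matches the recommended pin")
```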

Get started

Run the demo:

For each log parser, we provide a demo to help you get started. Each demo shows the basic usage of a target log parser and the hyper-parameters to configure. For example, the following command shows how to run the demo for Drain.

    cd logparser/Drain
    python demo.py

Run the benchmark:

For each log parser, we provide a benchmark script to run log parsing on the loghub_2k datasets for evaluating parsing accuracy. You can also use other benchmark datasets for log parsing.

    cd logparser/Drain
    python benchmark.py

The benchmarking results can be found in the README file of each parser, e.g., https://github.com/logpai/logparser/tree/main/logparser/Drain#benchmark.
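As a rough illustration of what a parsing accuracy score can measure, the sketch below computes a grouping-style accuracy: a log message counts as correct only if the set of messages sharing its parsed event is exactly the set sharing its ground-truth event. This is one common definition and an assumption here; the evaluator shipped with the benchmark scripts may differ in detail, and the function name `grouping_accuracy` is hypothetical.

```python
from collections import defaultdict


def grouping_accuracy(truth_ids, parsed_ids):
    """Fraction of messages whose parsed group equals their ground-truth group."""
    if len(truth_ids) != len(parsed_ids):
        raise ValueError("both label lists must have the same length")
    if not truth_ids:
        return 0.0

    # Map every event id to the set of message indices assigned to it.
    truth_groups = defaultdict(set)
    parsed_groups = defaultdict(set)
    for index, (truth, parsed) in enumerate(zip(truth_ids, parsed_ids)):
        truth_groups[truth].add(index)
        parsed_groups[parsed].add(index)

    correct = sum(
        1
        for truth, parsed in zip(truth_ids, parsed_ids)
        if truth_groups[truth] == parsed_groups[parsed]
    )
    return correct / len(truth_ids)


if __name__ == "__main__":
    truth = ["A", "A", "B", "C"]
    parsed = ["x", "x", "y", "y"]  # the parser merged events B and C
    print(grouping_accuracy(truth, parsed))
```

Under this definition, merging two true events into one template (or splitting one event into two) marks every message in the affected groups as wrong, which makes the score strict.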

Parse your own logs:

It is easy to apply logparser to parse your own log data. First install the logparser3 package. Then write your own script following the code snippet below to start log parsing. See the full example code at example/parse_your_own_logs.py.

```python
from logparser.Drain import LogParser

input_dir = 'PATH_TO_LOGS/'  # The input directory of the log file
output_dir = 'result/'  # The output directory of parsing results
log_file = 'unknow.log'  # The input log file name
# Log format used to split message fields (assumed example; adjust to your logs)
log_format = '<Date> <Time> <Level>:<Content>'
# Regular expression list for optional preprocessing (default: [])
regex = [
    r'(/|)([0-9]+\.){3}[0-9]+(:[0-9]+|)(:|)'  # IP
]
st = 0.5  # Similarity threshold
depth = 4  # Depth of all leaf nodes

parser = LogParser(log_format, indir=input_dir, outdir=output_dir, depth=depth, st=st, rex=regex)
parser.parse(log_file)
```
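To see what the optional preprocessing list is for, the sketch below applies the same IP pattern (written with an escaped dot) to a sample message and replaces each match with the `<*>` wildcard. It is an illustration under assumptions: the helper `mask_variables` is hypothetical, it uses the standard-library `re` module rather than the `regex` package, and the exact preprocessing inside each parser may differ.

```python
import re

# IP address with an optional leading slash and an optional port.
IP_PATTERN = r'(/|)([0-9]+\.){3}[0-9]+(:[0-9]+|)(:|)'


def mask_variables(message, patterns):
    """Replace every match of each pattern with the <*> wildcard."""
    for pattern in patterns:
        message = re.sub(pattern, "<*>", message)
    return message


if __name__ == "__main__":
    raw = (
        "Received block blk_3587508140051953248 of size 67108864 "
        "from /10.251.42.84"
    )
    print(mask_variables(raw, [IP_PATTERN]))
```

Masking obvious variables up front gives the parser fewer distinct tokens to reconcile, which is why a short list of domain-specific patterns is often worth supplying.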

After running logparser, you can obtain extracted event templates and parsed structured logs in the output folder.

+ `*_templates.csv` (See example [HDFS_2k.log_templates.csv](https://github.com/logpai/logparser/blob/main/logparser/Drain/demo_result/HDFS_2k.log_templates.csv))

| EventId | EventTemplate | Occurrences |

|----------|------------------------------------------------|-------------|

| dc2c74b7 | PacketResponder <*> for block <*> terminating | 311 |

| e3df2680 | Received block <*> of size <*> from <*> | 292 |

| 09a53393 | Receiving block <*> src: <*> dest: <*> | 292 |

+ `*_structured.csv` (See example [HDFS_2k.log_structured.csv](https://github.com/logpai/logparser/blob/main/logparser/Drain/demo_result/HDFS_2k.log_structured.csv))

| ... | Level | Content | EventId | EventTemplate | ParameterList |

|-----|-------|-----------------------------------------------------------------------------------------------|----------|---------------------------------------------------------------------|--------------------------------------------|

| ... | INFO | PacketResponder 1 for block blk_38865049064139660 terminating | dc2c74b7 | PacketResponder <*> for block <*> terminating | ['1', 'blk_38865049064139660'] |

| ... | INFO | Received block blk_3587508140051953248 of size 67108864 from /10.251.42.84 | e3df2680 | Received block <*> of size <*> from <*> | ['blk_3587508140051953248', '67108864', '/10.251.42.84'] |

| ... | INFO | Verification succeeded for blk_-4980916519894289629 | 32777b38 | Verification succeeded for <*> | ['blk_-4980916519894289629'] |
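The structured output can be consumed with the standard library alone. The sketch below is an assumption-laden example rather than part of logparser: it reads rows shaped like the `*_structured.csv` table above, turns the `ParameterList` text back into a Python list, and counts messages per event. The helper names and the reduced column set in `SAMPLE` are hypothetical; a real file contains more columns.

```python
import ast
import csv
import io
from collections import Counter

# A reduced stand-in for a *_structured.csv file (real files have more columns).
SAMPLE = """Level,Content,EventId,EventTemplate,ParameterList
INFO,PacketResponder 1 for block blk_38865049064139660 terminating,dc2c74b7,PacketResponder <*> for block <*> terminating,"['1', 'blk_38865049064139660']"
INFO,Verification succeeded for blk_-4980916519894289629,32777b38,Verification succeeded for <*>,"['blk_-4980916519894289629']"
"""


def load_structured(csv_file):
    """Read structured rows and parse ParameterList into a real list."""
    rows = []
    for row in csv.DictReader(csv_file):
        # The column stores the text form of a Python list of strings.
        row["ParameterList"] = ast.literal_eval(row["ParameterList"])
        rows.append(row)
    return rows


def count_events(rows):
    """Count how many messages were assigned to each EventId."""
    return Counter(row["EventId"] for row in rows)


if __name__ == "__main__":
    rows = load_structured(io.StringIO(SAMPLE))
    print(rows[0]["ParameterList"])
    print(count_events(rows))
```

For a file on disk, pass `open(path, newline="")` instead of the in-memory sample.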

Production use

Logparser is intended mainly for research and benchmarking. Researchers can use logparser as a code base to develop new log parsers, while practitioners can assess the performance and scalability of current log parsing methods through our benchmarks. We strongly encourage practitioners to try logparser in their production environments, but be aware that the current implementation is far from ready for production use. Although we currently have no plan to make it production-ready, we do have a few suggestions for developers who want to build an intelligent production-level log parser.

- Please be aware of the licenses of the third-party libraries used in logparser. We suggest keeping the one parser you need, deleting the others, and then rebuilding the package wheel. This does not break the use of logparser.

- Please improve the efficiency and scalability of logparser with multi-processing, add failure recovery, and add persistence to disk or to a message queue such as Kafka.

- Drain3 is a good reference: it is built with practical enhancements for production scenarios.
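As one possible starting point for the persistence and failure-recovery suggestion, the sketch below saves a dictionary of learned templates to disk atomically and reloads it on restart. It is not taken from logparser or Drain3: the function names, the JSON layout, and the idea of keeping templates in a plain dictionary are all assumptions made for illustration.

```python
import json
import os
import tempfile


def save_state(templates, path):
    """Write the template state to `path` atomically (temp file, then rename)."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as handle:
            json.dump(templates, handle)
            handle.flush()
            os.fsync(handle.fileno())
        # os.replace is atomic on the same filesystem, so a crash never
        # leaves a half-written state file behind.
        os.replace(tmp_path, path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_state(path):
    """Load previously saved templates, or start empty if none exist."""
    if not os.path.exists(path):
        return {}
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        state_path = os.path.join(workdir, "templates.json")
        state = load_state(state_path)
        state["dc2c74b7"] = {
            "template": "PacketResponder <*> for block <*> terminating",
            "occurrences": 311,
        }
        save_state(state, state_path)
        print(load_state(state_path))
```

Writing to a temporary file first means a process killed mid-write still finds the previous snapshot intact on restart.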

Citation

If you use our logparser tools or benchmarking results in your publication, please cite the following papers.

- [ICSE'19] Jieming Zhu, Shilin He, Jinyang Liu, Pinjia He, Qi Xie, Zibin Zheng, Michael R. Lyu. Tools and Benchmarks for Automated Log Parsing. International Conference on Software Engineering (ICSE), 2019.

- [DSN'16] Pinjia He, Jieming Zhu, Shilin He, Jian Li, Michael R. Lyu. An Evaluation Study on Log Parsing and Its Use in Log Mining. IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), 2016.

Contributors

- Zhujiem

- Pinjia He

- LIU, Jinyang

- Junjielong Xu

- Shilin HE

- Joseph

- José A. Cordón

- Rustam Temirov

- Siyu Yu (Youth Yu)

- Thomas Ryckeboer

- Isuru Boyagane

Discussion

You are welcome to join our WeChat group for questions and discussion. Alternatively, you can open an issue here.

Owner

- Name: LOGPAI

- Login: logpai

- Kind: organization

- Website: https://logpai.com

- Repositories: 17

- Profile: https://github.com/logpai

Log Analytics Powered by AI

GitHub Events

Total

- Issues event: 10

- Watch event: 200

- Issue comment event: 9

- Push event: 6

- Pull request event: 9

- Fork event: 28

Last Year

- Issues event: 10

- Watch event: 200

- Issue comment event: 9

- Push event: 6

- Pull request event: 9

- Fork event: 28

Committers

Last synced: 9 months ago

Top Committers

| Name | Email | Commits |

|---|---|---|

| PunyTitan | p****e@g****m | 57 |

| Jamie Zhu | z****e@g****m | 39 |

| Jamie Zhu | j****u | 37 |

| Jinyang88 | l****5@g****m | 10 |

| Siyuexi | s****i@f****m | 8 |

| jimzhu | j****z@g****m | 7 |

| Shilin He | s****l@g****m | 5 |

| github-actions[bot] | 4****] | 3 |

| Joseph Hit Hard | m****5@g****m | 1 |

| José Antonio Cordón | 8****5 | 1 |

| Rustam Temirov | r****9@g****m | 1 |

| Siyu Yu (Youth Yu) | g****6@g****m | 1 |

| Thomas Ryckeboer | 3****k | 1 |

| W. M. Isuru Bandara Boyagane | i****5@g****m | 1 |

| dependabot[bot] | 4****] | 1 |

Issues and Pull Requests

Last synced: 6 months ago

All Time

- Total issues: 80

- Total pull requests: 36

- Average time to close issues: 3 months

- Average time to close pull requests: 12 months

- Total issue authors: 65

- Total pull request authors: 26

- Average comments per issue: 2.11

- Average comments per pull request: 0.75

- Merged pull requests: 9

- Bot issues: 0

- Bot pull requests: 1

Past Year

- Issues: 7

- Pull requests: 9

- Average time to close issues: 27 days

- Average time to close pull requests: 20 days

- Issue authors: 6

- Pull request authors: 6

- Average comments per issue: 0.71

- Average comments per pull request: 0.11

- Merged pull requests: 2

- Bot issues: 0

- Bot pull requests: 0

Top Authors

Issue Authors

- zhujiem (4)

- ankit-nassa (3)

- psychogyiokostas (3)

- Tan3364 (2)

- charleswu52 (2)

- xiaoze17 (2)

- fmjubori (2)

- Anny2131 (2)

- ghost (2)

- white2018 (2)

- kevko2020 (2)

- vikramriyer (1)

- j4d (1)

- MarcPlatini (1)

- HankKung (1)

Pull Request Authors

- Siyuexi (5)

- tokyojackson (3)

- JoeHitHard (3)

- StruggleForCode (2)

- Aaryaveerkrishna23 (2)

- dependabot[bot] (2)

- xxxx2077 (2)

- Wapiti08 (2)

- thomasryck (2)

- gutjuri (2)

- zzkluck (1)

- axwitech (1)

- laangzj (1)

- lopozz (1)

- AEnguerrand (1)

Packages

- Total packages: 2

- Total downloads: pypi 2,746 last-month

- Total dependent packages: 0 (may contain duplicates)

- Total dependent repositories: 0 (may contain duplicates)

- Total versions: 7

- Total maintainers: 1

pypi.org: logparser3

A machine learning toolkit for log parsing from LOGPAI

- Homepage: https://github.com/logpai/logparser

- Documentation: https://logparser3.readthedocs.io/

- License: Apache-2.0 License

- Latest release: 1.0.4 (published over 2 years ago)

pypi.org: logpai

Log Analytics Powered by AI

- Homepage: https://github.com/logpai/logparser

- Documentation: https://logpai.readthedocs.io/

- License: Apache-2.0 License

- Latest release: 1.0.0 (published over 2 years ago)

Dependencies

- deap *

- numpy *

- actions/checkout v3 composite

- actions/setup-python v4 composite

- akhilmhdh/contributors-readme-action master composite

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- numpy ==1.23.5

- openai ==0.27.8

- pandas ==1.5.3

- scikit_learn ==1.2.1

- tiktoken ==0.4.0

- tqdm ==4.64.1

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- numpy *

- pandas *

- regex ==2022.3.2

- scikit-learn *

- scipy *

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- keras_preprocessing ==1.1.2

- numpy *

- pandas *

- pillow ==6.1.0

- regex ==2022.3.2

- scikit_learn *

- scipy *

- torch ==1.3.1

- torchvision ==0.4.2

- tqdm *

- nltk *

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- numpy *

- pandas *

- regex ==2022.3.2

- scipy *

- deap *

- nltk *

- numpy *

- pandas *

- regex ==2022.3.2

- scikit-learn *

- scipy *

- tqdm *

- regex ==2022.3.2