Science Score: 64.0%

This score indicates how likely this project is to be science-related based on various indicators:

- ✓ CITATION.cff file: Found CITATION.cff file
- ✓ codemeta.json file: Found codemeta.json file
- ✓ .zenodo.json file: Found .zenodo.json file
- ○ DOI references
- ✓ Academic publication links: Links to zenodo.org
- ✓ Committers with academic emails: 1 of 51 committers (2.0%) from academic institutions
- ○ Institutional organization owner
- ○ JOSS paper metadata
- ○ Scientific vocabulary similarity: Low similarity (9.0%) to scientific vocabulary

Repository

YOLOv3 in PyTorch > ONNX > CoreML > TFLite

Basic Info

- Host: GitHub

- Owner: ultralytics

- License: agpl-3.0

- Language: Python

- Default Branch: master

- Homepage: https://docs.ultralytics.com

- Size: 10.5 MB

Statistics

- Stars: 10,430

- Watchers: 153

- Forks: 3,457

- Open Issues: 11

- Releases: 12

Metadata Files

README.md

Ultralytics YOLOv3 is a robust and efficient [computer vision](https://www.ultralytics.com/glossary/computer-vision-cv) model developed by [Ultralytics](https://www.ultralytics.com/). Built on the [PyTorch](https://pytorch.org/) framework, this implementation extends the original YOLOv3 architecture, renowned for its improvements in [object detection](https://www.ultralytics.com/glossary/object-detection) speed and accuracy over earlier versions. It incorporates best practices and insights from extensive research, making it a reliable choice for a wide range of vision AI applications. Explore the [Ultralytics Docs](https://docs.ultralytics.com/) for in-depth guidance (YOLOv3-specific docs may be limited, but general YOLO principles apply), open an issue on [GitHub](https://github.com/ultralytics/yolov5/issues/new/choose) for support, and join our [Discord community](https://discord.com/invite/ultralytics) for questions and discussions! For Enterprise License requests, please complete the form at [Ultralytics Licensing](https://www.ultralytics.com/license).

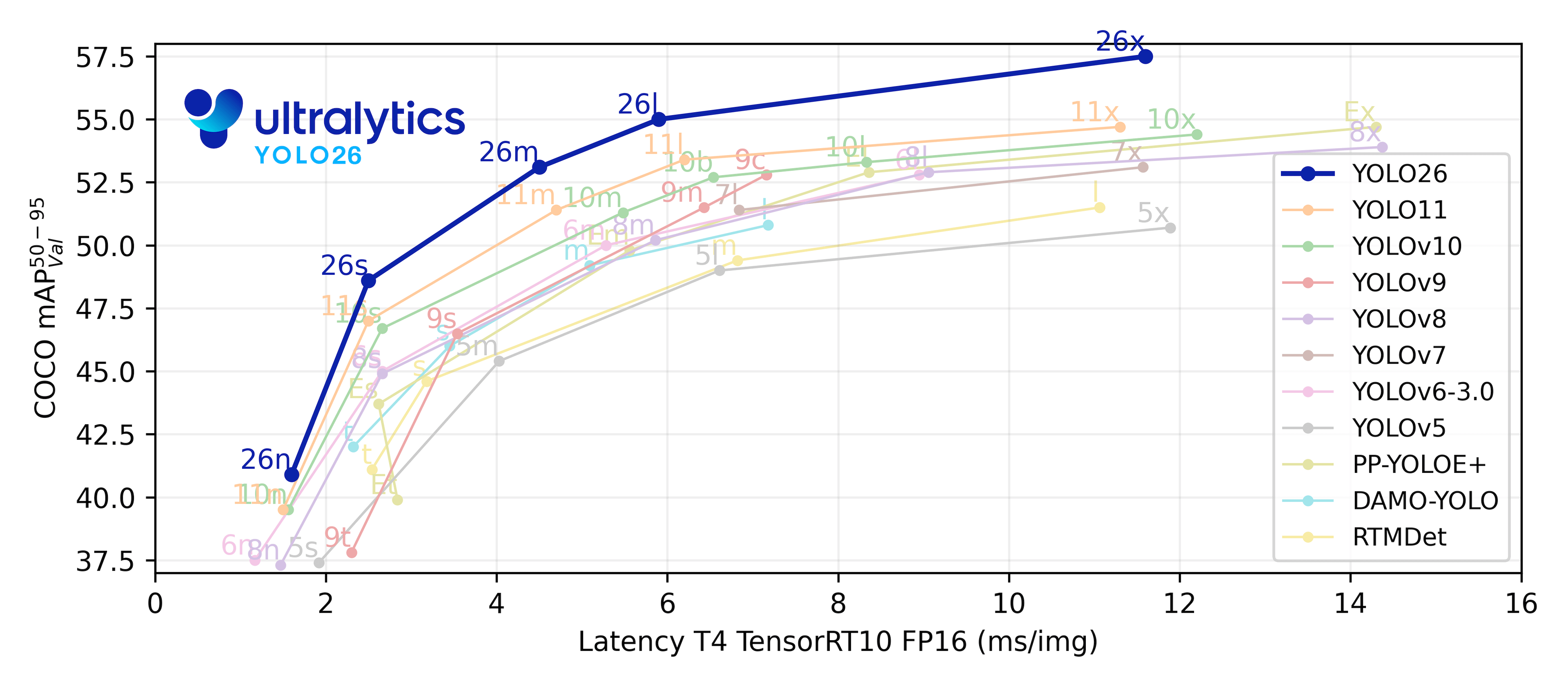

🚀 YOLO11: The Next Evolution

We are thrilled to introduce Ultralytics YOLO11 🚀, the latest advancement in our state-of-the-art vision models! Available now at the Ultralytics YOLO GitHub repository, YOLO11 continues our legacy of speed, precision, and user-friendly design. Whether you're working on object detection, instance segmentation, pose estimation, image classification, or oriented object detection (OBB), YOLO11 delivers the performance and flexibility needed for modern computer vision tasks.

Get started today and unlock the full potential of YOLO11! Visit the Ultralytics Docs for comprehensive guides and resources:

```bash
# Install the ultralytics package
pip install ultralytics
```

📚 Documentation

See the Ultralytics Docs for YOLOv3 for full documentation on training, testing, and deployment using the Ultralytics framework. While YOLOv3-specific documentation may be limited, the general YOLO principles apply. Below are quickstart examples adapted for YOLOv3 concepts.

Install

Clone the repository and install dependencies from `requirements.txt` in a [**Python>=3.8.0**](https://www.python.org/) environment. Ensure you have [**PyTorch>=1.8**](https://pytorch.org/get-started/locally/) installed. (Note: This repo is originally YOLOv5; dependencies should be compatible, but tailored testing for YOLOv3 is recommended.)

```bash
# Clone the YOLOv3 repository
git clone https://github.com/ultralytics/yolov3

# Navigate to the cloned directory
cd yolov3

# Install required packages
pip install -r requirements.txt
```

Inference with PyTorch Hub
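Before loading a model, it may help to confirm that the environment meets the versions named in the install step (Python>=3.8.0 and PyTorch>=1.8). The sketch below is only an illustration: `parse_version` and `meets_minimum` are hypothetical helpers, not part of this repository, and the comparison is deliberately simple (numeric components only, build suffixes such as `+cu117` ignored).

```python
import re
import sys
from importlib.metadata import PackageNotFoundError, version  # stdlib since Python 3.8


def parse_version(text):
    """Turn a version string such as '1.13.1+cu117' into a tuple of ints."""
    # Drop local/build suffixes such as '+cu117', then keep the leading digits of each part.
    core = text.split("+")[0]
    parts = []
    for piece in core.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Return True when `installed` is at least `minimum`."""
    a, b = parse_version(installed), parse_version(minimum)
    # Pad the shorter tuple with zeros so '1.8' compares equal to '1.8.0'.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


python_version = ".".join(str(n) for n in sys.version_info[:3])
print("Python", python_version, "OK" if meets_minimum(python_version, "3.8.0") else "too old")

try:
    torch_version = version("torch")  # reads package metadata without importing torch
    print("PyTorch", torch_version, "OK" if meets_minimum(torch_version, "1.8") else "too old")
except PackageNotFoundError:
    print("PyTorch is not installed")
```

Reading the version from package metadata avoids importing PyTorch just to check it, which keeps the check fast even on machines without a GPU.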

Use YOLOv3 via [PyTorch Hub](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/) for inference. [Models](https://github.com/ultralytics/yolov5/tree/master/models) like `yolov3.pt`, `yolov3-spp.pt`, `yolov3-tiny.pt` can be loaded.

```python
import torch

# Load a YOLOv3 model (e.g., yolov3, yolov3-spp)
model = torch.hub.load("ultralytics/yolov3", "yolov3", pretrained=True)  # specify 'yolov3' or other variants

# Define the input image source (URL, local file, PIL image, OpenCV frame, numpy array, or list)
img = "https://ultralytics.com/images/zidane.jpg"  # Example image

# Perform inference
results = model(img)

# Process the results (options: .print(), .show(), .save(), .crop(), .pandas())
results.print()  # Print results to console
results.show()  # Display results in a window
results.save()  # Save results to runs/detect/exp
```

Inference with detect.py

The `detect.py` script runs inference on various sources. Use `--weights yolov3.pt` or other YOLOv3 variants. It automatically downloads models and saves results to `runs/detect`.

```bash
# Run inference using a webcam with yolov3-tiny
python detect.py --weights yolov3-tiny.pt --source 0

# Run inference on a local image file with yolov3
python detect.py --weights yolov3.pt --source img.jpg

# Run inference on a local video file with yolov3-spp
python detect.py --weights yolov3-spp.pt --source vid.mp4

# Run inference on a screen capture
python detect.py --weights yolov3.pt --source screen

# Run inference on a directory of images
python detect.py --weights yolov3.pt --source path/to/images/

# Run inference on a text file listing image paths
python detect.py --weights yolov3.pt --source list.txt

# Run inference on a text file listing stream URLs
python detect.py --weights yolov3.pt --source list.streams

# Run inference using a glob pattern for images
python detect.py --weights yolov3.pt --source 'path/to/*.jpg'

# Run inference on a YouTube video URL
python detect.py --weights yolov3.pt --source 'https://youtu.be/LNwODJXcvt4'

# Run inference on an RTSP, RTMP, or HTTP stream
python detect.py --weights yolov3.pt --source 'rtsp://example.com/media.mp4'
```

Training

The commands below show how to train YOLOv3 models on the [COCO dataset](https://docs.ultralytics.com/datasets/detect/coco/). Models and datasets are downloaded automatically. Use the largest `--batch-size` your hardware allows.

```bash
# Train YOLOv3-tiny on COCO for 300 epochs (example settings)
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov3-tiny.yaml --batch-size 64

# Train YOLOv3 on COCO for 300 epochs (example settings)
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov3.yaml --batch-size 32

# Train YOLOv3-SPP on COCO for 300 epochs (example settings)
python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov3-spp.yaml --batch-size 16
```

Tutorials

Note: These tutorials primarily use YOLOv5 examples, but the principles often apply to YOLOv3 within the Ultralytics framework.

- **[Train Custom Data](https://docs.ultralytics.com/yolov5/tutorials/train_custom_data/)** 🚀 **RECOMMENDED**: Learn how to train models on your own datasets.
- **[Tips for Best Training Results](https://docs.ultralytics.com/guides/model-training-tips/)** ☘️: Improve your model's performance with expert tips.
- **[Multi-GPU Training](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training/)**: Speed up training using multiple GPUs.
- **[PyTorch Hub Integration](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading/)** 🌟 **NEW**: Easily load models using PyTorch Hub.
- **[Model Export (TFLite, ONNX, CoreML, TensorRT)](https://docs.ultralytics.com/yolov5/tutorials/model_export/)** 🚀: Convert your models to various deployment formats.
- **[NVIDIA Jetson Deployment](https://docs.ultralytics.com/guides/nvidia-jetson/)** 🌟 **NEW**: Deploy models on NVIDIA Jetson devices.
- **[Test-Time Augmentation (TTA)](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation/)**: Enhance prediction accuracy with TTA.
- **[Model Ensembling](https://docs.ultralytics.com/yolov5/tutorials/model_ensembling/)**: Combine multiple models for better performance.
- **[Model Pruning/Sparsity](https://docs.ultralytics.com/yolov5/tutorials/model_pruning_and_sparsity/)**: Optimize models for size and speed.
- **[Hyperparameter Evolution](https://docs.ultralytics.com/yolov5/tutorials/hyperparameter_evolution/)**: Automatically find the best training hyperparameters.
- **[Transfer Learning with Frozen Layers](https://docs.ultralytics.com/yolov5/tutorials/transfer_learning_with_frozen_layers/)**: Adapt pretrained models to new tasks efficiently.
- **[Architecture Summary](https://docs.ultralytics.com/yolov5/tutorials/architecture_description/)** 🌟 **NEW**: Understand the model architecture (focus on YOLOv3 principles).
- **[Ultralytics HUB Training](https://www.ultralytics.com/hub)** 🚀 **RECOMMENDED**: Train and deploy YOLO models using Ultralytics HUB.
- **[ClearML Logging](https://docs.ultralytics.com/yolov5/tutorials/clearml_logging_integration/)**: Integrate with ClearML for experiment tracking.
- **[Neural Magic DeepSparse Integration](https://docs.ultralytics.com/yolov5/tutorials/neural_magic_pruning_quantization/)**: Accelerate inference with DeepSparse.
- **[Comet Logging](https://docs.ultralytics.com/yolov5/tutorials/comet_logging_integration/)** 🌟 **NEW**: Log experiments using Comet ML.

🧩 Integrations

Ultralytics offers robust integrations with leading AI platforms to enhance your workflow, including dataset labeling, training, visualization, and model management. Discover how Ultralytics, in collaboration with partners like Weights & Biases, Comet ML, Roboflow, and Intel OpenVINO, can optimize your AI projects. Explore more at Ultralytics Integrations.

| Ultralytics HUB 🌟 | Weights & Biases | Comet | Neural Magic |
| :---: | :---: | :---: | :---: |
| Streamline YOLO workflows: Label, train, and deploy effortlessly with Ultralytics HUB. Try now! | Track experiments, hyperparameters, and results with Weights & Biases. | Free forever, Comet ML lets you save YOLO models, resume training, and interactively visualize predictions. | Run YOLO inference up to 6x faster with Neural Magic DeepSparse. |

⭐ Ultralytics HUB

Experience seamless AI development with Ultralytics HUB ⭐, the ultimate platform for building, training, and deploying computer vision models. Visualize datasets, train YOLOv3, YOLOv5, and YOLOv8 🚀 models, and deploy them to real-world applications without writing any code. Transform images into actionable insights using our advanced tools and user-friendly Ultralytics App. Start your journey for Free today!

🤔 Why YOLOv3?

YOLOv3 marked a major leap forward in real-time object detection at its release. Key advantages include:

- Improved Accuracy: Enhanced detection of small objects compared to YOLOv2.

- Multi-Scale Predictions: Detects objects at three different scales, boosting performance across varied object sizes.

- Class Prediction: Uses logistic classifiers for object classes, enabling multi-label classification.

- Feature Extractor: Employs a deeper network (Darknet-53) versus the Darknet-19 used in YOLOv2.
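The multi-scale point in the list above can be made concrete with a little arithmetic. The sketch below assumes the design described in the original YOLOv3 paper, which this README does not spell out: detection heads at strides 32, 16, and 8, three anchor boxes per grid cell, and `4 + 1 + num_classes` outputs per anchor. The function names are hypothetical and are not taken from this repository.

```python
def detection_grid_sizes(image_size, strides=(32, 16, 8)):
    """Return the side length of the prediction grid at each detection scale."""
    if any(image_size % stride for stride in strides):
        raise ValueError("image_size must be divisible by every stride")
    return [image_size // stride for stride in strides]


def total_predictions(image_size, anchors_per_scale=3, strides=(32, 16, 8)):
    """Count candidate boxes produced for one square image before filtering."""
    grids = detection_grid_sizes(image_size, strides)
    return sum(anchors_per_scale * grid * grid for grid in grids)


def outputs_per_anchor(num_classes):
    """Four box coordinates, one objectness score, and one score per class."""
    return 4 + 1 + num_classes


print(detection_grid_sizes(416))  # coarse grid for large objects, fine grid for small ones
print(total_predictions(416))     # candidate boxes for a 416x416 input
print(outputs_per_anchor(80))     # values per candidate for an 80-class dataset such as COCO
```

For a 416x416 input this gives grids of 13, 26, and 52 cells per side and 10,647 candidate boxes in total. The finest grid contributes most of them, which is one way to see why the extra scales help with small objects.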

While newer models like YOLOv5 and YOLO11 offer further advancements, YOLOv3 remains a reliable and widely adopted baseline, efficiently implemented in PyTorch by Ultralytics.
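Returning to the class-prediction point in the list above, the difference between independent logistic classifiers and a softmax can be illustrated in a few lines. This is a minimal sketch with made-up logits and a hypothetical 0.5 threshold. It is not code from this repository and only shows why per-class sigmoids permit overlapping labels.

```python
import math


def sigmoid(x):
    """Logistic function: maps one logit to an independent probability."""
    return 1.0 / (1.0 + math.exp(-x))


def softmax(logits):
    """Normalize logits so the resulting probabilities sum to one."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical raw class scores for one predicted box (illustrative values only).
logits = {"person": 3.0, "woman": 2.5, "dog": -4.0}

# Independent logistic classifiers: each class is scored on its own.
independent = {name: sigmoid(value) for name, value in logits.items()}

# Softmax: classes compete, so overlapping labels suppress each other.
exclusive = dict(zip(logits, softmax(list(logits.values()))))

threshold = 0.5  # assumed cut-off for this illustration
multi_label = [name for name, prob in independent.items() if prob >= threshold]
single_label = [name for name, prob in exclusive.items() if prob >= threshold]

print(multi_label)   # labels kept under independent sigmoids
print(single_label)  # labels kept under softmax
```

With these values, both overlapping labels pass the threshold under the sigmoid scoring, while softmax keeps only the strongest one because its probabilities must sum to one.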

☁️ Environments

Get started quickly with our pre-configured environments. Click the icons below for setup details.

🤝 Contribute

We welcome your contributions! Making YOLO models accessible and effective is a community effort. Please see our Contributing Guide to get started. Share your feedback through the Ultralytics Survey. Thank you to all our contributors for making Ultralytics YOLO better!

📜 License

Ultralytics provides two licensing options to meet different needs:

- AGPL-3.0 License: An OSI-approved open-source license ideal for academic research, personal projects, and testing. It promotes open collaboration and knowledge sharing. See the LICENSE file for details.

- Enterprise License: Tailored for commercial applications, this license allows seamless integration of Ultralytics software and AI models into commercial products and services, bypassing the open-source requirements of AGPL-3.0. For commercial use cases, please contact us via Ultralytics Licensing.

📧 Contact

For bug reports and feature requests related to Ultralytics YOLO implementations, please visit GitHub Issues. For general questions, discussions, and community support, join our Discord server!

Owner

- Name: Ultralytics

- Login: ultralytics

- Kind: organization

- Email: hello@ultralytics.com

- Location: United States of America

- Website: https://ultralytics.com

- Twitter: ultralytics

- Repositories: 14

- Profile: https://github.com/ultralytics

Simpler. Smarter. Further.

Citation (CITATION.cff)

    cff-version: 1.2.0
    preferred-citation:
      type: software
      message: If you use YOLOv5, please cite it as below.
      authors:
        - family-names: Jocher
          given-names: Glenn
          orcid: "https://orcid.org/0000-0001-5950-6979"
      title: "YOLOv5 by Ultralytics"
      version: 7.0
      doi: 10.5281/zenodo.3908559
      date-released: 2020-5-29
      license: AGPL-3.0
      url: "https://github.com/ultralytics/yolov5"

GitHub Events

Total

- Create event: 39

- Issues event: 6

- Watch event: 312

- Delete event: 37

- Member event: 1

- Issue comment event: 95

- Push event: 82

- Pull request review event: 2

- Gollum event: 3

- Pull request event: 73

- Fork event: 67

Last Year

- Create event: 39

- Issues event: 6

- Watch event: 312

- Delete event: 37

- Member event: 1

- Issue comment event: 95

- Push event: 82

- Pull request review event: 2

- Gollum event: 3

- Pull request event: 73

- Fork event: 67

Committers

Last synced: 9 months ago

Top Committers

| Name | Email | Commits |
|---|---|---|
| Glenn Jocher | g****r@u****m | 2,667 |
| dependabot[bot] | 4****] | 43 |
| pre-commit-ci[bot] | 6****] | 11 |
| Paula Derrenger | 1****r | 9 |
| Yonghye Kwon | d****e@g****m | 9 |
| Ultralytics Assistant | 1****t | 8 |
| Guillermo García | g****a@r****m | 7 |
| Josh Veitch-Michaelis | j****b@g****m | 5 |
| perry0418 | 3****8 | 4 |
| Ttayu | y****g@g****m | 3 |
| IlyaOvodov | 3****v | 2 |
| Gabriel Bianconi | b****l@g****m | 2 |
| Fatih Baltacı | b****4@g****m | 2 |
| Daniel Suess | d****l@d****e | 2 |
| Marc | m****2@h****m | 2 |
| e96031413 | 3****3 | 2 |
| s-mohaghegh97 | 7****7 | 2 |
| Adrian Boguszewski | a****i@g****m | 1 |
| Chang Lee | c****6@g****m | 1 |
| Dustin Kendall | 4****0 | 1 |
| Falak | f****t | 1 |
| Francisco Reveriano | 4****o | 1 |
| FuLin | l****2@u****u | 1 |
| huntr.dev \| the place to protect open source | a****n@4****m | 1 |
| Thomas Havlik | k****s@l****m | 1 |
| priteshgohil | 4****l | 1 |
| orcund | 5****d | 1 |
| idow09 | i****9@g****m | 1 |
| dependabot-preview[bot] | 2****] | 1 |
| alex-fdias | 7****s | 1 |
| and 21 more... | | |

Issues and Pull Requests

Last synced: 6 months ago

All Time

- Total issues: 196

- Total pull requests: 70

- Average time to close issues: about 2 months

- Average time to close pull requests: 4 days

- Total issue authors: 148

- Total pull request authors: 10

- Average comments per issue: 8.07

- Average comments per pull request: 1.14

- Merged pull requests: 52

- Bot issues: 1

- Bot pull requests: 5

Past Year

- Issues: 5

- Pull requests: 62

- Average time to close issues: 29 minutes

- Average time to close pull requests: 2 days

- Issue authors: 5

- Pull request authors: 6

- Average comments per issue: 1.2

- Average comments per pull request: 1.16

- Merged pull requests: 47

- Bot issues: 1

- Bot pull requests: 5

Top Authors

Issue Authors

- glenn-jocher (15)

- mama110 (8)

- ardeal (4)

- okanlv (4)

- sdfeessdewfs (3)

- caihaunqai (3)

- ghost (3)

- xiao1228 (3)

- violet17 (2)

- ming71 (2)

- jeongjin0 (2)

- jackfaubshner (2)

- Shassk (2)

- YanivO1123 (2)

- persts (2)

Pull Request Authors

- glenn-jocher (127)

- dependabot[bot] (22)

- UltralyticsAssistant (17)

- pderrenger (16)

- sdfeessdewfs1 (2)

- jveitchmichaelis (2)

- guigarfr (2)

- Ttayu (1)

- pre-commit-ci[bot] (1)

- Laughing-q (1)

- QianWei-Code (1)

- nirbenz (1)

- RANJITHROSAN17 (1)

Dependencies

- actions/cache v3 composite

- actions/checkout v3 composite

- actions/setup-python v4 composite

- actions/checkout v3 composite

- github/codeql-action/analyze v2 composite

- github/codeql-action/autobuild v2 composite

- github/codeql-action/init v2 composite

- actions/first-interaction v1 composite

- actions/stale v7 composite

- Pillow >=7.1.2

- PyYAML >=5.3.1

- ipython *

- matplotlib >=3.2.2

- numpy >=1.18.5

- opencv-python >=4.1.1

- pandas >=1.1.4

- psutil *

- requests >=2.23.0

- scipy >=1.4.1

- seaborn >=0.11.0

- tensorboard >=2.4.1

- thop >=0.1.1

- torchvision >=0.8.1

- tqdm >=4.64.0

- actions/checkout v4 composite

- docker/build-push-action v5 composite

- docker/login-action v3 composite

- docker/setup-buildx-action v3 composite

- docker/setup-qemu-action v3 composite

- actions/checkout v4 composite

- nick-invision/retry v2 composite

- pytorch/pytorch 2.0.0-cuda11.7-cudnn8-runtime build

- gcr.io/google-appengine/python latest build

- Flask ==2.3.2

- gunicorn ==19.10.0

- pip ==21.1

- werkzeug >=2.2.3