forte

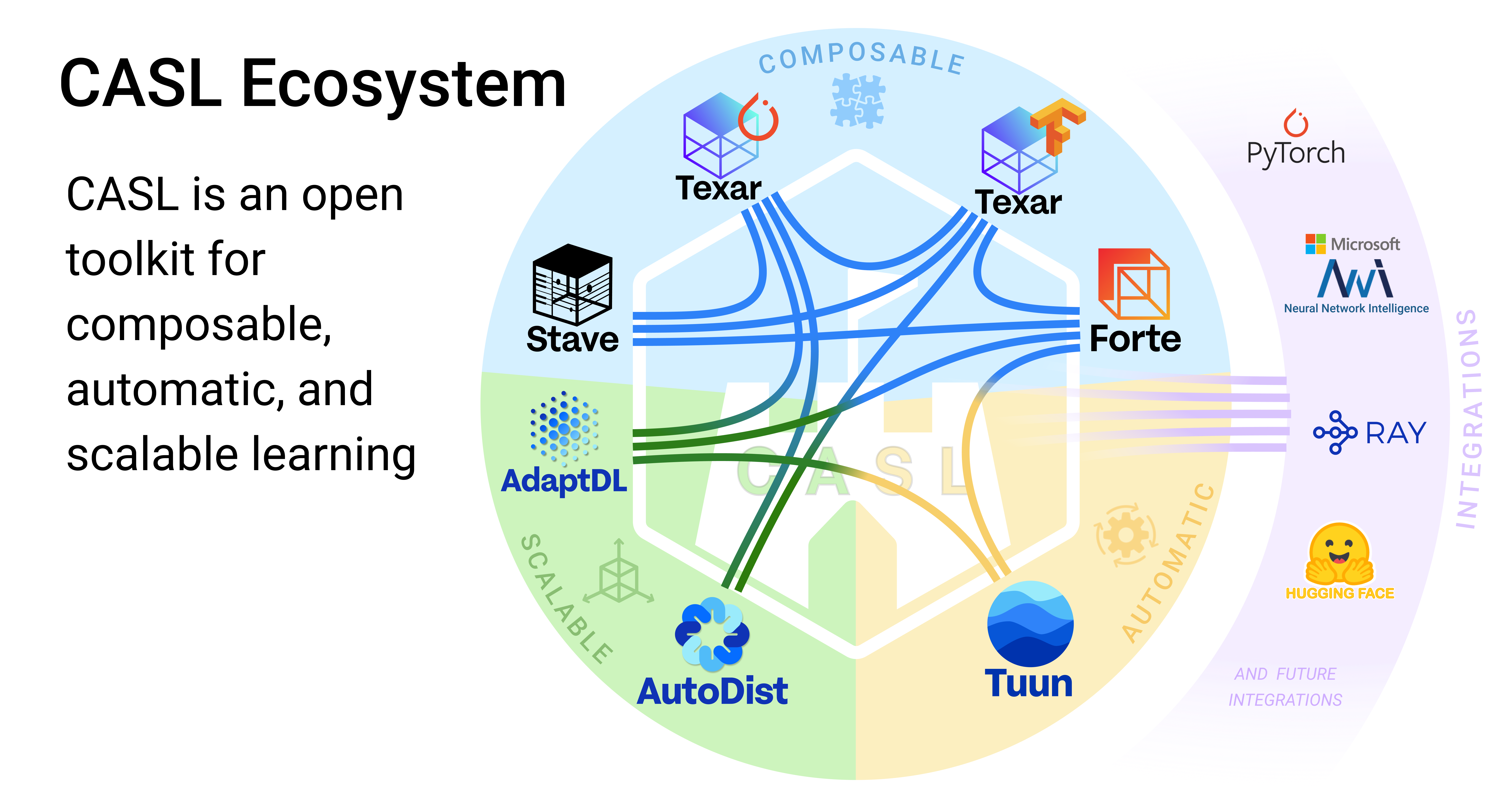

Forte is a flexible and powerful ML workflow builder. This is part of the CASL project: http://casl-project.ai/

Science Score: 41.0%

This score indicates how likely this project is to be science-related based on various indicators:

- ✓ CITATION.cff file: Found CITATION.cff file
- ✓ codemeta.json file: Found codemeta.json file
- ○ .zenodo.json file
- ○ DOI references
- ○ Academic publication links
- ✓ Committers with academic emails: 3 of 53 committers (5.7%) from academic institutions
- ○ Institutional organization owner
- ○ JOSS paper metadata
- ○ Scientific vocabulary similarity: Low similarity (16.9%) to scientific vocabulary


Repository


Statistics

- Stars: 248

- Watchers: 18

- Forks: 59

- Open Issues: 105

- Releases: 11


README.md


Bring good software engineering to your ML solutions, starting from Data!

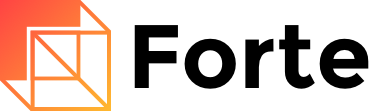

Forte is a data-centric framework designed to engineer complex ML workflows. Forte allows practitioners to build ML components in a composable and modular way. Behind the scenes, it introduces DataPack, a standardized data structure for unstructured data, distilling good software engineering practices such as reusability, extensibility, and flexibility into ML solutions.

DataPacks are the standard data packages in an ML workflow. They can represent the source data (e.g., text, audio, images) and additional markups (e.g., entity mentions, bounding boxes). They are powered by a customizable data schema named "Ontology", which allows domain experts to inject their knowledge into ML engineering processes easily.
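To make "source data plus markups" concrete, here is a minimal pure-Python sketch of a stand-off annotation container. It is not Forte's `DataPack` API: `ToyPack`, `ToySpan`, `ToyToken`, `ToyEntityMention`, and `covered_text` are hypothetical names, and the sketch assumes (as in many stand-off annotation schemes) that markups are stored as character offsets into the untouched source text, with small typed classes playing the role of ontology entries.

```python
from dataclasses import dataclass, field
from typing import List, Type, TypeVar


@dataclass
class ToySpan:
    """Hypothetical base annotation: a [begin, end) character range over the text."""
    begin: int
    end: int


@dataclass
class ToyToken(ToySpan):
    """Hypothetical ontology type for a token; `pos` can be filled in later."""
    pos: str = ""


@dataclass
class ToyEntityMention(ToySpan):
    """Hypothetical ontology type for an entity mention."""
    ner_type: str = ""


A = TypeVar("A", bound=ToySpan)


@dataclass
class ToyPack:
    """Source text plus typed stand-off annotations that point into it."""
    text: str
    annotations: List[ToySpan] = field(default_factory=list)

    def add(self, ann: A) -> A:
        # Reject spans that fall outside the text.
        if not 0 <= ann.begin <= ann.end <= len(self.text):
            raise ValueError(f"span {ann.begin}:{ann.end} is out of range")
        self.annotations.append(ann)
        return ann

    def get(self, kind: Type[A]) -> List[A]:
        # Return annotations of the requested type (or its subtypes) in text order.
        found = [a for a in self.annotations if isinstance(a, kind)]
        return sorted(found, key=lambda a: (a.begin, a.end))

    def covered_text(self, ann: ToySpan) -> str:
        # The markup never copies text; it only points back into it.
        return self.text[ann.begin:ann.end]


pack = ToyPack("Forte was developed at CMU.")
pack.add(ToyToken(0, 5))
pack.add(ToyEntityMention(23, 26, ner_type="ORG"))
print([pack.covered_text(t) for t in pack.get(ToyToken)])          # ['Forte']
print([pack.covered_text(m) for m in pack.get(ToyEntityMention)])  # ['CMU']
print(len(pack.get(ToySpan)))                                       # 2
```

Because the raw text is never modified, independent layers (tokens, entities, tags) can be added by different components without conflicting with each other.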

Installation

To install the released version from PyPI:

```bash
pip install forte
```

To install from source:

```bash
git clone https://github.com/asyml/forte.git
cd forte
pip install .
```

To install a Forte adapter for an existing library:

Install from PyPI:

```bash
# To install other tools, check https://github.com/asyml/forte-wrappers#libraries-and-tools-supported for available tools.
pip install forte.spacy
```

Install from source:

```bash
git clone https://github.com/asyml/forte-wrappers.git
cd forte-wrappers
# Change spacy to another tool. Check https://github.com/asyml/forte-wrappers#libraries-and-tools-supported for available tools.
pip install src/spacy
```

Some components or modules in Forte may require extra dependencies:

- `pip install forte[data_aug]`: Install packages required for data augmentation modules.
- `pip install forte[ir]`: Install packages required for Information Retrieval support.
- `pip install forte[remote]`: Install packages required for pipeline serving functionalities, such as Remote Processor.
- `pip install forte[audio_ext]`: Install packages required for Forte Audio support, such as Audio Reader.
- `pip install forte[stave]`: Install packages required for Stave integration.
- `pip install forte[models]`: Install packages required for NER training, SRL, SRL with the new training system, and `srl_predictor` and `ner_predictor`.
- `pip install forte[test]`: Install packages required for running unit tests.
- `pip install forte[wikipedia]`: Install packages required for reading Wikipedia datasets.
- `pip install forte[nlp]`: Install packages required for additional NLP support, such as `subword_tokenizer` and the Texar encoder.
- `pip install forte[extractor]`: Install packages required for the extractor-based training system: extractor, `train_preprocessor`, tagging trainer, DataPack dataset, types, and converter.
- `pip install forte[payload]`: Install packages required for payload.

Quick Start Guide

Writing NLP pipelines with Forte is easy. The following example creates a simple pipeline that analyzes the sentences, tokens, and part-of-speech tags of a piece of text.

Before we start, make sure the SpaCy wrapper is installed.

```bash
pip install forte.spacy
```

Let's start by writing a simple processor that assigns POS tags to tokens using the good old NLTK library.

```python
import nltk

from forte.processors.base import PackProcessor
from forte.data.data_pack import DataPack
from ft.onto.base_ontology import Token


class NLTKPOSTagger(PackProcessor):
    r"""A wrapper of the NLTK POS tagger."""

    def initialize(self, resources, configs):
        super().initialize(resources, configs)
        # download the NLTK averaged perceptron tagger
        nltk.download("averaged_perceptron_tagger")

    def _process(self, input_pack: DataPack):
        # get a list of token data entries from `input_pack`
        # using the `DataPack.get()` method
        token_texts = [token.text for token in input_pack.get(Token)]

        # use the NLTK POS tagging module to tag the token texts
        taggings = nltk.pos_tag(token_texts)

        # nltk.pos_tag returns (word, tag) pairs; store the tag as the token's `pos`
        for token, tag in zip(input_pack.get(Token), taggings):
            token.pos = tag[1]
```

If we break it down, we will notice there are two main functions. In the `initialize` function, we download and prepare the model. Then, in the `_process` function, we process the `DataPack` object: we take the tokens from it and use the NLTK tagger to create POS tags. The results are stored as the `pos` attribute of the tokens.
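The split between `initialize` and `_process` can be illustrated without Forte installed. The sketch below is a hypothetical stand-in for `PackProcessor`, assuming the pipeline calls `initialize` once before any data arrives and then calls `_process` once per pack. A tiny lookup table replaces the NLTK tagger, so `ToyPack`, `ToyProcessor`, and `LookupPOSTagger` are illustrative names only, not Forte classes.

```python
from typing import Dict, List


class ToyPack:
    """Hypothetical stand-in for a DataPack: just a list of token records."""

    def __init__(self, words: List[str]) -> None:
        self.tokens: List[Dict[str, str]] = [{"text": w, "pos": ""} for w in words]


class ToyProcessor:
    """Hypothetical base class mimicking the two-phase processor contract."""

    def __init__(self) -> None:
        self.initialized = False

    def initialize(self, resources: dict, configs: dict) -> None:
        # One-time setup, e.g. downloading or loading a model.
        self.initialized = True

    def process(self, pack: ToyPack) -> None:
        # Per-pack work; refuses to run before setup has happened.
        if not self.initialized:
            raise RuntimeError("initialize() must be called before process()")
        self._process(pack)

    def _process(self, pack: ToyPack) -> None:
        raise NotImplementedError


class LookupPOSTagger(ToyProcessor):
    """Assigns POS tags from a small lookup table instead of NLTK."""

    def initialize(self, resources: dict, configs: dict) -> None:
        super().initialize(resources, configs)
        # Stands in for nltk.download(...): the "model" is loaded once.
        self.table = {"Forte": "NNP", "is": "VBZ", "a": "DT", "framework": "NN"}

    def _process(self, pack: ToyPack) -> None:
        # Read tokens from the pack and write the tags back onto them.
        for token in pack.tokens:
            token["pos"] = self.table.get(token["text"], "UNK")


tagger = LookupPOSTagger()
tagger.initialize(resources={}, configs={})
for p in [ToyPack(["Forte", "is", "a", "framework"]), ToyPack(["Forte", "rocks"])]:
    tagger.process(p)  # the same loaded "model" serves every pack
    print([t["pos"] for t in p.tokens])
```

Keeping expensive setup in `initialize` means the model is prepared once and reused for every pack the pipeline delivers.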

Before we go into the details of the implementation, let's try it in a full pipeline.

```python
from forte import Pipeline
from forte.data.data_pack import DataPack
from forte.data.readers import StringReader
from fortex.spacy import SpacyProcessor

pipeline: Pipeline = Pipeline[DataPack]()
pipeline.set_reader(StringReader())
# SpaCy splits sentences and tokenizes; NLTKPOSTagger then adds POS tags
pipeline.add(SpacyProcessor(), {"processors": ["sentence", "tokenize"]})
pipeline.add(NLTKPOSTagger())
```

Here we have successfully created a pipeline with a few components:

* a `StringReader` that reads data from a string;
* a `SpacyProcessor` that calls SpaCy to split the text into sentences and tokenize them;
* and, finally, the brand new `NLTKPOSTagger` we just implemented.

Let's see it in action!

```python
input_string = "Forte is a data-centric ML framework."

for pack in pipeline.initialize().process_dataset(input_string):
    for sentence in pack.get("ft.onto.base_ontology.Sentence"):
        print("The sentence is: ", sentence.text)
        print("The POS tags of the tokens are:")
        # retrieve only the tokens covered by the current sentence
        for token in pack.get(Token, sentence):
            print(f" {token.text}[{token.pos}]", end=" ")
        print()
```

The POS-tagged tokens in the output look like this:

```
Forte[NNP] is[VBZ] a[DT] data[NN] -[:] centric[JJ] ML[NNP] framework[NN] .[.]
```

We have successfully created a simple pipeline. In a nutshell, DataPacks are the standard packages "flowing" through the pipeline. They are created by the reader and then passed along the pipeline.

Each processor, such as our `NLTKPOSTagger`, interfaces directly with DataPacks and does not need to worry about the other parts of the pipeline, making the engineering process more modular. In this example pipeline, `SpacyProcessor` creates the `Sentence` and `Token` entries, and then the `NLTKPOSTagger` we implemented adds part-of-speech tags to the tokens.
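The same modularity can be sketched in plain Python. This is not Forte's `Pipeline` class: `ToyPipeline`, `string_reader`, `split_sentences`, `tokenize`, and `tag_pos` are hypothetical stand-ins for `StringReader`, `SpacyProcessor`, and `NLTKPOSTagger`, and the naive splitting rules are assumptions made only to keep the example self-contained. The point it illustrates is that each component reads from and writes to the pack it receives, and nothing else.

```python
from typing import Any, Callable, Dict, Iterator, List

Pack = Dict[str, Any]               # text plus the annotation layers added so far
Processor = Callable[[Pack], None]  # a processor mutates the pack in place


def string_reader(text: str) -> Iterator[Pack]:
    """Reader: wraps a raw string in a new pack."""
    yield {"text": text}


def split_sentences(pack: Pack) -> None:
    """Naive sentence splitter on '.' (stand-in for SpaCy)."""
    parts = [s.strip() for s in pack["text"].split(".")]
    pack["sentences"] = [s for s in parts if s]


def tokenize(pack: Pack) -> None:
    """Whitespace tokenizer; reads 'sentences', writes 'tokens'."""
    pack["tokens"] = [s.split() for s in pack["sentences"]]


def tag_pos(pack: Pack) -> None:
    """Lookup-table tagger; reads 'tokens', writes 'pos'."""
    table = {"Forte": "NNP", "is": "VBZ", "a": "DT", "ML": "NNP", "framework": "NN"}
    pack["pos"] = [[table.get(t, "UNK") for t in sent] for sent in pack["tokens"]]


class ToyPipeline:
    def __init__(self, reader: Callable[[str], Iterator[Pack]]) -> None:
        self.reader = reader
        self.processors: List[Processor] = []

    def add(self, processor: Processor) -> "ToyPipeline":
        self.processors.append(processor)
        return self

    def process_dataset(self, data: str) -> Iterator[Pack]:
        # Each pack flows through every processor in the order they were added.
        for pack in self.reader(data):
            for processor in self.processors:
                processor(pack)
            yield pack


pipeline = ToyPipeline(string_reader).add(split_sentences).add(tokenize).add(tag_pos)
for pack in pipeline.process_dataset("Forte is a framework. Forte is modular."):
    for words, tags in zip(pack["tokens"], pack["pos"]):
        print(list(zip(words, tags)))
# [('Forte', 'NNP'), ('is', 'VBZ'), ('a', 'DT'), ('framework', 'NN')]
# [('Forte', 'NNP'), ('is', 'VBZ'), ('modular', 'UNK')]
```

Replacing `tag_pos` with a different tagger changes only the `pos` layer; the reader, splitter, and tokenizer are untouched.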

To learn more about the details, check out our documentation! The classes used in this guide can also be found in this repository or the Forte Wrappers repository.

And There's More

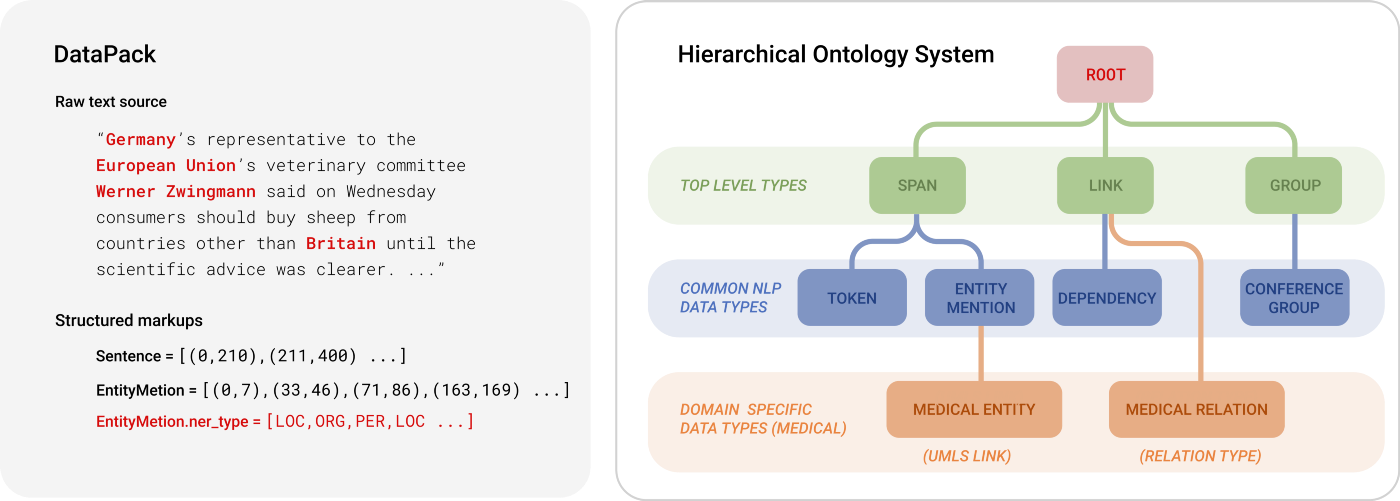

The data-centric abstraction of Forte opens the door to many other opportunities. Not only does Forte allow engineers to develop reusable components easily, it also provides a simple way to develop composable ML modules. For example, Forte allows us to:

* create composable ML solutions with reusable models and processing logic;
* easily interface with a great collection of third-party toolkits built by the community;
* build plug-and-play data augmentation tools.

To learn more about these, you can visit:

* Examples
* Documentation
* Currently we are working on some interesting tutorials; stay tuned for a full set of documentation on how to do NLP with Forte!

Contributing

Forte was originally developed at CMU and is actively developed by Petuum in collaboration with other institutes. This project is part of the CASL Open Source family.

If you are interested in making enhancements to Forte, please first go over our Code of Conduct and Contribution Guideline.


Owner

- Name: ASYML

- Login: asyml

- Kind: organization

- Website: asyml.io

- Repositories: 9

- Profile: https://github.com/asyml

Machine Learning as Machine Assembly, part of the CASL project https://www.casl-project.ai/

Citation (citation)

To cite Forte, use the following demo paper at EMNLP 2020.

Zhengzhong Liu, Guanxiong Ding, Avinash Bukkittu, Mansi Gupta, Pengzhi Gao, Atif Ahmed, Shikun Zhang, Xin Gao, Swapnil Singhavi, Linwei Li, Wei Wei, Zecong Hu, Haoran Shi, Xiaodan Liang, Teruko Mitamura, Eric P. Xing, and Zhiting Hu. 2020. A Data-Centric Framework for Composable NLP Workflows. In EMNLP 2020.

```bibtex
@inproceedings{Liu2020,
  author = {Liu, Zhengzhong and Ding, Guanxiong and Bukkittu, Avinash and Gupta, Mansi and Gao, Pengzhi and Ahmed, Atif and Zhang, Shikun and Gao, Xin and Singhavi, Swapnil and Li, Linwei and Wei, Wei and Hu, Zecong and Shi, Haoran and Liang, Xiaodan and Mitamura, Teruko and Xing, Eric P. and Hu, Zhiting},
  booktitle = {EMNLP 2020},
  title = {{A Data-Centric Framework for Composable NLP Workflows}},
  year = {2020}
}
```

GitHub Events

Total

- Watch event: 8

Last Year

- Watch event: 8

Committers

Last synced: 9 months ago

Top Committers

| Name | Email | Commits |
|---|---|---|
| Hector | h****r@g****m | 283 |
| Wei Wei | w****i@p****m | 98 |
| hector.liu | h****u@p****m | 97 |
| yuhaoLucas | 4****3@q****m | 66 |
| AvinashBukkittu | a****1@g****m | 46 |
| feipenghe | h****e@g****m | 41 |
| mylibrar | 5****r | 39 |
| Pengzhi Gao | p****o@p****m | 35 |
| qinzzz | w****7@1****m | 35 |
| Allen Shi | h****i@p****m | 33 |
| Jiaqiang Ruan | j****6@g****m | 26 |
| Mansi Gupta | m****0@g****m | 25 |
| jrxk | x****f@g****m | 16 |
| Pushkar-Bhuse | 4****e | 15 |
| Suqi Sun | s****n@m****l | 12 |
| Zecong Hu | z****u@p****m | 11 |
| wanglec | 4****c | 11 |
| Piyush Yadav | p****y@g****m | 10 |
| Mansi Gupta | m****a@p****m | 10 |
| qinzzz | 3****z | 8 |
| YanwenLin | y****l@a****u | 8 |
| jennyzhang-petuum | 7****m | 8 |
| Zhanyuan Zhang | 3****b | 7 |
| jieralice13 | j****n@g****m | 7 |
| feipenghe | h****e@u****u | 7 |
| Atif Ahmed | a****3@g****m | 7 |
| Yanwen(Jason) Lin | 3****l | 6 |
| cz9779 | c****9@o****m | 6 |
| J007X | 9****X | 5 |
| jzpang | j****3@g****m | 4 |
| and 23 more... | | |


Issues and Pull Requests

Last synced: 6 months ago

All Time

- Total issues: 55

- Total pull requests: 49

- Average time to close issues: about 1 month

- Average time to close pull requests: about 2 months

- Total issue authors: 10

- Total pull request authors: 13

- Average comments per issue: 0.8

- Average comments per pull request: 1.98

- Merged pull requests: 33

- Bot issues: 0

- Bot pull requests: 3

Past Year

- Issues: 0

- Pull requests: 0

- Average time to close issues: N/A

- Average time to close pull requests: N/A

- Issue authors: 0

- Pull request authors: 0

- Average comments per issue: 0

- Average comments per pull request: 0

- Merged pull requests: 0

- Bot issues: 0

- Bot pull requests: 0

Top Authors

Issue Authors

- hepengfe (18)

- mylibrar (12)

- hunterhector (11)

- J007X (6)

- Pushkar-Bhuse (3)

- nikhilranjan7 (1)

- qinzzz (1)

- giveme2min (1)

- bhaskar2443053 (1)

- wanglec (1)

Pull Request Authors

- hepengfe (15)

- hunterhector (8)

- J007X (6)

- Pushkar-Bhuse (6)

- dependabot[bot] (5)

- mylibrar (4)

- batermj (2)

- Piyush13y (1)

- qinzzz (1)

- Xuezhi-Liang (1)

- nikhilranjan7 (1)

- TrellixVulnTeam (1)

- bhaskar2443053 (1)


Packages

- Total packages: 1


Total downloads:

- pypi 550 last-month

- Total dependent packages: 0

- Total dependent repositories: 6

- Total versions: 13

- Total maintainers: 1

pypi.org: forte

Forte is an extensible framework for building composable and modularized NLP workflows.

- Homepage: https://github.com/asyml/forte

- Documentation: https://forte.readthedocs.io/

- License: Apache License Version 2.0


Latest release: 0.2.0

published almost 4 years ago


Dependencies

- actions/cache v2 composite

- actions/cache v3 composite

- actions/checkout v3 composite

- actions/checkout v2 composite

- actions/setup-python v4 composite

- actions/setup-python v2 composite

- mxschmitt/action-tmate v3 composite

- peter-evans/repository-dispatch v1 composite

- actions/checkout v2 composite

- actions/setup-python v2 composite

- pypa/gh-action-pypi-publish release/v1 composite

- actions/checkout v2 composite

- actions/setup-python v2 composite

- pypa/gh-action-pypi-publish release/v1 composite

- Pygments >=2.1.1

- asyml_utilities *

- elasticsearch ==7.5.1

- faiss-cpu >=1.6.1

- fastapi ==0.65.2

- funcsigs *

- jinja2 <=3.0.3

- jsonpickle >=1.4

- jsonschema >=3.0.2

- mypy_extensions *

- myst-parser >=0.14.0

- nbautoexport ==0.4.0

- nbsphinx ==0.8.8

- nltk ==3.6.6

- pyyaml >=5.4

- sortedcontainers >=2.1.0

- sphinx <4

- sphinx-comments *

- sphinx-rtd-theme >=0.5.0

- sphinx_autodoc_typehints *

- sphinxcontrib-spelling *

- testbook *

- texar-pytorch >=0.1.2

- transformers >=3.1

- typed_ast >=1.5.0

- typed_astunparse *

- typing >=3.7.4

- typing-inspect >=0.6.0

- uvicorn ==0.14.0

- sounddevice >=0.4.4

- soundfile >=0.10.3

- transformers >=4.15.0

- h5py *

- munkres *

- nltk *

- numpy *

- pyxdameraulevenshtein *

- scikit-learn *

- forte.tweepy *

- forte.vader *

- torch >=1.5.0

- asyml-utilities *

- dataclasses *

- enum34 *

- funcsigs ==1.0.2

- importlib-resources ==5.1.4

- jsonpickle >=1.4

- jsonschema >=3.0.2

- mypy_extensions *

- numpy >=1.16

- packaging *

- pyyaml >=5.4

- smart-open ==1.8.4

- sortedcontainers >=2.1.0

- typed_ast >=1.5.0

- typed_astunparse *

- typing-inspect >=0.6.0

- asyml-utilities *

- dataclasses *

- enum34 ==1.1.10

- funcsigs >=1.0.2

- importlib-resources >=5.1.4

- jsonpickle >=1.4

- jsonschema >=3.0.2

- numpy >=1.16.6

- pyyaml >=5.4

- smart-open >=1.8.4

- sortedcontainers >=2.1.0

- typed_ast >=1.5.0

- typed_astunparse >=2.1.4

- typing >=3.7.4

- typing-inspect >=0.6.0