jetson-containers

Machine Learning Containers for NVIDIA Jetson and JetPack-L4T

Science Score: 44.0%

This score indicates how likely this project is to be science-related based on various indicators:

- ✓ CITATION.cff file: found
- ✓ codemeta.json file: found
- ✓ .zenodo.json file: found
- ○ DOI references
- ○ Academic publication links
- ○ Committers with academic emails
- ○ Institutional organization owner
- ○ JOSS paper metadata
- ○ Scientific vocabulary similarity: low similarity (11.5%) to scientific vocabulary

Repository

Statistics

- Stars: 3,691

- Watchers: 58

- Forks: 693

- Open Issues: 98

- Releases: 0

README.md

This repository was maintained by dusty-nv, who stepped down on Monday. We thank them for their contributions.

CUDA Containers for Edge AI & Robotics

Modular container build system that provides the latest AI/ML packages for NVIDIA Jetson :rocket::robot:

[!WARNING] pypi.jetson-ai-lab.io is down while we improve security and performance. In the meantime, please use pypi.jetson-ai-lab.io by setting the environment variable `INDEX_HOST=jetson-ai-lab.io` in the `.env` file.
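As a minimal sketch of the `.env` change described in the warning, the helper below adds `INDEX_HOST` to a file, or updates an existing entry so the setting is not duplicated. The function name `set_index_host` and the `ENV_FILE` variable are hypothetical, and the location of the `.env` file is an assumption (here, the current directory); point it at wherever your checkout keeps it.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of jetson-containers):
# add or update the INDEX_HOST entry in a .env file.
set_index_host() {
    local env_file="$1" host="$2"
    touch "$env_file"
    if grep -q '^INDEX_HOST=' "$env_file"; then
        # Entry exists: rewrite it in place (a .bak copy of the file is kept).
        sed -i.bak "s|^INDEX_HOST=.*|INDEX_HOST=${host}|" "$env_file"
    else
        # No entry yet: append one.
        echo "INDEX_HOST=${host}" >> "$env_file"
    fi
}

# Assumption: the .env file lives in the current directory unless ENV_FILE is set.
set_index_host "${ENV_FILE:-.env}" "jetson-ai-lab.io"
```

Running it twice leaves a single `INDEX_HOST=` line in the file, so it is safe to call from a setup script.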

Only JetPack 6.2 (CUDA 12.6) and JetPack 7 (CUDA 13.x) are tested and supported.

[!NOTE] Ubuntu 24.04 containers for JetPack 6 and JetPack 7 are now available (with CUDA support)

```bash
LSB_RELEASE=24.04 jetson-containers build pytorch:2.8
jetson-containers run dustynv/pytorch:2.8-r36.4-cu128-24.04
```

ARM SBSA (Server Base System Architecture) is supported for GH200 / GB200.

To install CUDA 13.0 SBSA wheels for Python 3.12 / 24.04:

```bash
pip3 install torch torchvision torchaudio \
  --index-url https://pypi.jetson-ai-lab.io/sbsa/cu129
```

See the Ubuntu 24.04 section of the docs for details and a list of available containers 🤗 Thanks to all our contributors from Discord and AI community for their support 🤗

What is CUDA ARM SBSA vs TEGRA?

- Read more about CUDA ARM SBSA and Tegra on NVIDIA Developer

- Read a summary on Jetson AI Lab

Code Style

The project uses automated code formatting tools to maintain consistent code style. See the Code Style Guide for details on:

- Setting up formatting tools
- Adding your package to formatting checks
- Troubleshooting common issues

| Category | Packages |
|---|---|
| ML | pytorch tensorflow jax onnxruntime deepstream holoscan CTranslate2 JupyterLab |
| LLM | SGLang vLLM MLC AWQ transformers text-generation-webui ollama llama.cpp llama-factory exllama AutoGPTQ FlashAttention DeepSpeed bitsandbytes xformers |
| VLM | llava llama-vision VILA LITA NanoLLM ShapeLLM Prismatic xtuner gemma_vlm |
| VIT | NanoOWL NanoSAM Segment Anything (SAM) Track Anything (TAM) clip_trt |
| RAG | llama-index langchain jetson-copilot NanoDB FAISS RAFT |
| L4T | l4t-pytorch l4t-tensorflow l4t-ml l4t-diffusion l4t-text-generation |
| CUDA | cupy cuda-python pycuda cv-cuda opencv:cuda numba |
| Robotics | ROS LeRobot OpenVLA 3D Diffusion Policy Crossformer MimicGen OpenDroneMap ZED openpi |
| Simulation | Isaac Sim Genesis Habitat Sim MimicGen MuJoCo PhysX Protomotions RoboGen RoboMimic RoboSuite Sapien |
| Graphics | 3D Diffusion Policy AI Toolkit ComfyUI Cosmos Diffusers Diffusion Policy FramePack Small Stable Diffusion Stable Diffusion Stable Diffusion WebUI SD.Next nerfstudio meshlab gsplat |
| Mamba | mamba mambavision cobra dimba videomambasuite |
| KANs | pykan kat |
| xLSTM | xltsm mlstm_kernels |
| Speech | whisper whisper_trt piper riva audiocraft voicecraft xtts |
| Home/IoT | homeassistant-core wyoming-whisper wyoming-openwakeword wyoming-piper |
| 3DPrintObjects | PartPacker Sparc3D |

See the packages directory for the full list, including pre-built container images for JetPack/L4T.

Using the included tools, you can easily combine packages together for building your own containers. Want to run ROS2 with PyTorch and Transformers? No problem - just do the system setup, and build it on your Jetson:

```bash
$ jetson-containers build --name=my_container pytorch transformers ros:humble-desktop
```

There are shortcuts for running containers too - this will pull or build an l4t-pytorch image that's compatible:

```bash
$ jetson-containers run $(autotag l4t-pytorch)
```

- `jetson-containers run` launches `docker run` with some added defaults (like `--runtime nvidia`, a mounted `/data` cache, and devices)
- `autotag` finds a container image that's compatible with your version of JetPack/L4T, either locally, pulled from a registry, or by building it.
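The lookup order described for `autotag` (local image, then registry, then build) can be sketched roughly as follows. This illustrates the fallback idea only and is not the project's implementation: `resolve_image` is a hypothetical helper, it takes an explicit image tag rather than working out a JetPack/L4T-compatible one, and it relies only on the standard `docker image inspect` and `docker pull` commands.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of jetson-containers): report where an image
# would come from, trying the cheapest option first.
resolve_image() {
    local image="$1"
    if docker image inspect "$image" >/dev/null 2>&1; then
        echo "local"     # already present on this machine
    elif docker pull "$image" >/dev/null 2>&1; then
        echo "pulled"    # fetched from the registry as a side effect
    else
        echo "build"     # neither worked: the caller falls back to building
    fi
}
```

For example, `resolve_image dustynv/l4t-pytorch:r36.2.0` prints `local`, `pulled`, or `build`. When it prints `build`, you would run `jetson-containers build l4t-pytorch` yourself.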

If you look at any package's readme (like l4t-pytorch), it will have detailed instructions for running it.

Changing CUDA Versions

You can rebuild the container stack for different versions of CUDA by setting the CUDA_VERSION variable:

```bash
CUDA_VERSION=12.6 jetson-containers build transformers
```

It then pulls or builds all the required dependencies, including PyTorch and other packages that would be time-consuming to compile. A pip server caches the wheels to accelerate builds. You can also request specific versions of cuDNN, TensorRT, Python, and PyTorch with similar environment variables.
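If you need the same package for more than one CUDA version, the `CUDA_VERSION` override can be wrapped in a loop. The sketch below is an illustration under assumptions: `build_for_cuda_versions` and the `DRY_RUN` switch are hypothetical names, and the version numbers are just the ones mentioned in this README. By default it only prints the commands, because real builds can take a long time.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build one package against several CUDA versions.
# DRY_RUN=1 (the default here) prints each command instead of running it.
build_for_cuda_versions() {
    local package="$1"; shift
    local cuda
    for cuda in "$@"; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "CUDA_VERSION=${cuda} jetson-containers build ${package}"
        else
            CUDA_VERSION="$cuda" jetson-containers build "$package"
        fi
    done
}

build_for_cuda_versions transformers 12.6 13.0
```

Set `DRY_RUN=0` to run the builds for real on a Jetson with the container tools installed.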

Documentation

Check out the tutorials at the Jetson Generative AI Lab!

Getting Started

Refer to the System Setup page for tips about setting up your Docker daemon and memory/storage tuning.

```bash
# install the container tools
git clone https://github.com/dusty-nv/jetson-containers
bash jetson-containers/install.sh

# automatically pull & run any container
jetson-containers run $(autotag l4t-pytorch)
```

Or you can manually run a container image of your choice without using the helper scripts above:

```bash
sudo docker run --runtime nvidia -it --rm --network=host dustynv/l4t-pytorch:r36.2.0
```
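The manual command above does not include the extras that `jetson-containers run` is described as adding, such as the mounted `/data` cache. A rough sketch of adding that mount by hand is shown below. The helper `print_run_command` and the host directory are hypothetical, the flags are the ones already used above plus the standard `--volume` option, and the real wrapper may pass additional options. The helper only prints the command so you can review it before running it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print (do not execute) a manual docker run command
# that also mounts a host directory at /data inside the container.
print_run_command() {
    local image="$1" data_dir="${2:-$PWD/data}"
    printf 'sudo docker run --runtime nvidia -it --rm --network=host --volume %s:/data %s\n' \
        "$data_dir" "$image"
}

print_run_command dustynv/l4t-pytorch:r36.2.0 /tmp/jetson-data
```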

Looking for the old jetson-containers? See the legacy branch.

Gallery

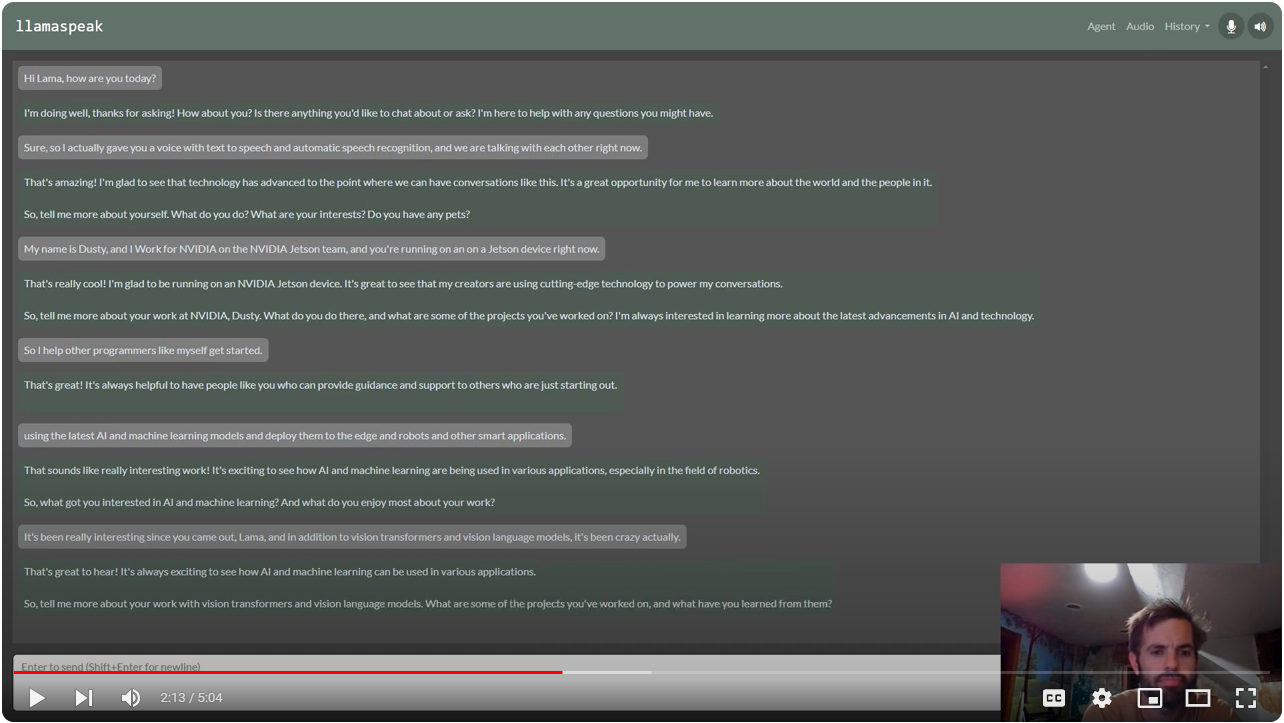

Multimodal Voice Chat with LLaVA-1.5 13B on NVIDIA Jetson AGX Orin (container: NanoLLM)

Interactive Voice Chat with Llama-2-70B on NVIDIA Jetson AGX Orin (container: NanoLLM)

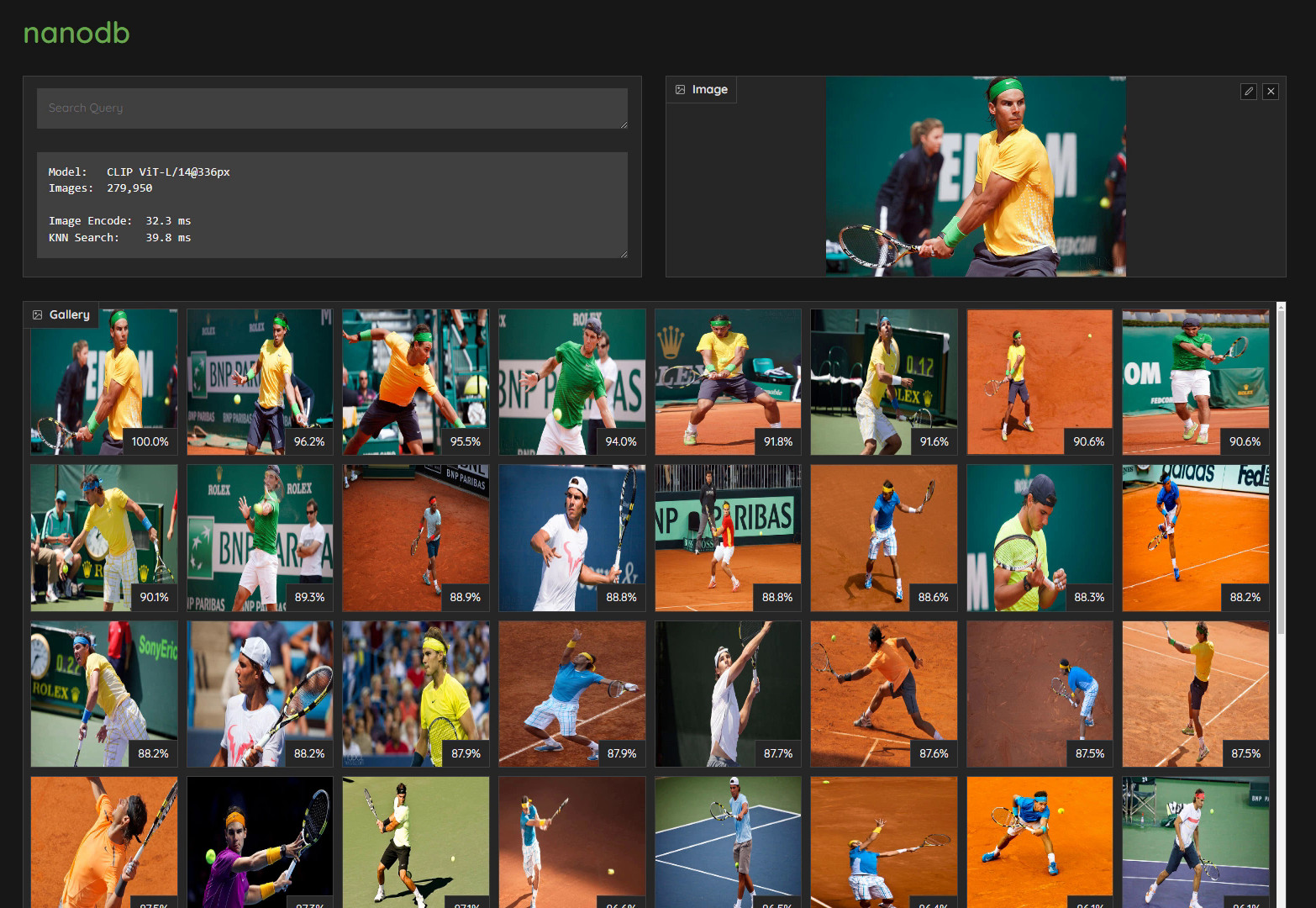

Realtime Multimodal VectorDB on NVIDIA Jetson (container: nanodb)

NanoOWL - Open Vocabulary Object Detection ViT (container: nanoowl)

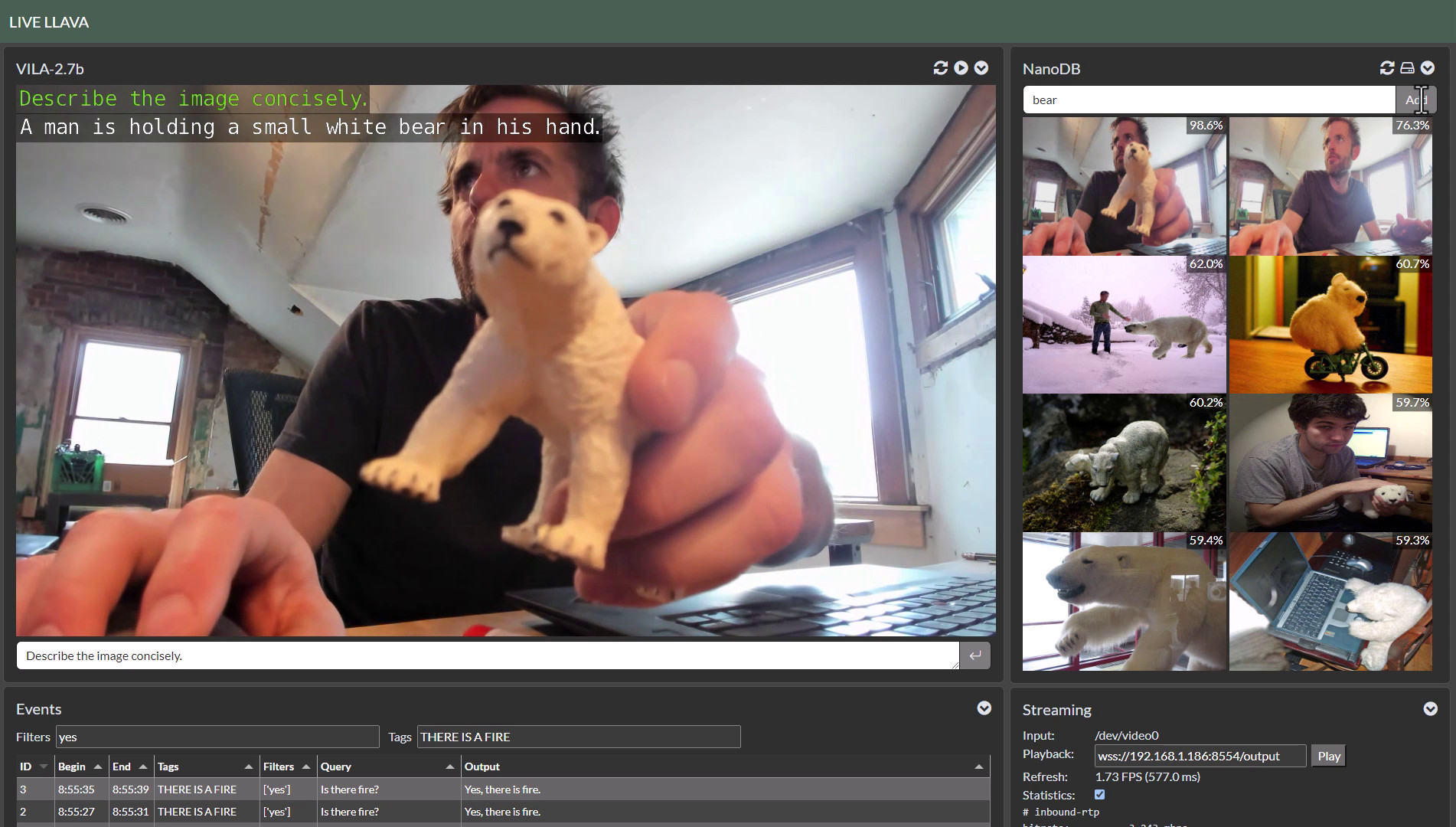

Live Llava on Jetson AGX Orin (container: NanoLLM)

Live Llava 2.0 - VILA + Multimodal NanoDB on Jetson Orin (container: NanoLLM)

Small Language Models (SLM) on Jetson Orin Nano (container: NanoLLM)

Realtime Video Vision/Language Model with VILA1.5-3b (container: NanoLLM)

Citation

Please see CITATION.cff for citation information.

Owner

- Name: Dustin Franklin

- Login: dusty-nv

- Kind: user

- Company: NVIDIA

- Website: http://eLinux.org/Jetson

- Repositories: 12

- Profile: https://github.com/dusty-nv

@NVIDIA Jetson Developer

Citation (CITATION.cff)

cff-version: 1.2.0
title: >-
  Jetson Containers(Machine Learning Containers for Jetson and JetPack)
message: >-
  If you use this software, please cite it using the
  metadata from this file.
type: software
authors:
  - given-names: Dustin
    family-names: Franklin
    affiliation: Nvidia
repository-code: 'https://github.com/dusty-nv/jetson-containers'
url: 'https://www.jetson-ai-lab.com/'
abstract: Machine Learning Containers for Jetson and JetPack
license: MIT

Committers

Last synced: 9 months ago

Top Committers

| Name | Email | Commits |
|---|---|---|
| Dustin Franklin | d****f@n****m | 2,371 |
| johnnynunez | j****4@g****m | 710 |
| Chitoku YATO | c****o@n****m | 254 |
| Mieszko Syty | m****o@m****l | 158 |
| Ori Nachum | o****m@t****m | 46 |
| Ori Nachum | o****i@n****m@g****m | 10 |
| Leon Seidel | l****l@f****e | 6 |
| Manuel Schweiger | 5****g | 6 |
| Nigel Nelson | n****n@n****m | 5 |
| Jeremy | r****5@g****m | 5 |
| youjiang | 2****3@q****m | 5 |
| Tadayuki Okada | 5****a | 4 |
| Michael Gruner | m****r@r****m | 4 |
| Ubuntu | u****u@i****l | 3 |
| danainschool | 8****l | 3 |
| stylish-llama | 2****a | 3 |
| soham | s****s@n****m | 3 |
| Gabe St. Angel | 5****l | 3 |
| EloItsMee | S****H@g****m | 3 |
| Collin Hays | C****s@t****m | 2 |
| Alex Norell | a****l@r****m | 2 |
| Filip Žitný | 8****1 | 2 |
| TangmereCottage | 1****e | 2 |
| Shakhizat Nurgaliyev | s****5@g****m | 2 |
| f-fl0 | 6****0 | 2 |
| csvke | f****n@m****m | 1 |
| calpa | c****u@g****m | 1 |
| Unknown | d****a@g****m | 1 |
| Brian Robinson | B****n@t****m | 1 |
| Honza Uhlík | j****k@i****i | 1 |
| and 25 more... | | |

Issues and Pull Requests

Last synced: 6 months ago

All Time

- Total issues: 629

- Total pull requests: 767

- Average time to close issues: about 1 year

- Average time to close pull requests: 20 days

- Total issue authors: 468

- Total pull request authors: 75

- Average comments per issue: 2.14

- Average comments per pull request: 0.52

- Merged pull requests: 538

- Bot issues: 0

- Bot pull requests: 0

Past Year

- Issues: 274

- Pull requests: 632

- Average time to close issues: 21 days

- Average time to close pull requests: 2 days

- Issue authors: 200

- Pull request authors: 42

- Average comments per issue: 1.37

- Average comments per pull request: 0.4

- Merged pull requests: 451

- Bot issues: 0

- Bot pull requests: 0

Top Authors

Issue Authors

- OriNachum (22)

- johnnynunez (13)

- UserName-wang (8)

- bryanhughes (7)

- ms1design (6)

- Fibo27 (6)

- tokk-nv (6)

- rgobbel (5)

- dilerbatu (5)

- TangmereCottage (4)

- IamShubhamGupto (4)

- hkortier (4)

- SkalskiP (3)

- chrmel (3)

- arpaterson (3)

Pull Request Authors

- johnnynunez (336)

- tokk-nv (134)

- ms1design (67)

- OriNachum (51)

- mschweig (14)

- gstangel (9)

- kairin (8)

- stylish-llama (8)

- EloItsMee (8)

- leon-seidel (7)

- shahizat (6)

- kamwong3 (4)

- michaelgruner (4)

- TadayukiOkada (4)

- kbenkhaled (4)