shapiq

Shapley Interactions and Shapley Values for Machine Learning

Science Score: 36.0%

This score indicates how likely this project is to be science-related based on various indicators:

- ○ CITATION.cff file
- ✓ codemeta.json file: found codemeta.json file
- ✓ .zenodo.json file: found .zenodo.json file
- ○ DOI references
- ✓ Academic publication links: links to arxiv.org
- ○ Academic email domains
- ○ Institutional organization owner
- ○ JOSS paper metadata
- ○ Scientific vocabulary similarity: low similarity (16.7%) to scientific vocabulary


Repository

Shapley Interactions and Shapley Values for Machine Learning

Basic Info

- Host: GitHub

- Owner: mmschlk

- License: mit

- Language: Python

- Default Branch: main

- Homepage: https://shapiq.readthedocs.io

- Size: 315 MB

Statistics

- Stars: 608

- Watchers: 11

- Forks: 38

- Open Issues: 35

- Releases: 15


Metadata Files

README.md

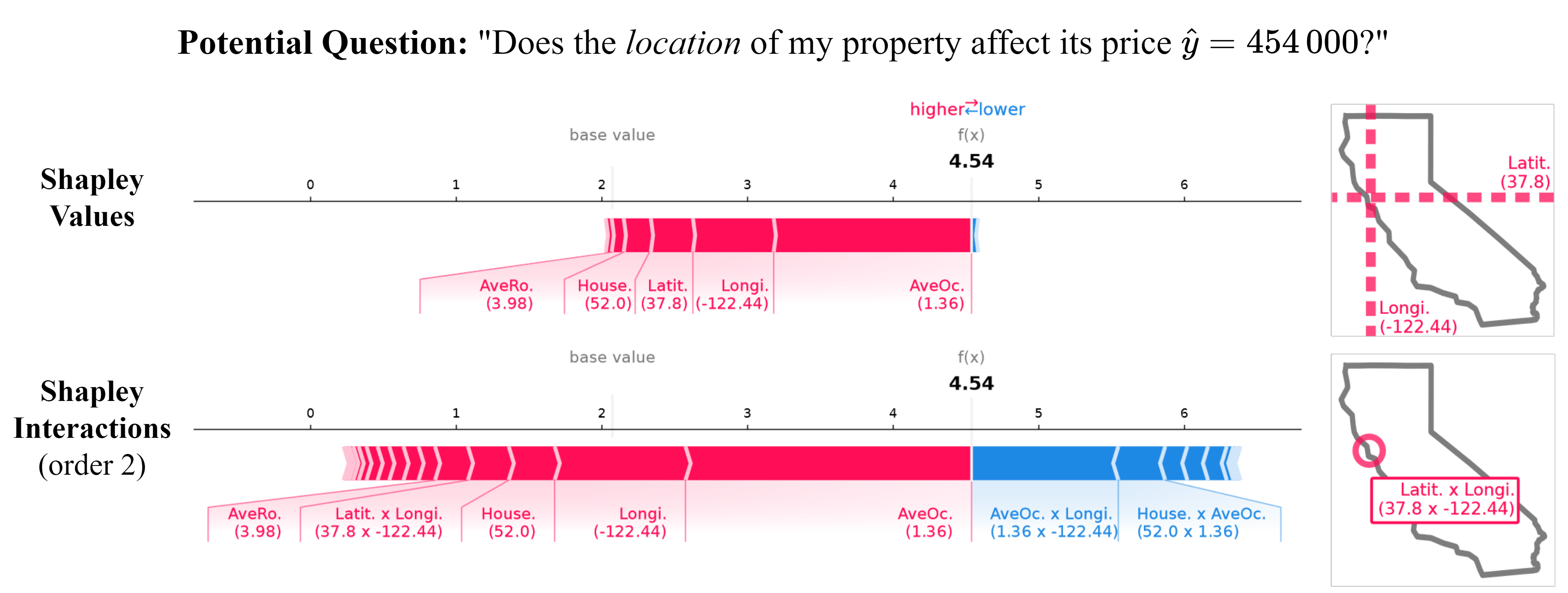

shapiq: Shapley Interactions for Machine Learning

An interaction may speak more than a thousand main effects.

Shapley Interaction Quantification (shapiq) is a Python package for (1) approximating any-order Shapley interactions, (2) benchmarking game-theoretical algorithms for machine learning, and (3) explaining feature interactions of model predictions. shapiq extends the well-known shap package and is aimed both at researchers working on game theory in machine learning and at end users explaining models. SHAP-IQ extends individual Shapley values by quantifying the synergy effects between entities (called players in game-theoretic jargon), such as explanatory features, data points, or weak learners in ensemble models. Synergies between players give a more comprehensive view of machine learning models.
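To make the idea of synergy concrete, here is a minimal from-scratch sketch (not shapiq's implementation) that computes exact Shapley values and the pairwise Shapley Interaction Index (SII) for a hypothetical three-player toy game in which players 0 and 1 earn a bonus only when they cooperate. The game and the function names are illustrative assumptions; exact enumeration like this is exponential in the number of players, which is why shapiq ships approximators.

```python
from fractions import Fraction
from itertools import combinations
from math import factorial


def value(coalition):
    """Toy game: players 0 and 2 each add 1; players 0 and 1 together earn a bonus of 2."""
    members = set(coalition)
    v = 0
    if 0 in members:
        v += 1
    if 2 in members:
        v += 1
    if {0, 1} <= members:
        v += 2  # synergy: only paid out when players 0 and 1 cooperate
    return v


def shapley_value(game, n, i):
    """Exact Shapley value of player i: weighted average of its marginal contributions."""
    others = [p for p in range(n) if p != i]
    total = Fraction(0)
    for size in range(n):
        weight = Fraction(factorial(size) * factorial(n - size - 1), factorial(n))
        for s in combinations(others, size):
            total += weight * (game(s + (i,)) - game(s))
    return total


def shapley_interaction(game, n, i, j):
    """Exact pairwise Shapley Interaction Index (SII) of players i and j."""
    others = [p for p in range(n) if p not in (i, j)]
    total = Fraction(0)
    for size in range(n - 1):
        weight = Fraction(factorial(size) * factorial(n - size - 2), factorial(n - 1))
        for s in combinations(others, size):
            # discrete second derivative: joint effect minus both individual effects
            delta = game(s + (i, j)) - game(s + (i,)) - game(s + (j,)) + game(s)
            total += weight * delta
    return total


n = 3
phi = [shapley_value(value, n, i) for i in range(n)]
print([float(p) for p in phi])  # [2.0, 1.0, 1.0]
print(float(shapley_interaction(value, n, 0, 1)))  # 2.0
print(float(shapley_interaction(value, n, 0, 2)))  # 0.0
```

The Shapley values split the bonus of 2 equally between players 0 and 1, which hides where it came from. The interaction index attributes the bonus to the pair (0, 1) as a whole, while the pair (0, 2), which has no synergy, gets 0.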

Install

shapiq is intended to work with Python 3.10 and above.

Install shapiq via uv:

```sh
uv add shapiq
```

or via pip:

```sh
pip install shapiq
```

Quickstart

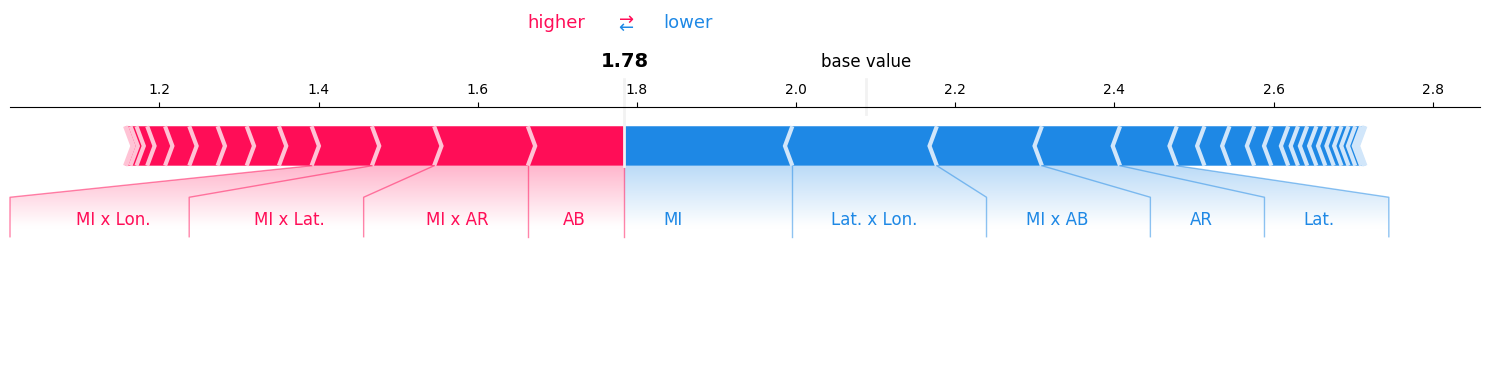

You can explain your model with shapiq.explainer and visualize Shapley interactions with shapiq.plot.

If you are interested in the underlying game-theoretic algorithms, check out the shapiq.approximator and shapiq.games modules.

Compute any-order feature interactions

Explain your models with Shapley interactions:

Just load your data and model, and then use a shapiq.Explainer to compute Shapley interactions.

```python
import shapiq
from sklearn.ensemble import RandomForestRegressor

# load data
X, y = shapiq.load_california_housing(to_numpy=True)

# train a model
model = RandomForestRegressor()
model.fit(X, y)

# set up an explainer with k-SII interaction values up to order 4
explainer = shapiq.TabularExplainer(
    model=model,
    data=X,
    index="k-SII",
    max_order=4,
)

# explain the model's prediction for the first sample
interaction_values = explainer.explain(X[0], budget=256)

# analyse interaction values
print(interaction_values)
# InteractionValues(
#     index=k-SII, max_order=4, min_order=0, estimated=False,
#     estimation_budget=256, n_players=8, baseline_value=2.07282292,
#     Top 10 interactions:
#         (0,): 1.696969079  # attribution of feature 0
#         (0, 5): 0.4847876
#         (0, 1): 0.4494288  # interaction between features 0 & 1
#         (0, 6): 0.4477677
#         (1, 5): 0.3750034
#         (4, 5): 0.3468325
#         (0, 3, 6): -0.320  # interaction between features 0 & 3 & 6
#         (2, 3, 6): -0.329
#         (0, 1, 5): -0.363
#         (6,): -0.56358890
# )
```
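In the output above, n_players=8 and estimation_budget=256. An 8-player game has 2**8 = 256 coalitions, so a budget of 256 is enough to evaluate every coalition once, which is plausibly why the result is reported as estimated=False. The plain-Python sketch below (hypothetical helper names, independent of shapiq) enumerates the coalitions and checks that arithmetic.

```python
from itertools import combinations


def all_coalitions(n_players):
    """Yield every coalition (tuple of player indices), from the empty set to all players."""
    for size in range(n_players + 1):
        yield from combinations(range(n_players), size)


def budget_covers_all_coalitions(n_players, budget):
    """True if a budget of game evaluations suffices to query every coalition once."""
    return budget >= 2 ** n_players


coalitions = list(all_coalitions(8))
print(len(coalitions))  # 256
print(coalitions[0], coalitions[-1])  # () (0, 1, 2, 3, 4, 5, 6, 7)
print(budget_covers_all_coalitions(8, budget=256))  # True
print(budget_covers_all_coalitions(9, budget=256))  # False
```

Each additional feature doubles the number of coalitions, so for wider datasets the budget quickly falls far below 2**n and the values have to be estimated from a subset of coalitions.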

Compute Shapley values like you are used to with SHAP

If you are used to working with SHAP, you can compute Shapley values with shapiq the same way: load your data and model, then use shapiq.Explainer.

Setting the index to "SV" gives you the Shapley values as you know them from SHAP.

```python
import shapiq

data, model = ...  # get your data and model
explainer = shapiq.Explainer(
    model=model,
    data=data,
    index="SV",  # Shapley values
)
shapley_values = explainer.explain(data[0])
shapley_values.plot_force(feature_names=...)
```
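A quick sanity check for Shapley values is the efficiency property: the baseline value plus all Shapley values equals the model's prediction. The sketch below verifies this for a hypothetical linear model under baseline imputation (absent features are replaced by a fixed reference point), where the exact Shapley value of feature i has the closed form w_i * (x_i - b_i). The model, the reference point, and the imputation scheme are assumptions for illustration; shapiq's explainers may treat absent features differently.

```python
def linear_predict(weights, intercept, z):
    """Prediction of a linear model f(z) = intercept + sum_i w_i * z_i."""
    return intercept + sum(w * zi for w, zi in zip(weights, z))


def linear_shapley_values(weights, x, baseline):
    """Exact Shapley values of a linear model when absent features take baseline values."""
    return [w * (xi - bi) for w, xi, bi in zip(weights, x, baseline)]


weights, intercept = [2.0, -1.0, 0.5], 0.25
x = [3.0, 3.0, 4.0]         # instance to explain
baseline = [1.0, 1.0, 2.0]  # hypothetical reference point

phi = linear_shapley_values(weights, x, baseline)
baseline_value = linear_predict(weights, intercept, baseline)
prediction = linear_predict(weights, intercept, x)

print(phi)  # [4.0, -2.0, 1.0]
print(baseline_value + sum(phi), prediction)  # 5.25 5.25
```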

Once you have the Shapley values, you can easily compute interaction values as well:

```python
explainer = shapiq.Explainer(
    model=model,
    data=data,
    index="k-SII",  # k-SII interaction values
    max_order=2,  # specify any order you want
)
interaction_values = explainer.explain(data[0])
interaction_values.plot_force(feature_names=...)
```

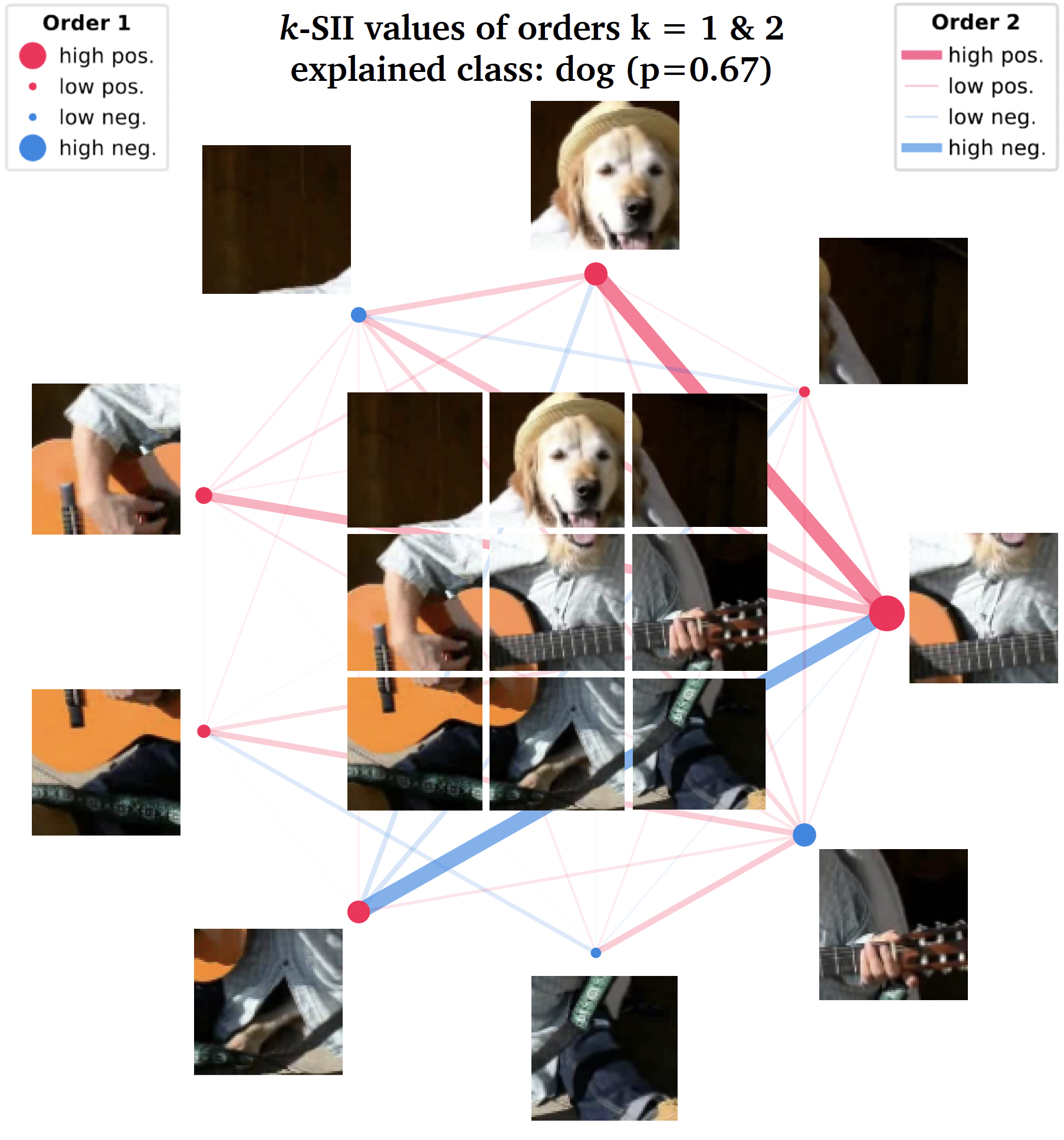
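Raising max_order increases the number of interaction terms to estimate. Counting one term per feature subset of size 1 up to k (and ignoring the empty coalition), n features yield the sum of the binomial coefficients C(n, s) for s = 1..k. The small helper below (hypothetical name) evaluates this count for the 8 features of the California housing example.

```python
from math import comb


def n_interactions(n_players, max_order, min_order=1):
    """Number of player subsets whose size lies between min_order and max_order."""
    return sum(comb(n_players, s) for s in range(min_order, max_order + 1))


print(n_interactions(8, 1))  # 8: one Shapley value per feature
print(n_interactions(8, 2))  # 36: 8 + 28
print(n_interactions(8, 4))  # 162: 8 + 28 + 56 + 70
```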

Visualize feature interactions

A handy way to visualize interaction scores up to order 2 is a network plot. The nodes represent feature attributions and the edges represent the interactions between features. The strength and size of the nodes and edges are proportional to the absolute values of the attributions and interactions, respectively.

```python
shapiq.network_plot(
    first_order_values=interaction_values.get_n_order_values(1),
    second_order_values=interaction_values.get_n_order_values(2),
)

# or use
interaction_values.plot_network()
```

The code above produces a network plot of the first- and second-order interaction values.
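The mapping from interaction values to nodes and edges can be sketched in plain Python. This sketch uses the ten values printed in the California housing example, keeps only orders 1 and 2 (so third-order terms such as (0, 3, 6) are left out), and scales sizes by the largest absolute value. The function name and the scaling rule are assumptions; shapiq's own plotting code may scale differently.

```python
# Top-10 k-SII values from the California housing example above
top_interactions = {
    (0,): 1.696969079,
    (0, 5): 0.4847876,
    (0, 1): 0.4494288,
    (0, 6): 0.4477677,
    (1, 5): 0.3750034,
    (4, 5): 0.3468325,
    (0, 3, 6): -0.320,
    (2, 3, 6): -0.329,
    (0, 1, 5): -0.363,
    (6,): -0.56358890,
}


def network_sizes(values, max_size=1.0):
    """Split values into nodes (order 1) and edges (order 2); sizes follow |value|."""
    nodes = {key[0]: v for key, v in values.items() if len(key) == 1}
    edges = {key: v for key, v in values.items() if len(key) == 2}
    largest = max(abs(v) for v in [*nodes.values(), *edges.values()])
    node_sizes = {p: max_size * abs(v) / largest for p, v in nodes.items()}
    edge_widths = {e: max_size * abs(v) / largest for e, v in edges.items()}
    return node_sizes, edge_widths


node_sizes, edge_widths = network_sizes(top_interactions)
print(sorted(node_sizes))   # [0, 6]
print(sorted(edge_widths))  # [(0, 1), (0, 5), (0, 6), (1, 5), (4, 5)]
print(node_sizes[0])        # 1.0
```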

Explain TabPFN

With shapiq you can also explain TabPFN by making use of the remove-and-recontextualize explanation paradigm implemented in shapiq.TabPFNExplainer.

```python
import shapiq
import tabpfn

data, labels = ...  # load your data
model = tabpfn.TabPFNClassifier()  # get TabPFN
model.fit(data, labels)  # "fit" TabPFN (optional)
explainer = shapiq.TabPFNExplainer(  # set up the explainer
    model=model,
    data=data,
    labels=labels,
    index="FSII",
)
fsii_values = explainer.explain(data[0])  # explain with Faithful Shapley Interactions (FSII)
fsii_values.plot_force()  # plot the force plot
```
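The remove-and-recontextualize idea can be sketched without TabPFN. Instead of imputing removed features, the model is refit (recontextualized) on the training data restricted to the coalition's features, and the explained sample is predicted from those same features. In this minimal sketch a NumPy least-squares fit stands in for TabPFN's in-context learning, the function name is hypothetical, and the empty coalition falls back to the mean label (an assumption). It is not how shapiq.TabPFNExplainer is implemented internally.

```python
import numpy as np


def recontextualized_value(X_train, y_train, x, coalition):
    """Coalition value: refit on only the coalition's features, then predict x.

    A least-squares linear model stands in for TabPFN (assumption)."""
    features = sorted(coalition)
    if not features:
        return float(np.mean(y_train))  # no features: fall back to the mean label
    A = np.column_stack([X_train[:, features], np.ones(len(X_train))])
    coef, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    return float(np.append(x[features], 1.0) @ coef)


rng = np.random.default_rng(0)
X_train = rng.normal(size=(50, 3))
y_train = 2.0 * X_train[:, 0] - 1.0 * X_train[:, 1] + 0.5  # feature 2 is irrelevant
x = np.array([1.0, 2.0, 3.0])

empty_value = recontextualized_value(X_train, y_train, x, set())
pair_value = recontextualized_value(X_train, y_train, x, {0, 1})    # close to 2*1 - 1*2 + 0.5
full_value = recontextualized_value(X_train, y_train, x, {0, 1, 2})  # same, feature 2 adds nothing
print(empty_value, pair_value, full_value)
```

These coalition values can then be fed into any Shapley value or interaction computation in place of an imputation-based game.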

Use SPEX (SParse EXplainer)

For large-scale use cases you can also check out the SPEX approximator.

```python
import shapiq

# load your data and model with a large number of features
data, model, n_features = ...

# use the SPEX approximator directly
approximator = shapiq.SPEX(n=n_features, index="FBII", max_order=2)
fbii_scores = approximator.approximate(budget=2000, game=model.predict)

# or use SPEX with an explainer
explainer = shapiq.Explainer(
    model=model,
    data=data,
    index="FBII",
    max_order=2,
    approximator="spex",  # specify SPEX as approximator
)
explanation = explainer.explain(data[0])
```
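The budget argument caps how many times the game is evaluated. SPEX itself exploits sparsity among interactions, which is not shown here; as a simpler point of contrast, the sketch below is the classic permutation-sampling estimator for Shapley values run under a fixed budget. It assumes a game that maps a boolean coalition matrix of shape (n_coalitions, n_players) to a vector of values, which mirrors how shapiq games are called but should be treated as an assumption; the function and game names are hypothetical.

```python
import numpy as np


def permutation_sampling_sv(game, n_players, budget, seed=0):
    """Estimate Shapley values with at most `budget` game evaluations.

    Each sampled permutation costs n_players + 1 evaluations: the empty
    coalition, then one more player at a time in permutation order."""
    rng = np.random.default_rng(seed)
    n_permutations = budget // (n_players + 1)
    if n_permutations == 0:
        raise ValueError("budget too small for a single permutation")
    estimates = np.zeros(n_players)
    for _ in range(n_permutations):
        order = rng.permutation(n_players)
        # Row k holds the coalition formed by the first k players of the permutation.
        coalitions = np.zeros((n_players + 1, n_players), dtype=bool)
        for k, player in enumerate(order, start=1):
            coalitions[k:, player] = True
        values = game(coalitions)
        # np.diff gives the marginal contribution of each newly added player.
        estimates[order] += np.diff(values)
    return estimates / n_permutations


weights = np.array([1.0, -2.0, 0.5, 3.0])


def additive_game(coalitions):
    """Hypothetical additive game: each player contributes its weight when present."""
    return coalitions.astype(float) @ weights


estimate = permutation_sampling_sv(additive_game, n_players=4, budget=2000)
print(estimate)
```

For an additive game every permutation yields the exact Shapley values, so the estimate matches the weights; with real interactions the estimate only converges as the budget grows, which is where structure-exploiting approximators like SPEX become useful.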

Documentation with tutorials

The documentation of shapiq can be found at https://shapiq.readthedocs.io.

If you are new to Shapley values or Shapley interactions, we recommend starting with the introduction and the basic tutorials.

There are many great resources available to get you started with Shapley values and interactions.

Citation

If you use shapiq and enjoy it, please consider citing our NeurIPS paper or consider starring this repository.

```bibtex
@inproceedings{Muschalik.2024b,
  title     = {shapiq: Shapley Interactions for Machine Learning},
  author    = {Maximilian Muschalik and Hubert Baniecki and Fabian Fumagalli and
               Patrick Kolpaczki and Barbara Hammer and Eyke H\"{u}llermeier},
  booktitle = {The Thirty-eight Conference on Neural Information Processing Systems Datasets and Benchmarks Track},
  year      = {2024},
  url       = {https://openreview.net/forum?id=knxGmi6SJi}
}
```

Contributing

We welcome any kind of contributions to shapiq!

If you are interested in contributing, please check out our contributing guidelines.

If you have any questions, feel free to reach out to us.

We are tracking our progress via a project board and the issues section.

If you find a bug or have a feature request, please open an issue or help us fix it by opening a pull request.

License

This project is licensed under the MIT License.

Funding

This work is openly available under the MIT license. Some authors acknowledge the financial support by the German Research Foundation (DFG) under grant number TRR 318/1 2021 438445824.

Built by the shapiq team.

Owner

- Name: Maximilian

- Login: mmschlk

- Kind: user

- Location: Germany

- Company: AIML@LMU

- Twitter: Muschalik_Max

- Repositories: 8

- Profile: https://github.com/mmschlk

PhD student at LMU Munich

GitHub Events

Total

- Fork event: 22

- Create event: 89

- Release event: 8

- Issues event: 147

- Watch event: 377

- Delete event: 74

- Member event: 4

- Issue comment event: 116

- Push event: 438

- Gollum event: 3

- Pull request review comment event: 31

- Pull request review event: 98

- Pull request event: 145

Last Year

- Fork event: 22

- Create event: 89

- Release event: 8

- Issues event: 147

- Watch event: 377

- Delete event: 74

- Member event: 4

- Issue comment event: 116

- Push event: 438

- Gollum event: 3

- Pull request review comment event: 31

- Pull request review event: 98

- Pull request event: 145

Issues and Pull Requests

Last synced: 6 months ago

All Time

- Total issues: 62

- Total pull requests: 55

- Average time to close issues: about 2 months

- Average time to close pull requests: 6 days

- Total issue authors: 17

- Total pull request authors: 14

- Average comments per issue: 0.76

- Average comments per pull request: 0.29

- Merged pull requests: 39

- Bot issues: 0

- Bot pull requests: 7

Past Year

- Issues: 58

- Pull requests: 55

- Average time to close issues: about 1 month

- Average time to close pull requests: 6 days

- Issue authors: 17

- Pull request authors: 14

- Average comments per issue: 0.78

- Average comments per pull request: 0.29

- Merged pull requests: 39

- Bot issues: 0

- Bot pull requests: 7

Top Authors

Issue Authors

- mmschlk (113)

- hbaniecki (23)

- FFmgll (5)

- CharlesCousyn (2)

- asif7adil (2)

- Linh-nk (2)

- AsyaOrlova (1)

- landonbutler (1)

- Deathn0t (1)

- 8W9aG (1)

- annprzy (1)

- xingzhongyu (1)

- glennkroegel (1)

- jreivilo (1)

- danielarifmurphy (1)

Pull Request Authors

- mmschlk (80)

- dependabot[bot] (48)

- Advueu963 (14)

- hbaniecki (12)

- FFmgll (5)

- kolpaczki (4)

- AlessandroPierro (2)

- pwhofman (2)

- r-visser (2)

- IsaH57 (2)

- heinzll (2)

- JuliaHerbinger (1)

- annprzy (1)

- chenhao20241224 (1)

- justinkang221 (1)


Packages

- Total packages: 1

- Total downloads: pypi 9,507 last month

- Total dependent packages: 0

- Total dependent repositories: 0

- Total versions: 16

- Total maintainers: 2

pypi.org: shapiq

Shapley Interactions for Machine Learning

- Documentation: https://shapiq.readthedocs.io/

- License: mit


Latest release: 1.3.1

published 7 months ago