Science Score: 33.0%

This score indicates how likely this project is to be science-related based on various indicators:

-

○CITATION.cff file

-

✓codemeta.json file

Found codemeta.json file -

○.zenodo.json file

-

○DOI references

-

✓Academic publication links

Links to: arxiv.org -

✓Committers with academic emails

1 of 20 committers (5.0%) from academic institutions -

○Institutional organization owner

-

○JOSS paper metadata

-

○Scientific vocabulary similarity

Low similarity (16.8%) to scientific vocabulary

Keywords

Repository

Bias Auditing & Fair ML Toolkit

Basic Info

- Host: GitHub

- Owner: dssg

- License: mit

- Language: Python

- Default Branch: master

- Homepage: http://www.datasciencepublicpolicy.org/aequitas/

- Size: 978 MB

Statistics

- Stars: 727

- Watchers: 42

- Forks: 119

- Open Issues: 53

- Releases: 0

Topics

Metadata Files

README.md

Aequitas: Bias Auditing & "Correction" Toolkit

[comment]: <> (Add badges for coverage when we have tests, update repo for other types of badges!)

aequitas is an open-source bias auditing and Fair ML toolkit for data scientists, machine learning researchers, and policymakers. We provide an easy-to-use and transparent tool for auditing predictors of ML models, as well as experimenting with "correcting biased model" using Fair ML methods in binary classification settings.

For more context around dealing with bias and fairness issues in AI//ML systems, take a look at our detailed tutorial and related publications.

Version 1.0.0: Aequitas Flow - Optimizing Fairness in ML Pipelines

Explore Aequitas Flow, our latest update in version 1.0.0, designed to augment bias audits with bias mitigation and allow enrich experimentation with Fair ML methods using our new, streamlined capabilities.

Installation

cmd

pip install aequitas

or

cmd

pip install git+https://github.com/dssg/aequitas.git

Example Notebooks supporting various tasks and workflows

| Notebook | Description | |-|-| | Audit a Model's Predictions | Check how to do an in-depth bias audit with the COMPAS example notebook or use your own data. | | Correct a Model's Predictions | Create a dataframe to audit a specific model, and correct the predictions with group-specific thresholds in the Model correction notebook. | | Train a Model with Fairness Considerations | Experiment with your own dataset or methods and check the results of a Fair ML experiment. | | Add your method to Aequitas Flow | Learn how to add your own method to the Aequitas Flow toolkit. |

Quickstart on Bias Auditing

To perform a bias audit, you need a pandas DataFrame with the following format:

| | label | score | sensattr1 | sensattr2 | ... | sensattrN | |-----|-------|-------|-------------|-------------|-----|-------------| | 0 | 0 | 0 | A | F | | Y | | 1 | 0 | 1 | C | F | | N | | 2 | 1 | 1 | B | T | | N | | ... | | | | | | | | N | 1 | 0 | E | T | | Y |

where label is the target variable for your prediction task and score is the model output.

Only one sensitive attribute is required; all must be in Categorical format.

```python from aequitas import Audit

audit = Audit(df) ```

To obtain a summary of the bias audit, run: ```python

Select the fairness metric of interest for your dataset

audit.summaryplot(["tpr", "fpr", "pprev"]) ``` <img src="https://raw.githubusercontent.com/dssg/aequitas/master/docs/images/summary_chart.svg" width="900">

We can also observe a single metric and sensitive attribute:

python

audit.disparity_plot(attribute="sens_attr_2", metrics=["fpr"])

Quickstart on experimenting with Bias Reduction (Fair ML) methods

To perform an experiment, a dataset is required. It must have a label column, a sensitive attribute column, and features.

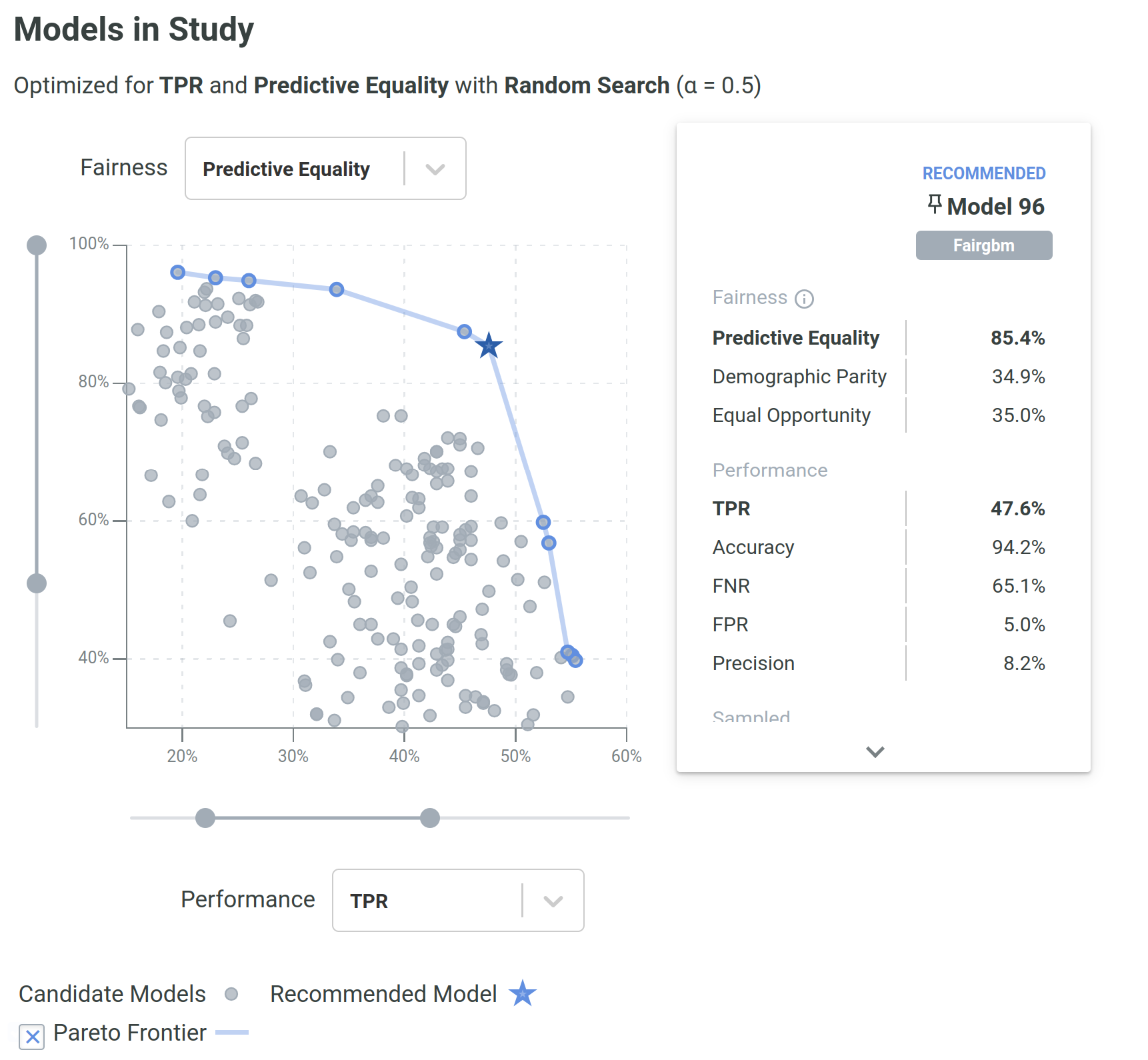

```python from aequitas.flow import DefaultExperiment

experiment = DefaultExperiment.frompandas(dataset, targetfeature="label", sensitivefeature="attr", experimentsize="small") experiment.run()

experiment.plot_pareto() ```

The DefaultExperiment class allows for an easier entry-point to experiments in the package. This class has two main parameters to configure the experiment: experiment_size and methods. The former defines the size of the experiment, which can be either test (1 model per method), small (10 models per method), medium (50 models per method), or large (100 models per method). The latter defines the methods to be used in the experiment, which can be either all or a subset, namely preprocessing or inprocessing.

Several aspects of an experiment (e.g., algorithms, number of runs, dataset splitting) can be configured individually in more granular detail in the Experiment class.

[comment]: <> (Make default experiment this easy to run)

Quickstart on Method Training

Assuming an aequitas.flow.Dataset, it is possible to train methods and use their functionality depending on the type of algorithm (pre-, in-, or post-processing).

For pre-processing methods: ```python from aequitas.flow.methods.preprocessing import PrevalenceSampling

sampler = PrevalenceSampling() sampler.fit(dataset.train.X, dataset.train.y, dataset.train.s) Xsample, ysample, s_sample = sampler.transform(dataset.train.X, dataset.train.y, dataset.train.s) ```

for in-processing methods: ```python from aequitas.flow.methods.inprocessing import FairGBM

model = FairGBM() model.fit(Xsample, ysample, ssample) scoresval = model.predictproba(dataset.validation.X, dataset.validation.y, dataset.validation.s) scorestest = model.predict_proba(dataset.test.X, dataset.test.y, dataset.test.s) ```

for post-processing methods: ```python from aequitas.flow.methods.postprocessing import BalancedGroupThreshold

threshold = BalancedGroupThreshold("toppct", 0.1, "fpr") threshold.fit(dataset.validation.X, scoresval, dataset.validation.y, dataset.validation.s) correctedscores = threshold.transform(dataset.test.X, scorestest, dataset.test.s) ```

With this sequence, we would sample a dataset, train a FairGBM model, and then adjust the scores to have equal FPR per group (achieving Predictive Equality).

Features of the Toolkit

- Metrics: Audits based on confusion matrix-based metrics with flexibility to select the more important ones depending on use-case.

- Plotting options: The major outcomes of bias auditing and experimenting offer also plots adequate to different user objectives.

- Fair ML methods: Interface and implementation of several Fair ML methods, including pre-, in-, and post-processing methods.

- Datasets: Two "families" of datasets included, named BankAccountFraud and FolkTables.

- Extensibility: Adapted to receive user-implemented methods, with intuitive interfaces and method signatures.

- Reproducibility: Option to save artifacts of Experiments, from the transformed data to the fitted models and predictions.

- Modularity: Fair ML Methods and default datasets can be used individually or integrated in an

Experiment. - Hyperparameter optimization: Out of the box integration and abstraction of Optuna's hyperparameter optimization capabilities for experimentation.

Fair ML Methods

We support a range of methods designed to address bias and discrimination in different stages of the ML pipeline.

| Type | Method | Description |

|---|---|---|

| Pre-processing | Data Repairer | Transforms the data distribution so that a given feature distribution is marginally independent of the sensitive attribute, s. |

| Label Flipping | Flips the labels of a fraction of the training data according to the Fair Ordering-Based Noise Correction method. | |

| Prevalence Sampling | Generates a training sample with controllable balanced prevalence for the groups in dataset, either by undersampling or oversampling. | |

| Massaging | Flips selected labels to reduce prevalence disparity between groups. | |

| Correlation Suppression | Removes features that are highly correlated with the sensitive attribute. | |

| Feature Importance Suppression | Iterively removes the most important features with respect to the sensitive attribute. | |

| In-processing | FairGBM | Novel method where a boosting trees algorithm (LightGBM) is subject to pre-defined fairness constraints. |

| Fairlearn Classifier | Models from the Fairlearn reductions package. Possible parameterization for ExponentiatedGradient and GridSearch methods. | |

| Post-processing | Group Threshold | Adjusts the threshold per group to obtain a certain fairness criterion (e.g., all groups with 10% FPR) |

| Balanced Group Threshold | Adjusts the threshold per group to obtain a certain fairness criterion, while satisfying a global constraint (e.g., Demographic Parity with a global FPR of 10%) | |

Fairness Metrics

aequitas provides the value of confusion matrix metrics for each possible value of the sensitive attribute columns To calculate fairness metrics. The cells of the confusion metrics are:

| Cell | Symbol | Description | |--------------------|:-------:|----------------------------------------------------------------| | False Positive | $FPg$ | The number of entities of the group with $\hat{Y}=1$ and $Y=0$ | | False Negative | $FNg$ | The number of entities of the group with $\hat{Y}=0$ and $Y=1$ | | True Positive | $TPg$ | The number of entities of the group with $\hat{Y}=1$ and $Y=1$ | | True Negative | $TNg$ | The number of entities of the group with $\hat{Y}=0$ and $Y=0$ |

From these, we calculate several metrics:

| Metric | Formula | Description | |-------------------------------|:---------------------------------------------------:|-------------------------------------------------------------------------------------------| | Accuracy | $Accg = \cfrac{TPg + TNg}{|g|}$ | The fraction of correctly predicted entities withing the group. | | True Positive Rate | $TPRg = \cfrac{TPg}{TPg + FNg}$ | The fraction of true positives within the label positive entities of a group. | | True Negative Rate | $TNRg = \cfrac{TNg}{TNg + FPg}$ | The fraction of true negatives within the label negative entities of a group. | | False Negative Rate | $FNRg = \cfrac{FNg}{TPg + FNg}$ | The fraction of false negatives within the label positive entities of a group. | | False Positive Rate | $FPRg = \cfrac{FPg}{TNg + FPg}$ | The fraction of false positives within the label negative entities of a group. | | Precision | $Precisiong = \cfrac{TPg}{TPg + FPg}$ | The fraction of true positives within the predicted positive entities of a group. | | Negative Predictive Value | $NPVg = \cfrac{TNg}{TNg + FNg}$ | The fraction of true negatives within the predicted negative entities of a group. | | False Discovery Rate | $FDRg = \cfrac{FPg}{TPg + FPg}$ | The fraction of false positives within the predicted positive entities of a group. | | False Omission Rate | $FORg = \cfrac{FNg}{TNg + FNg}$ | The fraction of false negatives within the predicted negative entities of a group. | | Predicted Positive | $PPg = TPg + FPg$ | The number of entities within a group where the decision is positive, i.e., $\hat{Y}=1$. | | Total Predictive Positive | $K = \sum PP{g(ai)}$ | The total number of entities predicted positive across groups defined by $A$ | | Predicted Negative | $PNg = TNg + FNg$ | The number of entities within a group where the decision is negative, i.e., $\hat{Y}=0$ | | Predicted Prevalence | $Pprevg=\cfrac{PPg}{|g|}=P(\hat{Y}=1 | A=ai)$ | The fraction of entities within a group which were predicted as positive. | | Predicted Positive Rate | $PPRg = \cfrac{PPg}{K} = P(A=A_i | \hat{Y}=1)$ | The fraction of the entities predicted as positive that belong to a certain group. |

These are implemented in the Group class. With the Bias class, several fairness metrics can be derived by different combinations of ratios of these metrics.

Further documentation

You can find the toolkit documentation here.

For more examples of the python library and a deep dive into concepts of fairness in ML, see our Tutorial presented on KDD and AAAI. Visit also the Aequitas project website.

Citing Aequitas

To cite Aequitas, please refer to the following papers:

- Aequitas Flow: Streamlining Fair ML Experimentation (2024) PDF

```bib @article{jesus2024aequitas, title={Aequitas Flow: Streamlining Fair ML Experimentation}, author={Jesus, S{\'e}rgio and Saleiro, Pedro and Jorge, Beatriz M and Ribeiro, Rita P and Gama, Jo{~a}o and Bizarro, Pedro and Ghani, Rayid and others}, journal={arXiv preprint arXiv:2405.05809}, year={2024} }

```

- Aequitas: A Bias and Fairness Audit Toolkit (2018) PDF

bib

@article{2018aequitas,

title={Aequitas: A Bias and Fairness Audit Toolkit},

author={Saleiro, Pedro and Kuester, Benedict and Stevens, Abby and Anisfeld, Ari and Hinkson, Loren and London, Jesse and Ghani, Rayid}, journal={arXiv preprint arXiv:1811.05577}, year={2018}}

Owner

- Name: Data Science for Social Good

- Login: dssg

- Kind: organization

- Email: info@datascienceforsocialgood.org

- Location: Pittsburgh, PA

- Website: http://dssgfellowship.org

- Twitter: datascifellows

- Repositories: 145

- Profile: https://github.com/dssg

GitHub Events

Total

- Issues event: 2

- Watch event: 41

- Delete event: 4

- Issue comment event: 2

- Push event: 1

- Pull request review event: 1

- Pull request event: 2

- Fork event: 11

- Create event: 1

Last Year

- Issues event: 2

- Watch event: 41

- Delete event: 4

- Issue comment event: 2

- Push event: 1

- Pull request review event: 1

- Pull request event: 2

- Fork event: 11

- Create event: 1

Committers

Last synced: over 2 years ago

Top Committers

| Name | Commits | |

|---|---|---|

| Pedro Saleiro | p****o@g****m | 436 |

| Loren Hinkson | l****n@g****m | 117 |

| Jesse London | j****n@g****m | 66 |

| Ari | a****d@u****u | 34 |

| Abby Stevens | a****s@g****m | 27 |

| Rayid Ghani | r****i | 14 |

| Rayid Ghani | r****i@g****m | 7 |

| sergio.jesus | 3****s | 4 |

| techbar | t****r@T****l | 3 |

| Pedro Saleiro | p****o@f****m | 2 |

| kalkairis | k****s@g****m | 2 |

| Ari Anisfeld | a****d@g****m | 2 |

| David Polido | d****o@g****m | 1 |

| João Palmeiro | j****o@g****m | 1 |

| Skyler Wharton | s****r@s****m | 1 |

| Beatriz Malveiro | m****o@g****m | 1 |

| Duncan Parkes | d****s@g****m | 1 |

| andre.cruz | a****z@f****m | 1 |

| Davut Emre | d****r@g****m | 1 |

| André Cruz | A****z | 1 |

Committer Domains (Top 20 + Academic)

Issues and Pull Requests

Last synced: 8 months ago

All Time

- Total issues: 113

- Total pull requests: 128

- Average time to close issues: over 1 year

- Average time to close pull requests: about 2 months

- Total issue authors: 32

- Total pull request authors: 22

- Average comments per issue: 0.67

- Average comments per pull request: 0.33

- Merged pull requests: 95

- Bot issues: 0

- Bot pull requests: 18

Past Year

- Issues: 11

- Pull requests: 9

- Average time to close issues: 4 days

- Average time to close pull requests: 13 days

- Issue authors: 7

- Pull request authors: 3

- Average comments per issue: 0.64

- Average comments per pull request: 0.56

- Merged pull requests: 5

- Bot issues: 0

- Bot pull requests: 0

Top Authors

Issue Authors

- saleiro (16)

- sgpjesus (15)

- LiFaytheGoblin (9)

- rayidghani (6)

- yajush1998 (3)

- alenastern (3)

- lorenh516 (3)

- IoanaSil14 (2)

- valmik-patel (2)

- reluzita (2)

- ejm714 (2)

- cherdeman (2)

- wangw23 (1)

- lshpaner (1)

- AndreFCruz (1)

Pull Request Authors

- reluzita (38)

- sgpjesus (36)

- dependabot[bot] (25)

- saleiro (6)

- lorenh516 (6)

- jesteria (6)

- valmik-patel (5)

- davidpolido (4)

- anisfeld (4)

- biamalveiro (3)

- sujith-kamme (2)

- AndreFCruz (2)

- diehlbw (2)

- skylerwharton (1)

- LiFaytheGoblin (1)

Top Labels

Issue Labels

Pull Request Labels

Packages

- Total packages: 2

-

Total downloads:

- pypi 24,056 last-month

-

Total dependent packages: 2

(may contain duplicates) -

Total dependent repositories: 50

(may contain duplicates) - Total versions: 25

- Total maintainers: 2

pypi.org: aequitas

The bias and fairness audit toolkit.

- Homepage: https://github.com/dssg/aequitas

- Documentation: https://aequitas.readthedocs.io/

- License: MIT

-

Latest release: 1.0.0

published about 2 years ago

Rankings

pypi.org: aequitas-lite

The bias and fairness audit toolkit.

- Homepage: https://github.com/dssg/aequitas

- Documentation: https://aequitas-lite.readthedocs.io/

- License: https://github.com/dssg/aequitas/blob/master/LICENSE

-

Latest release: 0.43.5

published almost 3 years ago

Rankings

Maintainers (1)

Dependencies

- ipython * development

- Flask ==0.12.2

- Flask-Bootstrap ==3.3.7.1

- SQLAlchemy >=1.1.1

- altair >=4.1.0

- markdown2 ==2.3.5

- matplotlib >=3.0.3

- millify ==0.1.1

- ohio >=0.2.0

- pandas >=0.24.1

- pyyaml >=5.1

- scipy >=0.18.1

- seaborn >=0.9.0

- tabulate ==0.8.2

- xhtml2pdf ==0.2.2

- python 3.6.6 build

- argcmdr ==0.6.0

- awsebcli ==3.12.4

- bumpversion ==0.5.3

- flake8 ==3.5.0

- twine ==1.14.0

- 1100 dependencies

- @babel/core ^7.2.2 development

- @babel/polyfill ^7.8.7 development

- @babel/preset-env ^7.3.1 development

- @babel/preset-react ^7.0.0 development

- @emotion/react ^11.7.1 development

- @emotion/styled ^11.6.0 development

- @feedzai/eslint-config-feedzai ^4.0.1 development

- @feedzai/eslint-config-feedzai-react ^4.1.0 development

- @mui/material ^5.3.0 development

- @testing-library/jest-dom ^5.14.1 development

- @testing-library/react ^11.2.7 development

- @testing-library/user-event ^13.1.9 development

- autoprefixer ^9.8.6 development

- babel-jest ^27.0.5 development

- babel-loader ^8.0.5 development

- css-loader ^2.1.1 development

- eslint ^6.8.0 development

- eslint-config-prettier ^8.3.0 development

- eslint-plugin-import ^2.23.2 development

- eslint-plugin-jest ^22.21.0 development

- eslint-plugin-jsx-a11y ^6.4.1 development

- eslint-plugin-react ^7.23.2 development

- eslint-plugin-react-hooks ^2.5.1 development

- file-loader ^3.0.1 development

- jest ^27.0.4 development

- language-tags 1.0.5 development

- postcss ^8.3.0 development

- postcss-loader ^3.0.0 development

- prettier 2.3.0 development

- process ^0.11.10 development

- prop-types ^15.7.2 development

- react ^16.8.1 development

- react-dom ^16.8.1 development

- sass ^1.32.0 development

- sass-loader ^10.1.1 development

- style-loader ^0.23.1 development

- webpack ^5.0.0 development

- webpack-cli ^3.3.12 development

- webpack-dev-server ^3.11.0 development

- @fontsource/roboto ^4.4.5

- @headlessui/react ^1.2.0

- @tippyjs/react ^4.2.5

- @visx/axis ^1.10.0

- @visx/glyph ^1.7.0

- @visx/grid ^1.11.1

- @visx/group ^1.7.0

- @visx/scale ^1.7.0

- @visx/shape ^1.11.1

- @visx/zoom ^2.6.1

- d3-array ^2.12.1

- d3-format ^3.0.0

- d3-selection ^2.0.0

- lodash ^4.17.15

- react-contexify ^5.0.0

- react-error-boundary ^3.1.3

- react-icons ^4.2.0

- react-select ^4.3.1