https://github.com/sparks-baird/self-driving-lab-demo

Software and instructions for setting up and running a self-driving lab (autonomous experimentation) demo using dimmable RGB LEDs, an 8-channel spectrophotometer, a microcontroller, and an adaptive design algorithm, as well as extensions to liquid- and solid-based color matching demos.

Science Score: 59.0%

This score indicates how likely this project is to be science-related based on various indicators:

- ○ CITATION.cff file

- ✓ codemeta.json file: Found codemeta.json file

- ✓ .zenodo.json file: Found .zenodo.json file

- ✓ DOI references: Found 7 DOI reference(s) in README

- ✓ Academic publication links: Links to zenodo.org

- ✓ Committers with academic emails: 1 of 3 committers (33.3%) from academic institutions

- ○ Institutional organization owner

- ○ JOSS paper metadata

- ○ Scientific vocabulary similarity: Low similarity (13.4%) to scientific vocabulary


Repository


Basic Info

- Host: GitHub

- Owner: sparks-baird

- License: mit

- Language: Jupyter Notebook

- Default Branch: main

- Homepage: https://self-driving-lab-demo.readthedocs.io/

- Size: 213 MB

Statistics

- Stars: 78

- Watchers: 5

- Forks: 10

- Open Issues: 48

- Releases: 17

Topics

Metadata Files

README.md


If you're reading this on GitHub, navigate to the documentation for tutorials, APIs, and more

self-driving-lab-demo

Software and instructions for setting up and running an autonomous (self-driving) laboratory optics demo using dimmable RGB LEDs, an 8-channel spectrophotometer, a microcontroller, and an adaptive design algorithm, as well as extensions to liquid- and solid-based color matching demos.

Demos

This repository covers three teaching and prototyping demos for self-driving laboratories in the fields of optics (light-mixing), chemistry (liquid-mixing), and solid-state materials science (solid-mixing).

CLSLab:Light

NOTE: Some updates have occurred since the creation of the video tutorial and the publication of the manuscript. Please read the description section of the YouTube video and see https://github.com/sparks-baird/self-driving-lab-demo/issues/245.

| White paper [postprint] | Build instructions manuscript | YouTube build instructions | Purchase* |
| --- | --- | --- | --- |

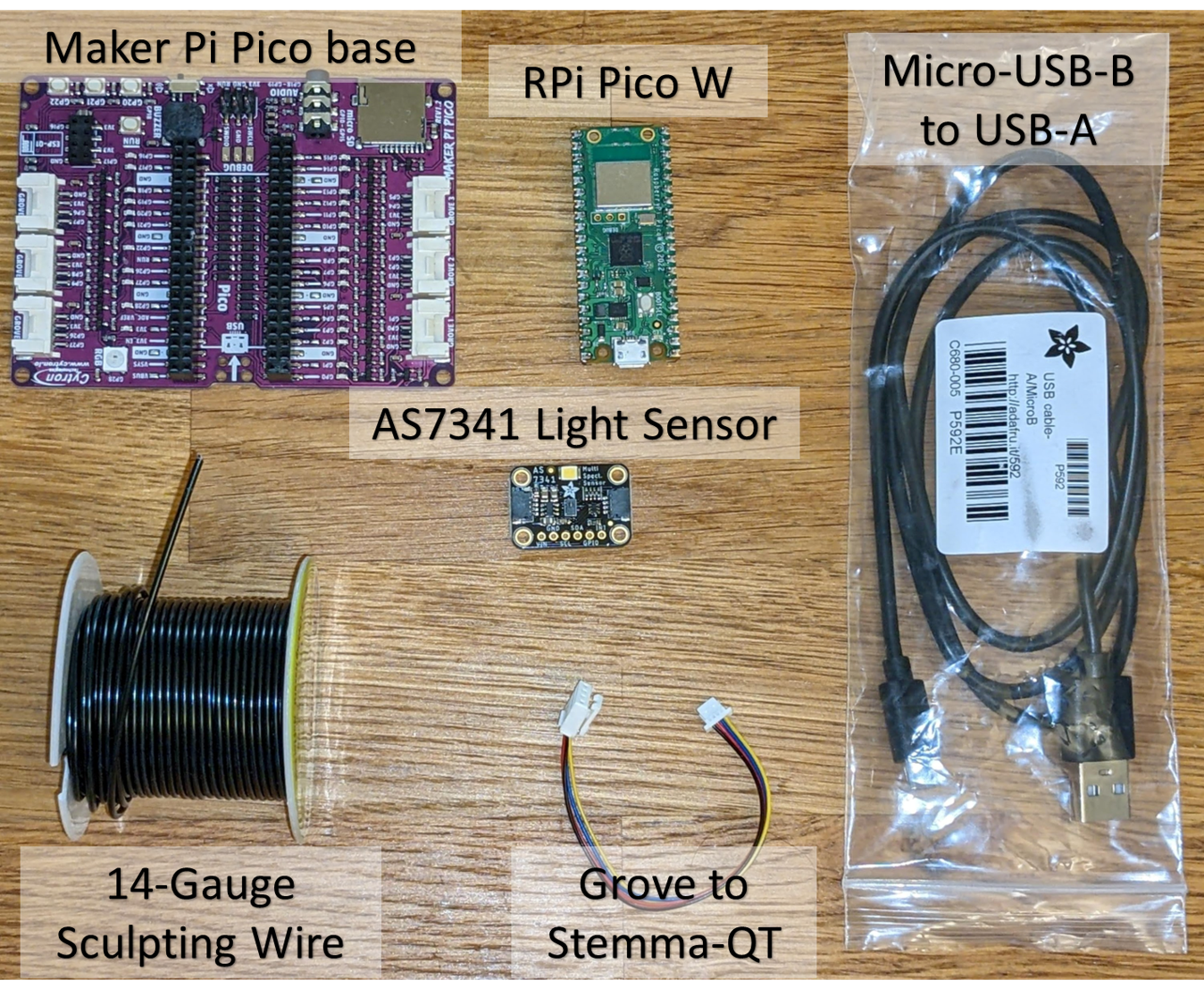

Self-driving labs are the future; however, the capital and expertise required can be daunting. We introduce the idea of an experimental optimization task for less than $100, a square foot of desk space, and an hour of total setup time from the shopping cart to the first "autonomous drive." For our first demo, we use optics rather than chemistry; after all, light is easier to move than matter. While not strictly materials-based, importantly, several core principles of a self-driving materials discovery lab are retained in this cross-domain example:

- sending commands to hardware to adjust physical parameters

- receiving measured objective properties

- decision-making via active learning

- utilizing cloud-based simulations

The demo is accessible, extensible, modular, and repeatable, making it an ideal candidate for both low-cost experimental adaptive design prototyping and learning the principles of self-driving laboratories in a low-risk setting.
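The core principles listed above (send a command, receive a measurement, decide what to try next) can be illustrated with a minimal, hardware-free sketch. Everything in it is hypothetical and not part of the `self-driving-lab-demo` package: `simulated_sensor` is a made-up stand-in for the LED and spectrophotometer, the error metric is a plain mean absolute difference, and the decision step is a simple random local search rather than the active learning used in the demo.

```python
import random


def simulated_sensor(r, g, b):
    # Hypothetical stand-in for the LED + spectrophotometer: maps RGB
    # brightness (0-255) to three made-up channel readings.
    return (0.9 * r + 0.1 * g, 0.8 * g + 0.1 * b, 0.1 * r + 0.9 * b)


def error(measured, target):
    # Mean absolute difference between measured and target readings.
    return sum(abs(m - t) for m, t in zip(measured, target)) / len(target)


def closed_loop(target_rgb, n_iter=50, step=40, seed=0):
    rng = random.Random(seed)
    target = simulated_sensor(*target_rgb)  # reference measurement to match
    best = tuple(rng.randint(0, 255) for _ in range(3))
    best_err = error(simulated_sensor(*best), target)
    history = [best_err]
    for _ in range(n_iter):
        # Decide: propose a candidate near the current best, clipped to 0-255.
        cand = tuple(min(255, max(0, c + rng.randint(-step, step))) for c in best)
        # Act and measure: "send" the command and read the simulated sensor.
        err = error(simulated_sensor(*cand), target)
        # Learn: keep the candidate only if it improves the match.
        if err < best_err:
            best, best_err = cand, err
        history.append(best_err)
    return best, best_err, history


best, best_err, history = closed_loop(target_rgb=(30, 120, 200))
print(best, round(best_err, 2))
```

Swapping `simulated_sensor` for a function that talks to real hardware leaves the loop unchanged, which is the sense in which the demo is modular.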

| Summary | Unassembled | Assembled |
| --- | --- | --- |
|  |  |  |

Users

University instructors utilizing CLSLab-Light during Spring 2023: 4 (~40 kits in total)

At-cost Commercialization

- GroupGets round 1: funded and fulfilled (19 kits)

- GroupGets round 2: funded and fulfilled (20 kits)

*CLSLab:Light is stocked in the GroupGets Store. It has a higher GroupGets fee (only GroupGets sees the extra profit). If you don't want to wait for new rounds and you'd rather order a pre-built kit, this is the best option right now.

CLSLab:Liquid

| Bill of materials |
| --- |

We extend the light-mixing demo to a color-matching materials optimization problem using dilute colored dyes. This optimization task costs less than 300 USD, requires less than three square feet of desk space, and takes less than three hours of total setup time from the shopping cart to the first autonomous drive. The demo is modular and extensible: additional peristaltic pump channels can be added, the dye reservoirs can be enlarged, and chemically sensitive parts can be replaced with chemically resistant ones.

| Summary | Schematic | Assembled |
| --- | --- | --- |
|  |  |  |

CLSLab:Solid

There are few to no examples of a low-cost demo platform involving the handling of solid-state materials (i.e., powders, pellets). For this demo, we propose using red, yellow, and blue powdered wax as a replacement for the liquid colored dyes. The demo is more expensive due to the need for robotics. The demo involves using tealight candle holders, transferring them to a rotating stage via a robotic arm, dispensing a combination of powders, melting the wax via an incandescent light bulb, measuring a discrete color spectrum, and moving the completed sample to a separate sample storage area.

See Also

- Journal of Brief Ideas submission

- Hackaday project page

- Adafruit Forum: Developing a closed-loop feedback system via DotStar LEDs

Basic Usage

I recommend going through the introductory Colab notebook, but here is a shorter version of how an optimization comparison can be run between grid search, random search, and Bayesian optimization using a free public demo.

Basic Installation

```bash
pip install self-driving-lab-demo
```

Client Setup for Public Test Demo

```python
from self_driving_lab_demo import (
    SelfDrivingLabDemoLight,
    # SelfDrivingLabDemoLiquid,
    mqtt_observe_sensor_data,
    get_paho_client,
)

PICO_ID = "test"
sensor_topic = f"sdl-demo/picow/{PICO_ID}/as7341/"  # to match with Pico W code

# instantiate client once and reuse to avoid opening too many connections
client = get_paho_client(sensor_topic)

sdl = SelfDrivingLabDemoLight(
    autoload=True,  # perform target data experiment automatically, default is False
    observe_sensor_data_fn=mqtt_observe_sensor_data,  # default
    observe_sensor_data_kwargs=dict(pico_id=PICO_ID, client=client),
    simulation=False,  # default
)
```

Optimization Comparison

```python
from self_driving_lab_demo.utils.search import (
    grid_search,
    random_search,
    ax_bayesian_optimization,
)

num_iter = 27

grid, grid_data = grid_search(sdl, num_iter)
random_inputs, random_data = random_search(sdl, num_iter)
best_parameters, values, experiment, model = ax_bayesian_optimization(sdl, num_iter)
```
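To see what the comparison above measures without any hardware or network connection, here is a self-contained sketch of grid and random search on a toy objective. `toy_error`, `toy_grid_search`, and `toy_random_search` are hypothetical stand-ins, not the package's own search functions, and the 0 to 255 input range is an assumption. Under that assumption, a budget of 27 evaluations over three inputs (R, G, B) corresponds to a 3 x 3 x 3 grid.

```python
import itertools
import random

TARGET = (40, 160, 90)  # hypothetical target color


def toy_error(r, g, b):
    # Euclidean distance to the target; stands in for the measured error.
    return sum((x - t) ** 2 for x, t in zip((r, g, b), TARGET)) ** 0.5


def toy_grid_search(levels_per_channel=3, low=0, high=255):
    # Evenly spaced levels per channel, then the full Cartesian product.
    step = (high - low) / (levels_per_channel - 1)
    levels = [round(low + i * step) for i in range(levels_per_channel)]
    points = list(itertools.product(levels, repeat=3))
    return points, [toy_error(*p) for p in points]


def toy_random_search(num_iter, low=0, high=255, seed=0):
    # Independent uniform draws for each channel.
    rng = random.Random(seed)
    points = [
        tuple(rng.randint(low, high) for _ in range(3)) for _ in range(num_iter)
    ]
    return points, [toy_error(*p) for p in points]


grid_points, grid_errors = toy_grid_search()
random_points, random_errors = toy_random_search(num_iter=len(grid_points))

print(len(grid_points))  # 27
print(min(grid_errors), min(random_errors))
```

Both strategies spend the same budget; they differ only in where the points are placed, which is why the comparison is made on the best error found after a fixed number of iterations.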

Visualization

```python
import plotly.express as px
import pandas as pd

# grid
grid_input_df = pd.DataFrame(grid)
grid_output_df = pd.DataFrame(grid_data)[["frechet"]]
grid_df = pd.concat([grid_input_df, grid_output_df], axis=1)
grid_df["best_so_far"] = grid_df["frechet"].cummin()

# random
random_input_df = pd.DataFrame(random_inputs, columns=["R", "G", "B"])
random_output_df = pd.DataFrame(random_data)[["frechet"]]
random_df = pd.concat([random_input_df, random_output_df], axis=1)
random_df["best_so_far"] = random_df["frechet"].cummin()

# bayes
trials = list(experiment.trials.values())
bayes_input_df = pd.DataFrame([t.arm.parameters for t in trials])
bayes_output_df = pd.Series(
    [t.objective_mean for t in trials], name="frechet"
).to_frame()
bayes_df = pd.concat([bayes_input_df, bayes_output_df], axis=1)
bayes_df["best_so_far"] = bayes_df["frechet"].cummin()

# concatenation
grid_df["type"] = "grid"
random_df["type"] = "random"
bayes_df["type"] = "bayesian"
df = pd.concat([grid_df, random_df, bayes_df], axis=0)

# plotting
px.line(df, x=df.index, y="best_so_far", color="type").update_layout(
    xaxis_title="iteration",
    yaxis_title="Best error so far",
)
```
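The "best so far" columns computed above are running minima of the error, which is what the cumulative-minimum call produces. A minimal sketch of the same idea using only the standard library, with made-up error values:

```python
from itertools import accumulate

errors = [12.0, 9.5, 11.2, 7.8, 8.1, 7.9, 5.4]  # made-up error per iteration

# Running minimum: the lowest error observed up to and including each iteration.
best_so_far = list(accumulate(errors, min))

print(best_so_far)  # [12.0, 9.5, 9.5, 7.8, 7.8, 7.8, 5.4]
```

Plotting this running minimum instead of the raw error gives a non-increasing curve, which makes the search strategies easier to compare at a given iteration.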

Example Output

Advanced Installation

PyPI

```bash
conda create -n self-driving-lab-demo python=3.10.*
conda activate self-driving-lab-demo
pip install self-driving-lab-demo
```

Local

In order to set up the necessary environment:

- review and uncomment what you need in `environment.yml` and create an environment `self-driving-lab-demo` with the help of conda: `conda env create -f environment.yml`

- activate the new environment with: `conda activate self-driving-lab-demo`

NOTE: The conda environment will have self-driving-lab-demo installed in editable mode. Some changes, e.g. in `setup.cfg`, might require you to run `pip install -e .` again.

Optional and needed only once after `git clone`:

- install several pre-commit git hooks with:

  ```bash
  pre-commit install
  # You might also want to run `pre-commit autoupdate`
  ```

  and checkout the configuration under `.pre-commit-config.yaml`. The `-n, --no-verify` flag of `git commit` can be used to deactivate pre-commit hooks temporarily.

- install nbstripout git hooks to remove the output cells of committed notebooks with:

  ```bash
  nbstripout --install --attributes notebooks/.gitattributes
  ```

  This is useful to avoid large diffs due to plots in your notebooks. A simple `nbstripout --uninstall` will revert these changes.

Then take a look into the scripts and notebooks folders.

Dependency Management & Reproducibility

- Always keep your abstract (unpinned) dependencies updated in `environment.yml` and eventually in `setup.cfg` if you want to ship and install your package via `pip` later on.

- Create concrete dependencies as `environment.lock.yml` for the exact reproduction of your environment with:

  ```bash
  conda env export -n self-driving-lab-demo -f environment.lock.yml
  ```

  For multi-OS development, consider using `--no-builds` during the export.

- Update your current environment with respect to a new `environment.lock.yml` using:

  ```bash
  conda env update -f environment.lock.yml --prune
  ```

Project Organization

AUTHORS.md <- List of developers and maintainers.

CHANGELOG.md <- Changelog to keep track of new features and fixes.

CONTRIBUTING.md <- Guidelines for contributing to this project.

Dockerfile <- Build a docker container with `docker build .`.

LICENSE.txt <- License as chosen on the command-line.

README.md <- The top-level README for developers.

configs <- Directory for configurations of model & application.

data

data/external <- Data from third party sources.

data/interim <- Intermediate data that has been transformed.

data/processed <- The final, canonical data sets for modeling.

data/raw <- The original, immutable data dump.

docs <- Directory for Sphinx documentation in rst or md.

environment.yml <- The conda environment file for reproducibility.

models <- Trained and serialized models, model predictions, or model summaries.

notebooks <- Jupyter notebooks. Naming convention is a number (for ordering), the creator's initials and a description, e.g. `1.0-fw-initial-data-exploration`.

pyproject.toml <- Build configuration. Don't change! Use `pip install -e .` to install for development or to build `tox -e build`.

references <- Data dictionaries, manuals, and all other materials.

reports <- Generated analysis as HTML, PDF, LaTeX, etc.

reports/figures <- Generated plots and figures for reports.

scripts <- Analysis and production scripts which import the actual PYTHON_PKG, e.g. train_model.

setup.cfg <- Declarative configuration of your project.

setup.py <- [DEPRECATED] Use `python setup.py develop` to install for development or `python setup.py bdist_wheel` to build.

src

src/self_driving_lab_demo <- Actual Python package where the main functionality goes.

tests <- Unit tests which can be run with `pytest`.

.coveragerc <- Configuration for coverage reports of unit tests.

.isort.cfg <- Configuration for git hook that sorts imports.

.pre-commit-config.yaml <- Configuration of pre-commit git hooks.

Note

This project has been set up using PyScaffold 4.2.3.post1.dev10+g7a0f254 and the dsproject extension 0.7.2.post1.dev3+g948a662.

Owner

- Name: Sparks/Baird Materials Informatics

- Login: sparks-baird

- Kind: organization

- Email: sterling.baird@utah.edu

- Location: United States of America

- Repositories: 63

- Profile: https://github.com/sparks-baird

Sterling Baird and Taylor Sparks Materials Informatics Projects

GitHub Events

Total

- Create event: 4

- Release event: 2

- Issues event: 17

- Watch event: 6

- Delete event: 2

- Issue comment event: 56

- Push event: 27

- Pull request review event: 2

- Pull request event: 7

- Fork event: 3

Last Year

- Create event: 4

- Release event: 2

- Issues event: 17

- Watch event: 6

- Delete event: 2

- Issue comment event: 56

- Push event: 27

- Pull request review event: 2

- Pull request event: 7

- Fork event: 3

Committers

Last synced: 9 months ago

Top Committers

| Name | Email | Commits |
|---|---|---|
| sgbaird | s****d@u****u | 912 |
| Neil-YL | y****2@g****m | 1 |
| Luthira Abeykoon | 7****a | 1 |

Committer Domains (Top 20 + Academic)

Issues and Pull Requests

Last synced: 6 months ago

All Time

- Total issues: 90

- Total pull requests: 95

- Average time to close issues: about 1 month

- Average time to close pull requests: 2 days

- Total issue authors: 12

- Total pull request authors: 4

- Average comments per issue: 1.19

- Average comments per pull request: 1.33

- Merged pull requests: 90

- Bot issues: 0

- Bot pull requests: 0

Past Year

- Issues: 15

- Pull requests: 10

- Average time to close issues: 30 days

- Average time to close pull requests: 16 days

- Issue authors: 6

- Pull request authors: 4

- Average comments per issue: 2.4

- Average comments per pull request: 2.2

- Merged pull requests: 5

- Bot issues: 0

- Bot pull requests: 0

Top Authors

Issue Authors

- sgbaird (72)

- ianfoster (4)

- kinstonwithoutg (2)

- jsmz2602 (2)

- Endocipher7 (1)

- Scienfitz (1)

- viola-m-li (1)

- sdaulton (1)

- cjbrew (1)

- jkitchin (1)

- uznab16 (1)

- shooyonekawa (1)

Pull Request Authors

- sgbaird (99)

- Luthiraa (3)

- Copilot (2)

- Neil-YL (2)

Top Labels

Issue Labels

Pull Request Labels

Packages

- Total packages: 1

- Total downloads:

  - pypi 217 last-month

- Total dependent packages: 0

- Total dependent repositories: 0

- Total versions: 31

- Total maintainers: 1

pypi.org: self-driving-lab-demo

Software and instructions for setting up and running an autonomous (self-driving) laboratory optics demo using dimmable RGB LEDs, a 10-channel spectrometer, a microcontroller, and an adaptive design algorithm.

- Homepage: https://github.com/sparks-baird/self-driving-lab-demo/

- Documentation: https://pyscaffold.org/

- License: MIT

- Latest release: 0.8.12 (published 9 months ago)

Rankings

Maintainers (1)

Dependencies

- actions/checkout v3 composite

- actions/download-artifact v3 composite

- actions/setup-python v4 composite

- actions/upload-artifact v3 composite

- coverallsapp/github-action master composite

- softprops/action-gh-release v1 composite

- condaforge/mambaforge latest build

- myst-parser *

- sphinx >=3.2.1

- acrylic *