Machine Learning Validation via Rational Dataset Sampling with astartes - Published in JOSS (2023)

Science Score: 100.0%

This score indicates how likely this project is to be science-related based on various indicators:

✓ CITATION.cff file

Found CITATION.cff file

✓ codemeta.json file

Found codemeta.json file

✓ .zenodo.json file

Found .zenodo.json file

✓ DOI references

Found 14 DOI reference(s) in README and JOSS metadata

✓ Academic publication links

Links to: scholar.google, sciencedirect.com, wiley.com, rsc.org, acs.org, joss.theoj.org, zenodo.org

✓ Committers with academic emails

3 of 4 committers (75.0%) from academic institutions

○ Institutional organization owner

✓ JOSS paper metadata

Published in Journal of Open Source Software

Repository

Better Data Splits for Machine Learning

Basic Info

- Host: GitHub

- Owner: JacksonBurns

- License: mit

- Language: Python

- Default Branch: main

- Homepage: https://jacksonburns.github.io/astartes/

- Size: 22.7 MB

Statistics

- Stars: 84

- Watchers: 2

- Forks: 5

- Open Issues: 6

- Releases: 18

Metadata Files

README.md

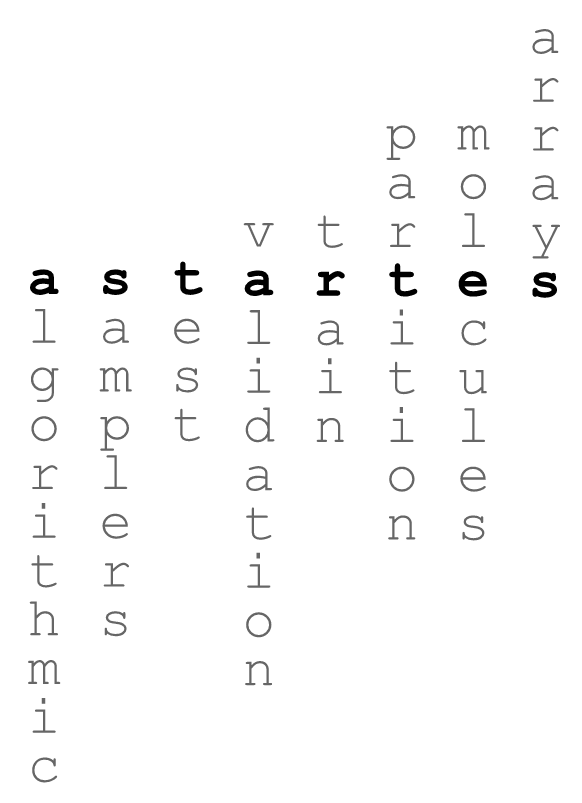

astartes

(as-tar-tees)

Train:Validation:Test Algorithmic Sampling for Molecules and Arbitrary Arrays

Online Documentation

The online documentation provides a nicely-rendered version of this README along with additional tutorials for moving from train_test_split in sklearn to astartes. Keep reading for an installation guide and links to tutorials!

Installing astartes

We recommend installing astartes within a virtual environment, using either venv or conda (or other tools) to simplify dependency management. Python versions 3.8, 3.9, 3.10, 3.11, and 3.12 are supported on all platforms.

Warning: Windows (PowerShell) and macOS Catalina or newer (zsh) require double quotes around text using the '[]' characters (e.g. pip install "astartes[molecules]").

pip

astartes is available on PyPI and can be installed using pip:

- To include the featurization options for chemical data, use pip install astartes[molecules].
- To install only the sampling algorithms, use pip install astartes (this install will have fewer dependencies and may be more readily compatible in environments with existing workflows).

conda

The astartes package is also available on conda-forge with this command: conda install -c conda-forge astartes.

To install astartes with support for featurizing molecules, use: conda install -c conda-forge astartes aimsim.

This will download the base astartes package as well as aimsim, which is the backend used for molecular featurization.

The PyPI distribution has fewer dependencies for the molecules subpackage because it uses aimsim_core instead of aimsim.

You can achieve this on conda by first running conda install -c conda-forge astartes and then pip install aimsim_core (aimsim_core is not available on conda-forge).

Source

To install astartes from source for development, see the Contributing & Developer Notes section.

Statement of Need

Machine learning has sparked an explosion of progress in chemical kinetics, materials science, and many other fields as researchers use data-driven methods to accelerate steps in traditional workflows within some acceptable error tolerance. To facilitate adoption of these models, there are two important tasks to consider:

1. Use a validation set when selecting the optimal hyperparameters for the model, and separately use a held-out test set to measure performance on unseen data.
2. Evaluate model performance on both interpolative and extrapolative tasks so future users are informed of any potential limitations.

astartes addresses both of these points by implementing an sklearn-compatible train_val_test_split function.

Additional technical detail is provided below as well as in our companion paper in the Journal of Open Source Software: Machine Learning Validation via Rational Dataset Sampling with astartes.

For a demo-based explainer using machine learning on a fast food menu, see the astartes Reproducible Notebook published at the United States Research Software Engineers Conference.

Target Audience

astartes is generally applicable to machine learning for both discovery and inference, as well as to model validation.

There are specific functions in astartes for applications in cheminformatics (astartes.molecules), but the methods implemented are general to all numerical data.

Quick Start

astartes is designed as a drop-in replacement for sklearn's train_test_split function (see the sklearn documentation). To switch to astartes, change from sklearn.model_selection import train_test_split to from astartes import train_test_split.

Like sklearn, astartes accepts any iterable object as X, y, and labels.

Each will be converted to a numpy array for internal operations, and returned as a numpy array with limited exceptions: if X is a pandas DataFrame, y is a Series, or labels is a Series, astartes will cast it back to its original type including its index and column names.

Note: The developers recommend passing X, y, and labels as numpy arrays and handling the conversion to and from other types explicitly on your own. Behind-the-scenes type casting can lead to unexpected behavior!

By default, astartes will split data randomly. Additionally, a variety of algorithmic sampling approaches can be used by specifying the sampler argument to the function (see the Table of Implemented Samplers for a complete list of options and their corresponding references):

```python
from sklearn.datasets import load_diabetes

from astartes import train_test_split

X, y = load_diabetes(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(
    X,  # preferably numpy arrays, but astartes will cast it for you
    y,
    sampler="kennard_stone",  # any of the supported samplers
)
```

Note: Extrapolation sampling algorithms will return an additional set of arrays (the cluster labels), which will result in a ValueError: too many values to unpack if not called properly. See the split_comparisons Google Colab demo for a full explanation.
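To see where the extra arrays come from, here is a minimal NumPy sketch of the idea behind an extrapolative split: whole clusters are held out, so the cluster labels travel with the data. The function cluster_holdout_split and its smallest-cluster-first rule are hypothetical illustrations, not astartes' implementation; astartes' extrapolative samplers compute the clusters themselves.

```python
import numpy as np


def cluster_holdout_split(X, cluster_labels, test_size=0.25):
    """Hypothetical sketch: move whole clusters into the test set.

    Clusters are visited from smallest to largest and added to the test set
    until at least ``test_size`` of the samples are held out. The cluster
    labels are returned next to the data arrays, which is why extrapolative
    splits produce more outputs than interpolative ones.
    """
    X = np.asarray(X)
    cluster_labels = np.asarray(cluster_labels)
    unique, counts = np.unique(cluster_labels, return_counts=True)
    n_test_target = int(np.ceil(test_size * len(X)))

    test_clusters, n_test = [], 0
    for idx in np.argsort(counts, kind="stable"):
        if n_test >= n_test_target:
            break
        test_clusters.append(unique[idx])
        n_test += int(counts[idx])

    test_mask = np.isin(cluster_labels, test_clusters)
    return X[~test_mask], X[test_mask], cluster_labels[~test_mask], cluster_labels[test_mask]


X = np.arange(16).reshape(8, 2)
labels = np.array([0, 0, 0, 1, 1, 2, 2, 2])
X_train, X_test, clusters_train, clusters_test = cluster_holdout_split(X, labels)
print(clusters_test)  # [1 1]
```

Note that no cluster ever appears on both sides of the split, which is what makes the test set out-of-sample.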

That's all you need to get started with astartes!

The next sections include more examples and some demo notebooks you can try in your browser.

Example Notebooks

Click the badges in the table below to be taken to a live, interactive demo of astartes:

| Demo | Topic | Link |
|:---:|---|---|
| Comparing Sampling Algorithms with Fast Food | Visual representations of how different samplers affect data partitioning | |
| Using train_val_test_split with the sklearn example datasets | Demonstrating how withholding a test set with train_val_test_split can impact performance | |
| Cheminformatics sample set partitioning with astartes | Extrapolation vs. Interpolation impact on cheminformatics model accuracy | |
| Comparing partitioning approaches for alkanes | Visualizing how samplers impact model performance with simple chemicals | |

To execute these notebooks locally, clone this repository (i.e. git clone https://github.com/JacksonBurns/astartes.git), navigate to the astartes directory, run pip install .[demos], then open and run the notebooks in your preferred editor.

You do not need to execute the cells prefixed with %%capture - they are only present for compatibility with Google Colab.

Packages Using astartes

- Chemprop, a machine learning library for chemical property prediction, uses astartes in the backend for splitting molecular structures.
- fastprop, a descriptor-based property prediction library, uses astartes.
- Google Scholar of articles citing the JOSS paper for astartes

Withhold Testing Data with train_val_test_split

For rigorous ML research, it is critical to withhold some data from training to serve as a test set.

The model should never see this data during training (unlike the validation set) so that we can get an accurate measurement of its performance.

With astartes, this three-way data split is readily available through train_val_test_split:

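```python
from astartes import train_val_test_split

# sphere_exclusion is an extrapolative sampler, so the cluster labels
# for each set are returned after the data arrays
X_train, X_val, X_test, clusters_train, clusters_val, clusters_test = train_val_test_split(
    X, sampler="sphere_exclusion"
)
```

You can now train your model with X_train, optimize your model with X_val, and measure its performance with X_test.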
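A three-way split only needs three disjoint index sets. The sketch below draws them at random with NumPy, assuming fractional val_size and test_size arguments; random_train_val_test_indices is a hypothetical helper, and astartes' non-random samplers choose the indices algorithmically instead.

```python
import numpy as np


def random_train_val_test_indices(n_samples, val_size=0.1, test_size=0.1, seed=42):
    """Hypothetical sketch: random, disjoint train/val/test index arrays."""
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(n_samples)
    n_test = int(round(test_size * n_samples))
    n_val = int(round(val_size * n_samples))
    # carve the shuffled order into three non-overlapping slices
    test_idx = shuffled[:n_test]
    val_idx = shuffled[n_test : n_test + n_val]
    train_idx = shuffled[n_test + n_val :]
    return train_idx, val_idx, test_idx


train_idx, val_idx, test_idx = random_train_val_test_indices(100)
```

Because the test indices are carved out first and never reused, hyperparameters can be tuned on val_idx without the model ever touching test_idx.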

Evaluate the Impact of Splitting Algorithms on Regression Models

For data with many features it can be difficult to visualize how different sampling algorithms change the distribution of data into training, validation, and testing like we do in some of the demo notebooks.

To aid in analyzing the impact of the algorithms, astartes provides generate_regression_results_dict.

This function allows users to quickly evaluate the impact of different splitting techniques on any sklearn-compatible model's performance.

All results are stored in a nested dictionary ({sampler:{metric:{split:score}}}) format and can be displayed in a neatly formatted table using the optional print_results argument.

```python
from sklearn.svm import LinearSVR

from astartes.utils import generate_regression_results_dict as grrd

sklearn_model = LinearSVR()
results_dict = grrd(
    sklearn_model,
    X,
    y,
    print_results=True,
)

#        Train      Val     Test
# MAE  1.41522  3.13435  2.17091
# RMSE 2.03062  3.73721  2.40041
# R2   0.90745  0.80787  0.78412
```
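The nested {sampler:{metric:{split:score}}} layout can be reproduced in a few lines. In this sketch the metric names ("mae", "rmse"), the split names, and the toy predictions are made up for illustration; the exact keys generate_regression_results_dict uses may differ, so check its docstring.

```python
import numpy as np


def regression_scores(y_true, y_pred):
    """MAE and RMSE for a single split."""
    err = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return {"mae": float(np.mean(np.abs(err))), "rmse": float(np.sqrt(np.mean(err**2)))}


# toy (y_true, y_pred) pairs per split, invented for illustration only
splits = {
    "train": ([1.0, 2.0, 3.0], [1.0, 2.0, 4.0]),
    "val": ([2.0, 4.0], [3.0, 3.0]),
    "test": ([5.0, 7.0], [5.0, 9.0]),
}

results = {"random": {}}  # {sampler: {metric: {split: score}}}
for split_name, (y_true, y_pred) in splits.items():
    for metric, score in regression_scores(y_true, y_pred).items():
        results["random"].setdefault(metric, {})[split_name] = score

print(results["random"]["mae"]["test"])  # 1.0
```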

Additional metrics can be passed to generate_regression_results_dict via the additional_metrics argument, which should be a dictionary mapping the name of the metric (as a string) to the function itself, like this:

```python
from sklearn.metrics import mean_absolute_percentage_error

add_met = {"mape": mean_absolute_percentage_error}

grrd(sklearn_model, X, y, additional_metrics=add_met)
```

See the docstring for generate_regression_results_dict (with help(generate_regression_results_dict)) for more information.
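Each entry in additional_metrics is presumably called like an sklearn metric, i.e. as metric(y_true, y_pred) returning a float; that calling convention is an assumption here, so confirm it in the docstring. A hypothetical custom metric following it looks like this (sklearn already ships an equivalent as sklearn.metrics.max_error):

```python
import numpy as np


def max_absolute_error(y_true, y_pred):
    """Hypothetical custom metric with the (y_true, y_pred) -> float
    signature shared by sklearn.metrics functions."""
    return float(np.max(np.abs(np.asarray(y_true) - np.asarray(y_pred))))


add_met = {"max_ae": max_absolute_error}

# every value in the mapping is called the same way as an sklearn metric
for name, metric in add_met.items():
    print(name, metric([1.0, 2.0, 3.0], [1.5, 2.0, 1.0]))  # max_ae 2.0
```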

Using astartes with Categorical Data

Any of the implemented sampling algorithms whose hyperparameters allow specifying the metric or distance_metric (effectively 1-metric) can be co-opted to work with categorical data.

Simply encode the data in a format compatible with the sklearn metric of choice and then call astartes with that metric specified:

```python
from sklearn.metrics import jaccard_score

from astartes import train_test_split

X_train, X_test, y_train, y_test = train_test_split(
    X,
    y,
    sampler="kennard_stone",
    hopts={"metric": jaccard_score},
)
```

The remaining samplers do not accept a categorical distance metric because their original formulations did not provide one, though it may be possible to adapt them for this application. If you are interested in adding support for categorical metrics to an existing sampler, consider opening a Feature Request!
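As an illustration of encoding categorical data into a metric-friendly format, the sketch below one-hot encodes sets of categories into boolean rows and computes pairwise Jaccard distances (one minus the Jaccard similarity that sklearn's jaccard_score reports for binary vectors) directly with NumPy. one_hot and jaccard_distance_matrix are hypothetical helpers, not part of astartes.

```python
import numpy as np


def one_hot(rows, categories):
    """Encode each row (a set of category names) as a boolean vector."""
    index = {c: i for i, c in enumerate(categories)}
    out = np.zeros((len(rows), len(categories)), dtype=bool)
    for r, row in enumerate(rows):
        for c in row:
            out[r, index[c]] = True
    return out


def jaccard_distance_matrix(B):
    """Pairwise Jaccard distance 1 - |a & b| / |a | b| between boolean rows."""
    inter = (B[:, None, :] & B[None, :, :]).sum(axis=2)
    union = (B[:, None, :] | B[None, :, :]).sum(axis=2)
    # two all-False rows are treated as identical (similarity 1, distance 0)
    sim = np.divide(inter, union, out=np.ones(inter.shape), where=union > 0)
    return 1.0 - sim


categories = ["red", "green", "blue", "round", "square"]
rows = [{"red", "round"}, {"red", "square"}, {"blue", "square"}]
D = jaccard_distance_matrix(one_hot(rows, categories))
```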

Access Sampling Algorithms Directly

The sampling algorithms implemented in astartes can also be directly accessed and run if it is more useful for your applications.

In the example below, we import the Kennard-Stone sampler, use it to partition a simple array, and then retrieve a sample.

```python
from astartes.samplers.interpolation import KennardStone

kennard_stone = KennardStone([[1, 2], [3, 4], [5, 6]])

first_2_samples = kennard_stone.get_sample_idxs(2)
```

All samplers in astartes implement a _sample() method that is called by the constructor (i.e. greedily) and either a get_sample_idxs or a get_cluster_idxs method for interpolative and extrapolative samplers, respectively.

For more detail on the implementation and design of samplers in astartes, see the Developer Notes section.
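To make the eager-sampling design concrete, here is a hypothetical, stripped-down sampler class: _sample() does all the work in the constructor and get_sample_idxs(n) just slices the stored ordering. The selection rule is a plain Kennard-Stone pass with Euclidean distance (start from the two most distant points, then repeatedly add the point farthest from everything already chosen). ToyKennardStone is not astartes' class, and its ordering is not guaranteed to match astartes' output.

```python
import numpy as np


class ToyKennardStone:
    """Hypothetical, simplified stand-in for an interpolative sampler."""

    def __init__(self, X):
        self.X = np.asarray(X, dtype=float)
        # sampling happens eagerly, at construction time
        self._samples_idxs = self._sample()

    def _sample(self):
        X = self.X  # assumes at least two distinct points
        dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
        # start from the two most distant points
        first, second = np.unravel_index(np.argmax(dist), dist.shape)
        selected = [int(first), int(second)]
        remaining = [i for i in range(len(X)) if i not in selected]
        while remaining:
            # distance from each remaining point to its nearest selected point
            nearest = dist[np.ix_(remaining, selected)].min(axis=1)
            # add the remaining point that is farthest from the selected set
            selected.append(remaining.pop(int(np.argmax(nearest))))
        return np.array(selected)

    def get_sample_idxs(self, n_samples):
        return self._samples_idxs[:n_samples]


sampler = ToyKennardStone([[1, 2], [3, 4], [5, 6]])
print(sampler.get_sample_idxs(2))  # [0 2]
```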

Theory and Application of astartes

This section of the README details some of the theory behind why the algorithms implemented in astartes are important and some motivating examples.

For a comprehensive walkthrough of the theory and implementation of astartes, follow this link to read the companion paper (freely available and hosted here on GitHub).

Note: We reference open-access publications wherever possible. For articles locked behind a paywall (denoted with 🔹), we instead suggest reading this Wikipedia page and absolutely not attempting to bypass the paywall.

Rational Splitting Algorithms

While much machine learning is done with a random choice between training/validation/test data, an alternative is the use of so-called "rational" splitting algorithms. These approaches use some similarity-based algorithm to divide data into sets. Some of these algorithms include Kennard-Stone (Kennard & Stone 🔹), Sphere Exclusion (Tropsha et al. 🔹), as well as OptiSim as discussed in Applied Chemoinformatics: Achievements and Future Opportunities 🔹. Some clustering-based splitting techniques have also been incorporated, such as DBSCAN.
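Sphere exclusion is one of the similarity-based approaches named above. The sketch below is a generic, leader-style version with Euclidean distance: the first unassigned point becomes a cluster center and every unassigned point within distance_cutoff joins it. It is not astartes' custom implementation; only the name distance_cutoff is borrowed from the sampler table. The resulting labels could then be assigned whole to training or testing to create an extrapolative split.

```python
import numpy as np


def sphere_exclusion_clusters(X, distance_cutoff):
    """Generic sphere-exclusion clustering sketch (not astartes' variant)."""
    X = np.asarray(X, dtype=float)
    labels = np.full(len(X), -1)  # -1 marks "not yet assigned"
    next_label = 0
    for center in range(len(X)):
        if labels[center] != -1:
            continue
        dist = np.linalg.norm(X - X[center], axis=1)
        # every still-unassigned point inside the sphere joins this cluster
        members = (dist <= distance_cutoff) & (labels == -1)
        labels[members] = next_label
        next_label += 1
    return labels


X = np.array([[0.0, 0.0], [0.5, 0.0], [5.0, 5.0], [5.2, 5.1], [10.0, 0.0]])
print(sphere_exclusion_clusters(X, distance_cutoff=1.0))  # [0 0 1 1 2]
```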

There are two broad categories of sampling algorithms implemented in astartes: extrapolative and interpolative.

The former will force your model to predict on out-of-sample data, which creates a more challenging task than interpolative sampling.
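A minimal way to see the difference: sort the data on the regression target and hold out the largest values, so every test target lies outside the range seen in training. target_property_split is a hypothetical helper in the spirit of the 'target_property' sampler; which end of the sorted order astartes holds out, and how its descending option changes that, is not assumed here.

```python
import numpy as np


def target_property_split(X, y, test_size=0.2):
    """Hypothetical sketch: hold out the samples with the largest targets."""
    X, y = np.asarray(X), np.asarray(y, dtype=float)
    order = np.argsort(y, kind="stable")  # ascending by target value
    n_train = len(y) - int(round(test_size * len(y)))
    train_idx, test_idx = order[:n_train], order[n_train:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]


X = np.arange(10).reshape(10, 1)
y = np.array([3.0, 9.0, 1.0, 7.0, 5.0, 0.0, 8.0, 2.0, 6.0, 4.0])
X_train, X_test, y_train, y_test = target_property_split(X, y)
print(y_train.max(), y_test.min())  # 7.0 8.0
```

A random split of the same data would almost certainly put some large targets in training, letting the model interpolate; here it must extrapolate.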

See the table below for all of the sampling approaches currently implemented in astartes, as well as the hyperparameters that each algorithm accepts (which are passed in with hopts) and a helpful reference for understanding how the hyperparameters work.

Note that random_state is defined as a keyword argument in train_test_split itself, even though these algorithms will use the random_state in their own work.

Do not provide a random_state in the hopts dictionary - it will be overwritten by the random_state you provide for train_test_split (or the default if none is provided).
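The precedence rule can be written as a plain dictionary merge in which the function argument is applied last. build_sampler_configs is a hypothetical name used only for illustration, not part of astartes.

```python
def build_sampler_configs(hopts, random_state=42):
    """Hypothetical helper: random_state always wins over any value in hopts."""
    # later keys override earlier ones, so the function argument is applied last
    return {**(hopts or {}), "random_state": random_state}


configs = build_sampler_configs({"n_clusters": 5, "random_state": 0}, random_state=42)
print(configs)  # {'n_clusters': 5, 'random_state': 42}
```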

Implemented Sampling Algorithms

| Sampler Name | Usage String | Type | Hyperparameters | Reference | Notes |
|:---:|---|---|---|---|---|
| Random | 'random' | Interpolative | shuffle | sklearn train_test_split Documentation | This sampler is a direct passthrough to sklearn's train_test_split. |
| Kennard-Stone | 'kennard_stone' | Interpolative | metric | Original Paper by Kennard & Stone 🔹 | Euclidean distance is used by default, as described in the original paper. |
| Sample set Partitioning based on joint X-Y distances (SPXY) | 'spxy' | Interpolative | distance_metric, distance_metric_X, distance_metric_y (*) | Saldanha et al. [original paper](https://www.sciencedirect.com/science/article/abs/pii/S003991400500192X) 🔹 | Extension of Kennard-Stone that also includes the response when computing sampling distances. |
| Mahalanobis Distance Kennard Stone (MDKS) | 'spxy' _(MDKS is derived from SPXY)_ | Interpolative | _none, see Notes_ | Saptoro et al. [original paper](https://espace.curtin.edu.au/bitstream/handle/20.500.11937/45101/217844_70585_PUB-SE-DCE-FM-71008.pdf?sequence=2&isAllowed=y) | MDKS is SPXY using Mahalanobis distance and can be called by using SPXY with distance_metric="mahalanobis". |
| Scaffold | 'scaffold' | Extrapolative | include_chirality | [Bemis-Murcko Scaffold](https://pubs.acs.org/doi/full/10.1021/jm9602928) 🔹 as implemented in RDKit | This sampler requires SMILES strings as input (use the molecules subpackage). |
| Molecular Weight | 'molecular_weight' | Extrapolative | _none_ | ~ | Sorts molecules by molecular weight as calculated by RDKit. |
| Sphere Exclusion | 'sphere_exclusion' | Extrapolative | metric, distance_cutoff | _custom implementation_ | Variation on Sphere Exclusion for arbitrary-valued vectors. |
| Time Based | 'time_based' | Extrapolative | _none_ | Papers using time-based splitting: Chen et al. 🔹, Sheridan, R. P. 🔹, Feinberg et al. 🔹, Struble et al. | This sampler requires labels to be an iterable of either date or datetime objects. |
| Target Property | 'target_property' | Extrapolative | descending | ~ | Sorts data by regression target y. |
| Optimizable K-Dissimilarity Selection (OptiSim) | 'optisim' | Extrapolative | n_clusters, max_subsample_size, distance_cutoff | _custom implementation_ | Variation on OptiSim for arbitrary-valued vectors. |
| K-Means | 'kmeans' | Extrapolative | n_clusters, n_init | sklearn KMeans | Passthrough to sklearn's KMeans. |
| Density-Based Spatial Clustering of Applications with Noise (DBSCAN) | 'dbscan' | Extrapolative | eps, min_samples, algorithm, metric, leaf_size | sklearn DBSCAN Documentation | Passthrough to sklearn's DBSCAN. |
| Minimum Test Set Dissimilarity (MTSD) | ~ | ~ | upcoming in astartes v1.x | ~ | ~ |
| Restricted Boltzmann Machine (RBM) | ~ | ~ | upcoming in astartes v1.x | ~ | ~ |
| Kohonen Self-Organizing Map (SOM) | ~ | ~ | upcoming in astartes v1.x | ~ | ~ |
| SPlit Method | ~ | ~ | upcoming in astartes v1.x | ~ | ~ |

(*) specifying distance_metric_X or distance_metric_y will override the choice of distance_metric
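For reference, the joint distance in the original SPXY paper sums the X-distance and y-distance matrices after scaling each by its maximum, and Kennard-Stone selection then runs on that combined matrix. The NumPy sketch below assumes Euclidean distance for both parts; in astartes the per-part metrics are presumably what distance_metric_X and distance_metric_y control. spxy_distance_matrix is a hypothetical helper.

```python
import numpy as np


def spxy_distance_matrix(X, y):
    """Joint X-y distance: each Euclidean distance matrix is scaled by its
    maximum before the two are summed (assumes not all X, nor all y, are identical)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float).reshape(len(X), -1)
    d_x = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    d_y = np.linalg.norm(y[:, None, :] - y[None, :, :], axis=-1)
    return d_x / d_x.max() + d_y / d_y.max()


D = spxy_distance_matrix([[0.0], [1.0], [2.0]], [0.0, 4.0, 2.0])
```

Each scaled part lies in [0, 1], so neither the features nor the response dominates the combined distance purely because of its units.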

Domain-Specific Applications

Below are some field specific applications of astartes. Interested in adding a new sampling algorithm or featurization approach? See CONTRIBUTING.md.

Chemical Data and the astartes.molecules Subpackage

Machine Learning is enormously useful in chemistry-related fields due to the high-dimensional feature space of chemical data.

To properly apply ML to chemical data for inference or discovery, it is important to know a model's accuracy in both of these domains.

To simplify the process of partitioning chemical data, astartes implements a pre-built featurizer for common chemistry data formats.

After installing with pip install astartes[molecules] one can import the new train/test splitting function like this: from astartes.molecules import train_test_split_molecules

The usage of this function is identical to train_test_split but with the addition of new arguments to control how the molecules are featurized:

```python
train_test_split_molecules(
    molecules=smiles,
    y=y,
    test_size=0.2,
    train_size=0.8,
    fingerprint="daylight_fingerprint",
    fprints_hopts={
        "fpSize": 200,
        "numBitsPerFeature": 4,
        "useHs": True,
    },
    sampler="random",
    random_state=42,
    hopts={
        "shuffle": True,
    },
)
```

To see a complete example of using train_test_split_molecules with actual chemical data, take a look in the examples directory and the brief companion paper.

Configuration options for the featurization scheme can be found in the documentation for AIMSim though most of the critical configuration options are shown above.

Reproducibility

astartes aims to be completely reproducible across different platforms, Python versions, and dependency configurations - any version of astartes v1.x should result in the exact same splits, always.

To that end, the default behavior of astartes is to use 42 as the random seed and always set it.

Running astartes with the default settings will always produce the exact same results.

We have verified this behavior on Debian Ubuntu, Windows, and Intel Macs from Python versions 3.7 through 3.11 (with appropriate dependencies for each version).
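The mechanism behind this guarantee is a fixed seed: a seeded generator produces the same permutation on every run. The sketch uses NumPy's default_rng with the same default of 42; shuffled_indices is hypothetical, and astartes' internal seeding may differ.

```python
import numpy as np


def shuffled_indices(n_samples, random_state=42):
    """Same seed, same permutation, on every call."""
    return np.random.default_rng(random_state).permutation(n_samples)


# two independent calls with the default seed agree exactly
assert np.array_equal(shuffled_indices(10), shuffled_indices(10))
```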

Known Reproducibility Limitations

Inevitably, external dependencies of astartes will introduce backwards-incompatible changes.

We continually run regression tests to catch these, and will list all known limitations here:

- sklearn v1.3.0 introduced backwards-incompatible changes in KMeans that changed how the random initialization affects the results of the KMeans sampler, even given the same random seed. Different versions of sklearn will affect the performance of astartes, and we recommend reporting the exact versions of scikit-learn and astartes used, when applicable.

Note: We are limited in our ability to test on M1 Macs, but in our limited manual testing we achieve perfect reproducibility in all cases except, occasionally, with KMeans on Apple silicon. astartes is still consistent between runs on the same platform in all cases, and other samplers are not impacted by this apparent bug.

How to Cite

If you use astartes in your work please follow the link below to our (Open Access!) paper in the Journal of Open Source Software or use the "Cite this repository" button on GitHub.

Machine Learning Validation via Rational Dataset Sampling with astartes

Contributing & Developer Notes

See CONTRIBUTING.md for instructions on installing astartes for development, making a contribution, and general guidance on the design of astartes.

Owner

- Name: Jackson Burns

- Login: JacksonBurns

- Kind: user

- Location: MIT

- Website: jacksonwarnerburns.com

- Twitter: WowThatBurns

- Repositories: 41

- Profile: https://github.com/JacksonBurns

Chemical Engineering and Computation Researcher @ MIT

JOSS Publication

Machine Learning Validation via Rational Dataset Sampling with astartes

Authors

Center for Computational Science and Engineering, Massachusetts Institute of Technology, Department of Chemical Engineering, Massachusetts Institute of Technology, United States

Department of Chemical Engineering, Massachusetts Institute of Technology, United States

Department of Chemical and Biomolecular Engineering, University of Delaware, United States

Tags

machine learning sampling interpolation extrapolation data splits cheminformatics

Citation (CITATION.cff)

cff-version: "1.2.0"
authors:
- family-names: Burns
  given-names: Jackson W.
  orcid: "https://orcid.org/0000-0002-0657-9426"
- family-names: Spiekermann
  given-names: Kevin A.
  orcid: "https://orcid.org/0000-0002-9484-9253"
- family-names: Bhattacharjee
  given-names: Himaghna
  orcid: "https://orcid.org/0000-0002-6598-3939"
- family-names: Vlachos
  given-names: Dionisios G.
  orcid: "https://orcid.org/0000-0002-6795-8403"
- family-names: Green
  given-names: William H.
  orcid: "https://orcid.org/0000-0003-2603-9694"
contact:
- family-names: Burns
  given-names: Jackson W.
  orcid: "https://orcid.org/0000-0002-0657-9426"
doi: 10.5281/zenodo.8147205
message: If you use this software, please cite our article in the
  Journal of Open Source Software.
preferred-citation:
  authors:
  - family-names: Burns
    given-names: Jackson W.
    orcid: "https://orcid.org/0000-0002-0657-9426"
  - family-names: Spiekermann
    given-names: Kevin A.
    orcid: "https://orcid.org/0000-0002-9484-9253"
  - family-names: Bhattacharjee
    given-names: Himaghna
    orcid: "https://orcid.org/0000-0002-6598-3939"
  - family-names: Vlachos
    given-names: Dionisios G.
    orcid: "https://orcid.org/0000-0002-6795-8403"
  - family-names: Green
    given-names: William H.
    orcid: "https://orcid.org/0000-0003-2603-9694"
  date-published: 2023-11-05
  doi: 10.21105/joss.05996
  issn: 2475-9066
  issue: 91
  journal: Journal of Open Source Software
  publisher:
    name: Open Journals
  start: 5996
  title: Machine Learning Validation via Rational Dataset Sampling with
    astartes
  type: article
  url: "https://joss.theoj.org/papers/10.21105/joss.05996"
  volume: 8
title: Machine Learning Validation via Rational Dataset Sampling with
  `astartes`

GitHub Events

Total

- Create event: 4

- Release event: 2

- Issues event: 5

- Watch event: 20

- Delete event: 3

- Issue comment event: 1

- Push event: 15

- Pull request review event: 1

- Pull request event: 4

- Fork event: 3

Last Year

- Create event: 4

- Release event: 2

- Issues event: 5

- Watch event: 20

- Delete event: 3

- Issue comment event: 1

- Push event: 15

- Pull request review event: 1

- Pull request event: 4

- Fork event: 3

Committers

Last synced: 6 months ago

Top Committers

| Name | Email | Commits |
|---|---|---|
| Jackson Burns | j****s@m****u | 476 |
| Kevin Spiekermann | k****r@m****u | 81 |
| github-actions | g****s@g****m | 15 |
| himaghna | h****a@u****u | 12 |

Issues and Pull Requests

Last synced: 6 months ago

All Time

- Total issues: 45

- Total pull requests: 79

- Average time to close issues: 4 months

- Average time to close pull requests: 10 days

- Total issue authors: 9

- Total pull request authors: 2

- Average comments per issue: 1.53

- Average comments per pull request: 0.7

- Merged pull requests: 74

- Bot issues: 0

- Bot pull requests: 0

Past Year

- Issues: 3

- Pull requests: 5

- Average time to close issues: 20 days

- Average time to close pull requests: about 11 hours

- Issue authors: 3

- Pull request authors: 1

- Average comments per issue: 0.33

- Average comments per pull request: 0.0

- Merged pull requests: 4

- Bot issues: 0

- Bot pull requests: 0

Top Authors

Issue Authors

- JacksonBurns (27)

- ericchen384 (4)

- KnathanM (2)

- kspieks (2)

- pikakolendo02 (2)

- kevingreenman (2)

- Estefano13 (1)

- PatWalters (1)

- himaghna (1)

Pull Request Authors

- JacksonBurns (58)

- kspieks (27)

Packages

- Total packages: 1

Total downloads:

- pypi 17,191 last-month

- Total dependent packages: 0

- Total dependent repositories: 1

- Total versions: 23

- Total maintainers: 1

pypi.org: astartes

Train:Test Algorithmic Sampling for Molecules and Arbitrary Arrays

- Homepage: https://github.com/JacksonBurns/astartes

- Documentation: https://astartes.readthedocs.io/

- License: MIT

Latest release: 1.3.2

published 7 months ago

Dependencies

- actions/checkout v3 composite

- actions/setup-python v4 composite

- actions/checkout v3 composite

- andrewmusgrave/automatic-pull-request-review 0.0.5 composite

- conda-incubator/setup-miniconda v2 composite

- actions/checkout v3 composite

- actions/setup-python v4 composite

- actions/checkout v3 composite

- conda-incubator/setup-miniconda v2 composite

- actions/checkout v3 composite

- mamba-org/setup-micromamba main composite

- actions/checkout master composite

- actions/setup-python v3 composite

- pypa/gh-action-pypi-publish release/v1 composite

- actions/checkout v3 composite

- actions/upload-artifact v3 composite

- mamba-org/setup-micromamba main composite

- actions/checkout v3 composite

- mamba-org/setup-micromamba main composite

- actions/checkout master composite

- devmasx/merge-branch master composite

- numpy *

- pandas *

- scikit_learn *

- scipy *

- tabulate *