pytorch-lightning

Pretrain, finetune ANY AI model of ANY size on multiple GPUs, TPUs with zero code changes.

Science Score: 54.0%

This score indicates how likely this project is to be science-related based on various indicators:

✓ CITATION.cff file

Found CITATION.cff file

✓ codemeta.json file

Found codemeta.json file

✓ .zenodo.json file

Found .zenodo.json file

○ DOI references

○ Academic publication links

✓ Committers with academic emails

48 of 977 committers (4.9%) from academic institutions

○ Institutional organization owner

○ JOSS paper metadata

○ Scientific vocabulary similarity

Low similarity (14.5%) to scientific vocabulary


Repository


Basic Info

- Host: GitHub

- Owner: Lightning-AI

- License: apache-2.0

- Language: Python

- Default Branch: master

- Homepage: https://lightning.ai/pytorch-lightning

- Size: 125 MB

Statistics

- Stars: 30,048

- Watchers: 254

- Forks: 3,556

- Open Issues: 975

- Releases: 0


Metadata Files

README.md

**The deep learning framework to pretrain, finetune and deploy AI models.**

**NEW: Deploying models? Check out [LitServe](https://github.com/Lightning-AI/litserve), the PyTorch Lightning for model serving.**



Why PyTorch Lightning?

Training models in plain PyTorch is tedious and error-prone: you have to manually handle things like backprop, mixed precision, multi-GPU, and distributed training, often rewriting code for every new project. PyTorch Lightning organizes PyTorch code to automate those complexities so you can focus on your model and data, while keeping full control and scaling from CPU to multi-node without changing your core code. If you want to manage any of those details yourself, you can still opt into a more hands-on, do-it-yourself approach.

Fun analogy: if PyTorch is JavaScript, PyTorch Lightning is React or Next.js.

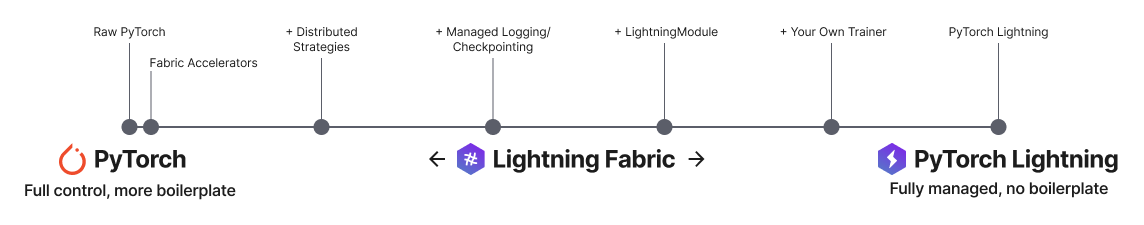

Lightning has two core packages:

PyTorch Lightning: Train and deploy PyTorch at scale.

Lightning Fabric: Expert control.

Lightning gives you granular control over how much abstraction you want to add over PyTorch.

Quick start

Install Lightning:

```bash
pip install lightning
```

Advanced install options

#### Install with optional dependencies

```bash
pip install "lightning[extra]"
```

#### Conda

```bash
conda install lightning -c conda-forge
```

#### Install stable version

Install the future release from source:

```bash
pip install https://github.com/Lightning-AI/lightning/archive/refs/heads/release/stable.zip -U
```

#### Install bleeding-edge

Install nightly from source (no guarantees):

```bash
pip install https://github.com/Lightning-AI/lightning/archive/refs/heads/master.zip -U
```

or from testing PyPI:

```bash
pip install -U -i https://test.pypi.org/simple/ pytorch-lightning
```

PyTorch Lightning example

Define the training workflow. Here's a toy example (real-world examples are listed in the Examples section below):

```python
# main.py
# ! pip install torchvision
import torch, torch.nn as nn, torch.utils.data as data, torchvision as tv, torch.nn.functional as F
import lightning as L

# --------------------------------
# Step 1: Define a LightningModule
# --------------------------------
# A LightningModule (nn.Module subclass) defines a full system
# (e.g. an LLM, diffusion model, autoencoder, or simple image classifier).


class LitAutoEncoder(L.LightningModule):
    def __init__(self):
        super().__init__()
        self.encoder = nn.Sequential(nn.Linear(28 * 28, 128), nn.ReLU(), nn.Linear(128, 3))
        self.decoder = nn.Sequential(nn.Linear(3, 128), nn.ReLU(), nn.Linear(128, 28 * 28))

    def forward(self, x):
        # in Lightning, forward defines the prediction/inference actions
        embedding = self.encoder(x)
        return embedding

    def training_step(self, batch, batch_idx):
        # training_step defines the train loop. It is independent of forward
        x, _ = batch
        x = x.view(x.size(0), -1)  # flatten 28x28 images into 784-dim vectors
        z = self.encoder(x)
        x_hat = self.decoder(z)
        loss = F.mse_loss(x_hat, x)
        self.log("train_loss", loss)
        return loss

    def configure_optimizers(self):
        optimizer = torch.optim.Adam(self.parameters(), lr=1e-3)
        return optimizer


# -------------------
# Step 2: Define data
# -------------------
dataset = tv.datasets.MNIST(".", download=True, transform=tv.transforms.ToTensor())
train, val = data.random_split(dataset, [55000, 5000])

# -------------------
# Step 3: Train
# -------------------
autoencoder = LitAutoEncoder()
trainer = L.Trainer()
trainer.fit(autoencoder, data.DataLoader(train), data.DataLoader(val))
```

Run the model in your terminal:

```bash
pip install torchvision
python main.py
```
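The `data.random_split(dataset, [55000, 5000])` call in Step 2 partitions MNIST's 60,000 training images into disjoint train and validation subsets, so the integer lengths must add up to the dataset size. The dependency-free sketch below only illustrates that idea (shuffle the indices, then cut them into consecutive chunks); it is not PyTorch's implementation, and the helper name `split_indices` is hypothetical.

```python
import random
from itertools import accumulate


def split_indices(n_items, lengths, seed=0):
    """Hypothetical helper: shuffle 0..n_items-1 and cut the result into disjoint chunks."""
    if sum(lengths) != n_items:
        raise ValueError("lengths must add up to the number of items")
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # seeded, so the split is reproducible
    ends = list(accumulate(lengths))  # e.g. [55000, 60000]
    starts = [end - length for end, length in zip(ends, lengths)]
    return [indices[start:end] for start, end in zip(starts, ends)]


train_idx, val_idx = split_indices(60_000, [55_000, 5_000])
print(len(train_idx), len(val_idx))  # 55000 5000
print(len(set(train_idx) & set(val_idx)))  # 0: no image lands in both subsets
```

In PyTorch itself, passing `generator=torch.Generator().manual_seed(42)` to `random_split` plays the role of the `seed` argument here and keeps the split the same across runs.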

Why PyTorch Lightning?

PyTorch Lightning is just organized PyTorch: Lightning disentangles PyTorch code to decouple the science from the engineering.
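One way to picture "decoupling the science from the engineering" is the toy, dependency-free sketch below. `ToyModule` holds only the research code (what one step computes and which learning rate to use), while `ToyTrainer` owns the loop and bookkeeping. The hook names mirror `training_step` and `configure_optimizers`, but everything else is a simplified assumption: there is no autograd, so the gradient is derived by hand, and this is not how Lightning's `Trainer` is implemented.

```python
class ToyModule:
    """The "science": fit y = w * x by least squares (hook names mirror LightningModule)."""

    def __init__(self):
        self.w = 0.0

    def training_step(self, batch):
        x, y = batch
        error = self.w * x - y
        loss = error ** 2
        grad = 2 * error * x  # d(loss)/dw, written by hand because there is no autograd here
        return loss, grad

    def configure_optimizers(self):
        return 0.05  # learning rate for plain SGD


class ToyTrainer:
    """The "engineering": epochs, the update rule and loss logging live here, not in the model."""

    def __init__(self, max_epochs):
        self.max_epochs = max_epochs
        self.epoch_losses = []

    def fit(self, module, batches):
        lr = module.configure_optimizers()
        for _ in range(self.max_epochs):
            total = 0.0
            for batch in batches:
                loss, grad = module.training_step(batch)
                module.w -= lr * grad  # optimizer step
                total += loss
            self.epoch_losses.append(total / len(batches))


model = ToyModule()
trainer = ToyTrainer(max_epochs=20)
trainer.fit(model, [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)])
print(round(model.w, 4))  # 2.0
```

Swapping `ToyTrainer` for a different loop (more epochs, another update rule, extra logging) requires no change to `ToyModule`, which is the separation the paragraph above describes.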

Examples

Explore various types of training possible with PyTorch Lightning. Pretrain and finetune ANY kind of model to perform ANY task like classification, segmentation, summarization and more:

| Task | Description |
|---|---|
| Hello world | Pretrain - Hello world example |
| Image segmentation | Finetune - ResNet-50 model to segment images |
| Object detection | Finetune - Faster R-CNN model to detect objects |
| Text summarization | Finetune - text summarization (Hugging Face transformer model) |
| Audio generation | Finetune - audio generator (transformer model) |
| LLM finetuning | Finetune - LLM (Meta Llama 3.1 8B) |

Advanced features

Lightning has more than 40 advanced features designed for professional AI research at scale.

Here are some examples:

Train on 1000s of GPUs without code changes

```python
# 8 GPUs
# no code changes needed
trainer = Trainer(accelerator="gpu", devices=8)

# 256 GPUs
trainer = Trainer(accelerator="gpu", devices=8, num_nodes=32)
```

Train on other accelerators like TPUs without code changes

```python
# no code changes needed
trainer = Trainer(accelerator="tpu", devices=8)
```

16-bit precision

```python
# no code changes needed
trainer = Trainer(precision="16-mixed")
```

Experiment managers

```python
from lightning.pytorch import loggers

# tensorboard
trainer = Trainer(logger=loggers.TensorBoardLogger("logs/"))

# weights and biases
trainer = Trainer(logger=loggers.WandbLogger())

# comet
trainer = Trainer(logger=loggers.CometLogger())

# mlflow
trainer = Trainer(logger=loggers.MLFlowLogger())

# neptune
trainer = Trainer(logger=loggers.NeptuneLogger())

# ... and more
```

Early Stopping

```python
from lightning.pytorch.callbacks import EarlyStopping

es = EarlyStopping(monitor="val_loss")
trainer = Trainer(callbacks=[es])
```

Checkpointing

```python
from lightning.pytorch.callbacks import ModelCheckpoint

checkpointing = ModelCheckpoint(monitor="val_loss")
trainer = Trainer(callbacks=[checkpointing])
```

Export to TorchScript (JIT) (production use)

```python
# torchscript
autoencoder = LitAutoEncoder()
torch.jit.save(autoencoder.to_torchscript(), "model.pt")
```

Export to ONNX (production use)

```python
import os
import tempfile

import torch

# onnx
with tempfile.NamedTemporaryFile(suffix=".onnx", delete=False) as tmpfile:
    autoencoder = LitAutoEncoder()
    # forward() runs the encoder, which expects flattened 28 x 28 images
    input_sample = torch.randn((1, 28 * 28))
    autoencoder.to_onnx(tmpfile.name, input_sample, export_params=True)
    assert os.path.isfile(tmpfile.name)
```
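Both `EarlyStopping(monitor="val_loss")` and `ModelCheckpoint(monitor="val_loss")` above react to a logged metric, so `val_loss` has to be logged (for example with `self.log("val_loss", loss)` in a `validation_step`; the toy autoencoder only logs `train_loss`). The plain-Python sketch below shows the kind of bookkeeping involved, assuming a lower-is-better metric: stop after `patience` consecutive epochs without improvement (Lightning's `EarlyStopping` defaults to `patience=3`), and keep the best `k` epochs (compare `save_top_k` on `ModelCheckpoint`). The function names are hypothetical and this is a simplified model, not Lightning's callback code.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Hypothetical helper: index of the epoch at which training would stop, or None."""
    best = float("inf")
    wait = 0  # epochs in a row without improvement
    for epoch, value in enumerate(val_losses):
        if value < best - min_delta:  # improved by more than min_delta
            best, wait = value, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


def top_k_epochs(val_losses, k=1):
    """Hypothetical helper: epochs whose checkpoints would remain, best (lowest loss) first."""
    ranked = sorted(range(len(val_losses)), key=lambda epoch: val_losses[epoch])
    return ranked[:k]


history = [0.90, 0.70, 0.65, 0.66, 0.67, 0.64, 0.68, 0.69, 0.70]
print(early_stopping_epoch(history))  # 8
print(top_k_epochs(history, k=2))  # [5, 2]
```

The improvement at epoch 5 resets the patience counter, which is why training in this sketch runs until epoch 8 instead of stopping at epoch 5.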

Lightning Fabric: Expert control

Run on any device at any scale with expert-level control over the PyTorch training loop and scaling strategy. You can even write your own Trainer.

Fabric is designed for the most complex models and workloads, such as foundation model scaling, LLMs, diffusion models, transformers, reinforcement learning, and active learning, at any size.

What to change:

```diff
+ import lightning as L
  import torch; import torchvision as tv

  dataset = tv.datasets.CIFAR10("data", download=True,
                                train=True,
                                transform=tv.transforms.ToTensor())

+ fabric = L.Fabric()
+ fabric.launch()

  model = tv.models.resnet18()
  optimizer = torch.optim.SGD(model.parameters(), lr=0.001)
- device = "cuda" if torch.cuda.is_available() else "cpu"
- model.to(device)
+ model, optimizer = fabric.setup(model, optimizer)

  dataloader = torch.utils.data.DataLoader(dataset, batch_size=8)
+ dataloader = fabric.setup_dataloaders(dataloader)

  model.train()
  num_epochs = 10
  for epoch in range(num_epochs):
      for batch in dataloader:
          inputs, labels = batch
-         inputs, labels = inputs.to(device), labels.to(device)
          optimizer.zero_grad()
          outputs = model(inputs)
          loss = torch.nn.functional.cross_entropy(outputs, labels)
-         loss.backward()
+         fabric.backward(loss)
          optimizer.step()
          print(loss.data)
```

Resulting Fabric code (copy me!):

```python
import lightning as L
import torch; import torchvision as tv

dataset = tv.datasets.CIFAR10("data", download=True,
                              train=True,
                              transform=tv.transforms.ToTensor())

fabric = L.Fabric()
fabric.launch()

model = tv.models.resnet18()
optimizer = torch.optim.SGD(model.parameters(), lr=0.001)
model, optimizer = fabric.setup(model, optimizer)

dataloader = torch.utils.data.DataLoader(dataset, batch_size=8)
dataloader = fabric.setup_dataloaders(dataloader)

model.train()
num_epochs = 10
for epoch in range(num_epochs):
    for batch in dataloader:
        inputs, labels = batch
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = torch.nn.functional.cross_entropy(outputs, labels)
        fabric.backward(loss)
        optimizer.step()
        print(loss.data)
```

Key features

Easily switch from running on CPU to GPU (Apple Silicon, CUDA, …), TPU, multi-GPU or even multi-node training

```python
from lightning import Fabric

# Use your available hardware
# no code changes needed
fabric = Fabric()

# Run on GPUs (CUDA or MPS)
fabric = Fabric(accelerator="gpu")

# 8 GPUs
fabric = Fabric(accelerator="gpu", devices=8)

# 256 GPUs, multi-node
fabric = Fabric(accelerator="gpu", devices=8, num_nodes=32)

# Run on TPUs
fabric = Fabric(accelerator="tpu")
```

Use state-of-the-art distributed training strategies (DDP, FSDP, DeepSpeed) and mixed precision out of the box

```python
# Use state-of-the-art distributed training techniques
fabric = Fabric(strategy="ddp")
fabric = Fabric(strategy="deepspeed")
fabric = Fabric(strategy="fsdp")

# Switch the precision
fabric = Fabric(precision="16-mixed")
fabric = Fabric(precision="64-true")
```

All the device logic boilerplate is handled for you

```diff
  # no more of this!
- model.to(device)
- batch.to(device)
```

Build your own custom Trainer using Fabric primitives for training, checkpointing, logging, and more

```python
import lightning as L


class MyCustomTrainer:
    def __init__(self, accelerator="auto", strategy="auto", devices="auto", precision="32-true"):
        self.fabric = L.Fabric(accelerator=accelerator, strategy=strategy, devices=devices, precision=precision)

    def fit(self, model, optimizer, dataloader, loss_fn, max_epochs):
        self.fabric.launch()

        model, optimizer = self.fabric.setup(model, optimizer)
        dataloader = self.fabric.setup_dataloaders(dataloader)

        model.train()
        for epoch in range(max_epochs):
            for batch in dataloader:
                inputs, target = batch
                optimizer.zero_grad()
                output = model(inputs)
                # loss_fn is supplied by the caller, e.g. torch.nn.functional.cross_entropy
                loss = loss_fn(output, target)
                self.fabric.backward(loss)
                optimizer.step()
```

You can find a more extensive example in our [examples](examples/fabric/build_your_own_trainer).
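With `devices=8, num_nodes=32` (used with both `Trainer` and `Fabric` above), a distributed strategy such as DDP runs one process per device, so 8 × 32 = 256 processes in total. The sketch below assumes the convention `global_rank = node_rank * devices + local_rank` for numbering those processes; it is plain arithmetic for illustration, and the helper name `process_ranks` is hypothetical rather than part of Lightning's API.

```python
def process_ranks(devices, num_nodes):
    """Hypothetical helper: map every (node_rank, local_rank) pair to a global rank."""
    world_size = devices * num_nodes  # one process per device on every node
    ranks = {}
    for node_rank in range(num_nodes):
        for local_rank in range(devices):
            ranks[(node_rank, local_rank)] = node_rank * devices + local_rank
    return world_size, ranks


world_size, ranks = process_ranks(devices=8, num_nodes=32)
print(world_size)  # 256
print(ranks[(0, 0)])  # 0: first device on the first node
print(ranks[(31, 7)])  # 255: last device on the last node
print(ranks[(2, 3)])  # 19
```

The same multiplication applies to data under DDP: each process loads its own batches, so with `batch_size=8` per process one optimizer step sees 8 × 256 = 2,048 samples across the cluster.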

Examples

Self-supervised Learning

Convolutional Architectures

Reinforcement Learning

GANs

Classic ML

Continuous Integration

Lightning is rigorously tested across multiple CPUs, GPUs and TPUs and against major Python and PyTorch versions.

*Codecov coverage is above 90%, but build delays may show a lower number.


Community

The Lightning community is maintained by:

- 10+ core contributors, a mix of professional engineers, research scientists, and Ph.D. students from top AI labs.

- 800+ community contributors.

Want to help us build Lightning and reduce boilerplate for thousands of researchers? Learn how to make your first contribution here.

Lightning is also part of the PyTorch ecosystem, which requires projects to have solid testing, documentation and support.

Asking for help

If you have any questions please:

Owner

- Name: ⚡️ Lightning AI

- Login: Lightning-AI

- Kind: organization

- Location: United States of America

- Website: https://lightning.ai/

- Twitter: LightningAI

- Repositories: 38

- Profile: https://github.com/Lightning-AI

Turn ideas into AI, Lightning fast. Creators of PyTorch Lightning, Lightning AI Studio, TorchMetrics, Fabric, Lit-GPT, Lit-LLaMA

Citation (CITATION.cff)

```yaml
cff-version: 1.2.0
message: "If you want to cite the framework, feel free to use this (but only if you loved it 😊)"
title: "PyTorch Lightning"
abstract: "The lightweight PyTorch wrapper for high-performance AI research. Scale your models, not the boilerplate."
date-released: 2019-03-30
authors:
  - family-names: "Falcon"
    given-names: "William"
  - name: "The PyTorch Lightning team"
version: 1.4
doi: 10.5281/zenodo.3828935
license: "Apache-2.0"
url: "https://www.pytorchlightning.ai"
repository-code: "https://github.com/Lightning-AI/lightning"
keywords:
  - machine learning
  - deep learning
  - artificial intelligence
```

Committers

Last synced: 9 months ago

Top Committers

| Name | Email | Commits |
|---|---|---|
| William Falcon | w****7@c****u | 2,238 |
| Jirka Borovec | B****a | 1,339 |
| Adrian Wälchli | a****i@g****m | 1,218 |
| Carlos Mocholí | c****i@g****m | 1,014 |
| thomas chaton | t****s@g****i | 412 |
| dependabot[bot] | 4****] | 340 |
| Rohit Gupta | r****8@g****m | 306 |
| Kaushik B | 4****1 | 218 |
| Sean Naren | s****n@g****i | 151 |
| ananthsub | a****m@g****m | 149 |
| Akihiro Nitta | n****a@a****m | 144 |
| Ethan Harris | e****s@g****m | 113 |
| Justus Schock | 1****k | 101 |
| Danielle Pintz | 3****z | 83 |
| Nicki Skafte | s****i@g****m | 76 |
| edenlightning | 6****g | 65 |
| Sean Naren | s****n@g****m | 61 |
| Mauricio Villegas | m****e@y****m | 58 |
| Luca Antiga | l****a@g****m | 45 |
| otaj | 6****j | 44 |
| Jeff Yang | y****f@o****m | 43 |
| four4fish | 8****h | 42 |
| Adrian Wälchli | a****i@s****h | 33 |
| PL Ghost | 7****t | 31 |
| Victor Prins | v****s@o****m | 24 |
| jjenniferdai | 8****i | 24 |
| Nic Eggert | n****c@e****o | 24 |
| Sherin Thomas | s****n@l****i | 24 |
| Kushashwa Ravi Shrimali | k****i@g****m | 23 |
| Krishna Kalyan | k****3@g****m | 22 |
| and 947 more... | | |


Issues and Pull Requests

Last synced: 6 months ago

All Time

- Total issues: 1,082

- Total pull requests: 1,427

- Average time to close issues: 5 months

- Average time to close pull requests: 24 days

- Total issue authors: 832

- Total pull request authors: 237

- Average comments per issue: 3.34

- Average comments per pull request: 1.7

- Merged pull requests: 974

- Bot issues: 0

- Bot pull requests: 207

Past Year

- Issues: 348

- Pull requests: 692

- Average time to close issues: about 1 month

- Average time to close pull requests: 8 days

- Issue authors: 299

- Pull request authors: 118

- Average comments per issue: 0.62

- Average comments per pull request: 1.36

- Merged pull requests: 464

- Bot issues: 0

- Bot pull requests: 175

Top Authors

Issue Authors

- awaelchli (23)

- williamFalcon (14)

- carmocca (11)

- adosar (8)

- clumsy (8)

- heth27 (6)

- Borda (6)

- loretoparisi (5)

- svnv-svsv-jm (5)

- JohnHerry (5)

- tchaton (5)

- profPlum (5)

- YuyaWake (5)

- Peiffap (4)

- Yann-CV (4)

Pull Request Authors

- awaelchli (238)

- Borda (206)

- dependabot[bot] (200)

- tchaton (61)

- pl-ghost (56)

- lantiga (45)

- williamFalcon (27)

- fnhirwa (21)

- carmocca (18)

- mauvilsa (16)

- 01AbhiSingh (15)

- SkafteNicki (15)

- KAVYANSHTYAGI (14)

- clumsy (11)

- matsumotosan (10)


Packages

- Total packages: 6

- Total downloads:
  - pypi: 14,090,710 last month

- Total docker downloads: 27,942,303

- Total dependent packages: 834 (may contain duplicates)

- Total dependent repositories: 10,072 (may contain duplicates)

- Total versions: 468

- Total maintainers: 4

- Total advisories: 6

pypi.org: pytorch-lightning

PyTorch Lightning is the lightweight PyTorch wrapper for ML researchers. Scale your models. Write less boilerplate.

- Homepage: https://github.com/Lightning-AI/lightning

- Documentation: https://pytorch-lightning.rtfd.io/en/latest/

- License: Apache-2.0

- Latest release: 2.5.4 (published 6 months ago)

Maintainers (2)

Advisories (4)

pypi.org: lightning

The Deep Learning framework to train, deploy, and ship AI products Lightning fast.

- Homepage: https://github.com/Lightning-AI/lightning

- Documentation: https://lightning.ai/lightning-docs

- License: Apache-2.0

- Latest release: 2022.10.25 (published over 3 years ago)

Maintainers (3)

Advisories (2)

pypi.org: lightning-fabric

- Homepage: https://github.com/Lightning-AI/lightning

- Documentation: https://pytorch-lightning.rtfd.io/en/latest/

- License: Apache-2.0

- Latest release: 2.5.4 (published 6 months ago)

spack.io: py-lightning

The deep learning framework to pretrain, finetune and deploy AI models.

- Homepage: https://github.com/Lightning-AI/pytorch-lightning

- License: []

- Latest release: 2.5.2 (published 8 months ago)

Maintainers (1)

anaconda.org: lightning-cloud

Lightning AI Command Line Interface

- Homepage: https://github.com/Lightning-AI/pytorch-lightning

- License: Apache-2.0

- Latest release: 0.5.57 (published about 2 years ago)

anaconda.org: lightning

Use Lightning Apps to build everything from production-ready, multi-cloud ML systems to simple research demos.

- Homepage: https://github.com/Lightning-AI/pytorch-lightning

- License: Apache-2.0

- Latest release: 2.3.3 (published about 1 year ago)