movement_primitives

movement_primitives: Imitation Learning of Cartesian Motion with Movement Primitives - Published in JOSS (2024)

Science Score: 100.0%

This score indicates how likely this project is to be science-related based on various indicators:

✓ CITATION.cff file

Found CITATION.cff file

✓ codemeta.json file

Found codemeta.json file

✓ .zenodo.json file

Found .zenodo.json file

✓ DOI references

Found 28 DOI reference(s) in README and JOSS metadata

✓ Academic publication links

Links to: springer.com, joss.theoj.org, zenodo.org

✓ Committers with academic emails

6 of 11 committers (54.5%) from academic institutions

○ Institutional organization owner

✓ JOSS paper metadata

Published in Journal of Open Source Software


Repository

Dynamical movement primitives (DMPs), probabilistic movement primitives (ProMPs), and spatially coupled bimanual DMPs for imitation learning.

Basic Info

- Host: GitHub

- Owner: dfki-ric

- License: other

- Language: Python

- Default Branch: main

- Homepage: https://dfki-ric.github.io/movement_primitives

- Size: 15.3 MB

Statistics

- Stars: 236

- Watchers: 10

- Forks: 52

- Open Issues: 7

- Releases: 12


Metadata Files

README.md

Movement Primitives

Dynamical movement primitives (DMPs), probabilistic movement primitives (ProMPs), and spatially coupled bimanual DMPs for imitation learning.

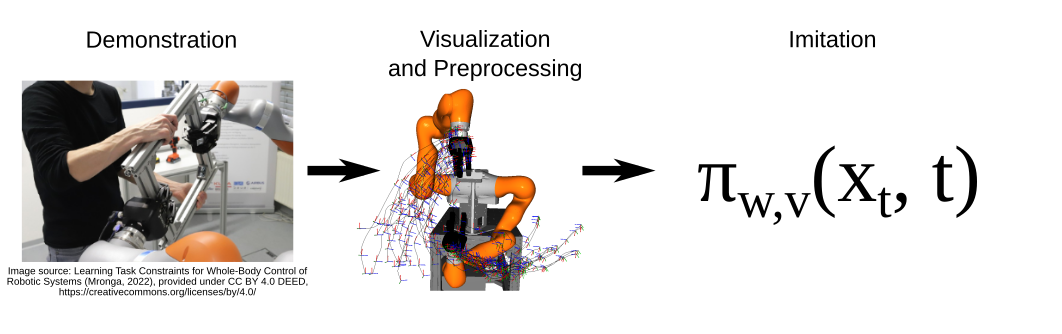

Movement primitives are a common representation of movements in robotics for imitation learning, reinforcement learning, and black-box optimization of behaviors. There are many types and variations. The Python library movement_primitives focuses on imitation learning, generalization, and adaptation of movement primitives in Cartesian space. It implements dynamical movement primitives, probabilistic movement primitives, as well as Cartesian and dual Cartesian movement primitives with coupling terms to constrain relative movements in bimanual manipulation. They are implemented in Cython to speed up online execution and batch processing in an offline setting. In addition, the library provides tools for data analysis and movement evaluation. It can be installed directly from PyPI.

Content

- Statement of Need

- Features

- API Documentation

- Install Library

- Examples

- Build API Documentation

- Test

- Contributing

- Non-public Extensions

- Related Publications

- Citation

- Funding

Statement of Need

Movement primitives are a common group of policy representations in robotics. They can represent complex movement patterns, allow temporal and spatial modification, offer stability guarantees, and are suitable for imitation learning without complicated hyperparameter tuning. These are advantages over general function approximators such as neural networks. Movement primitives are white-box models for movement generation and give control over several aspects of the movement. Some types of dynamical movement primitives allow direct control of the goal in state space, the final velocity, or the relative pose of two robotic end-effectors. Probabilistic movement primitives adequately capture distributions of movements and allow conditioning in state space and blending of multiple movements. The main disadvantage of movement primitives compared to general function approximators is their limited capacity to represent behavior that takes complex sensor data into account during execution. Nevertheless, various types of movement primitives have proven to be reliable and effective tools in robot learning. A reliable tool deserves a similarly reliable open source implementation. However, there are only a few actively maintained, documented, and easy-to-use implementations. One of these is the library movement_primitives. It combines several types of dynamical movement primitives and probabilistic movement primitives in a single library with a focus on Cartesian and bimanual movements.

Features

- Dynamical Movement Primitives (DMPs) for

  - positions (with fast Runge-Kutta integration)

  - Cartesian position and orientation (with fast Cython implementation)

  - Dual Cartesian position and orientation (with fast Cython implementation)

- Coupling terms for synchronization of position and/or orientation of dual Cartesian DMPs

- Propagation of DMP weight distribution to state space distribution

- Probabilistic Movement Primitives (ProMPs)

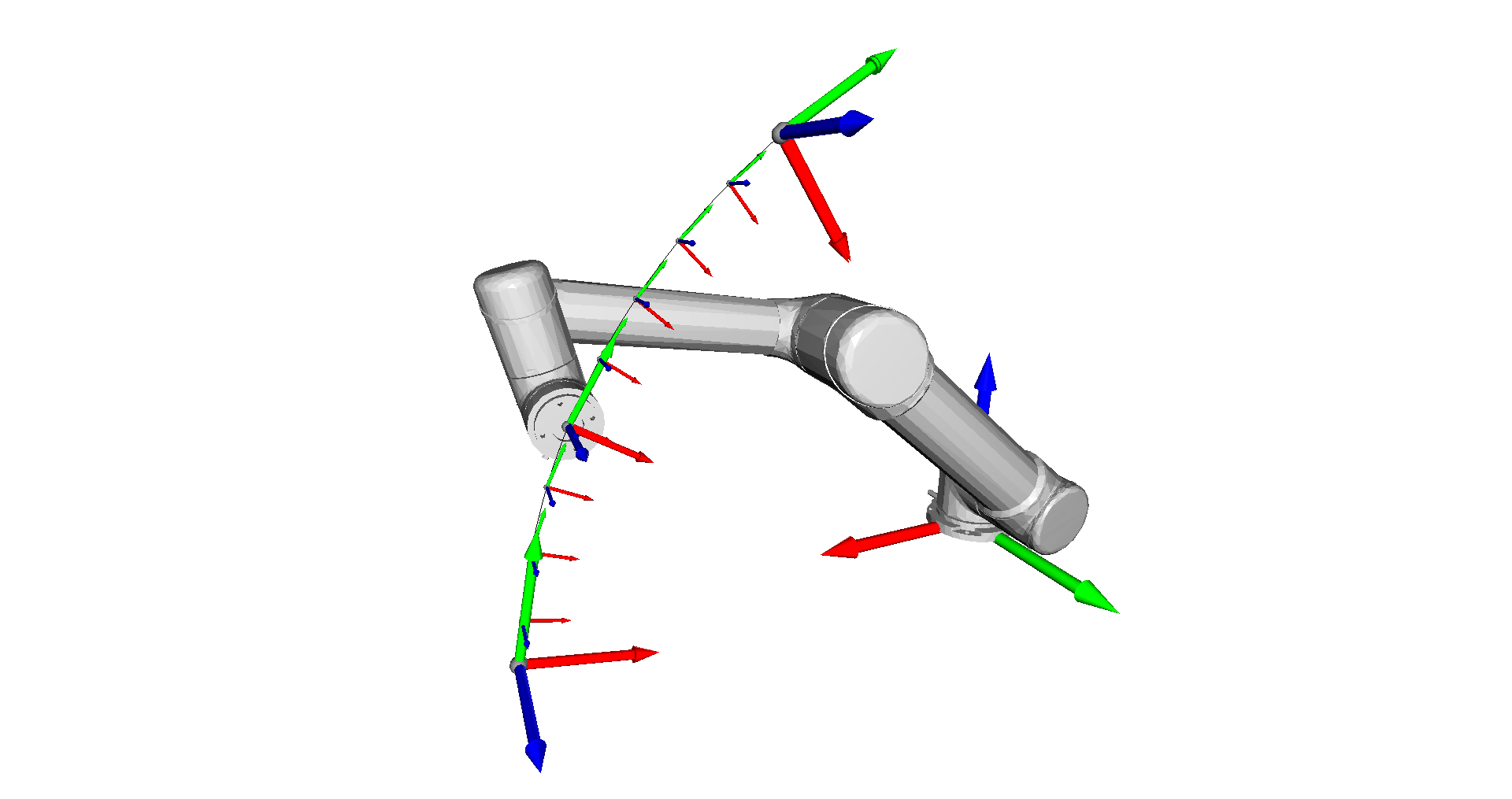

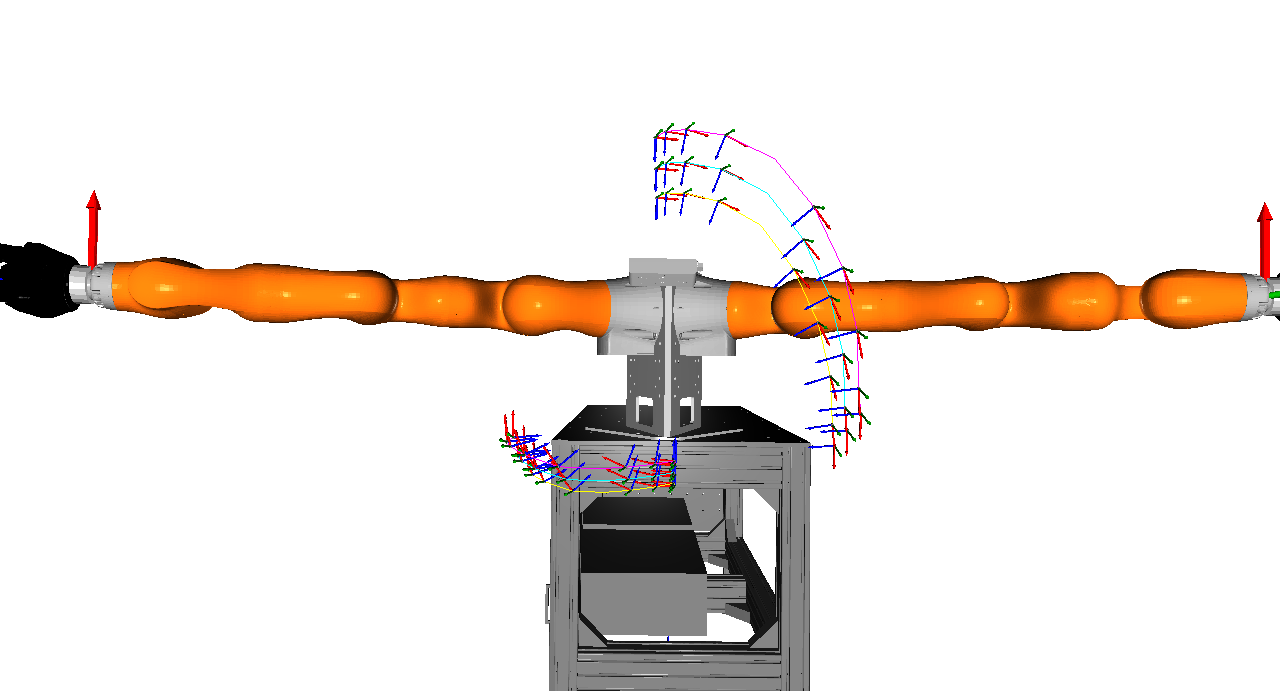

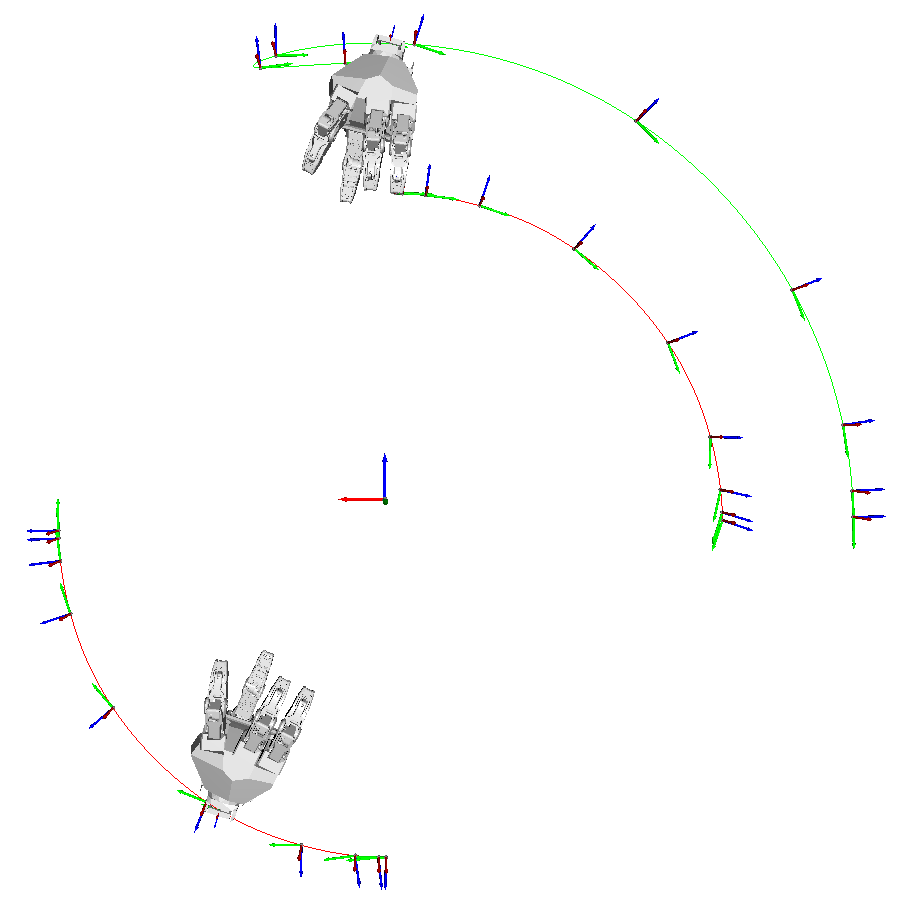

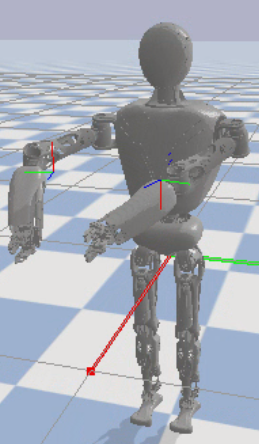

Left: Example of dual Cartesian DMP with RH5 Manus. Right: Example of joint space DMP with UR5.

API Documentation

The API documentation is available at https://dfki-ric.github.io/movement_primitives.

Install Library

This library requires Python 3.6 or later, and pip is recommended for the installation. In the following instructions, we assume that the command python refers to Python 3. If you use the system's Python version, you might have to add the flag --user to any installation command.

PyPI (Recommended)

I recommend installing the library via pip from the Python package index (PyPI):

```bash
python -m pip install movement_primitives[all]
```

If you don't want to have all dependencies installed, just omit [all].

This will install the latest release. If you want to install the latest development version, you have to install from git.

Git + Editable Mode

Editable mode means that you don't have to reinstall the library after editing the source code. Changes are directly available in the installed library because pip links the installation to your local clone instead of copying the files.

You can clone the git repository and install it in editable mode with pip:

```bash
git clone https://github.com/dfki-ric/movement_primitives.git
cd movement_primitives
python -m pip install -e .[all]
```

If you don't want to have all dependencies installed, just omit [all].

Git

Alternatively, you can install the library and its dependencies without pip from the git repository:

```bash
git clone https://github.com/dfki-ric/movement_primitives.git
cd movement_primitives
python setup.py install
```

Build Cython Extension

You can also build only the Cython extension, in place, with

```bash
python setup.py build_ext --inplace
```

Dependencies

Alternatively, you can install the dependencies from the requirements.txt file in the main folder of the git repository:

```bash
python -m pip install -r requirements.txt
```

Examples

You will find many examples in the subfolder examples/.

Here are just some highlights to showcase the library.

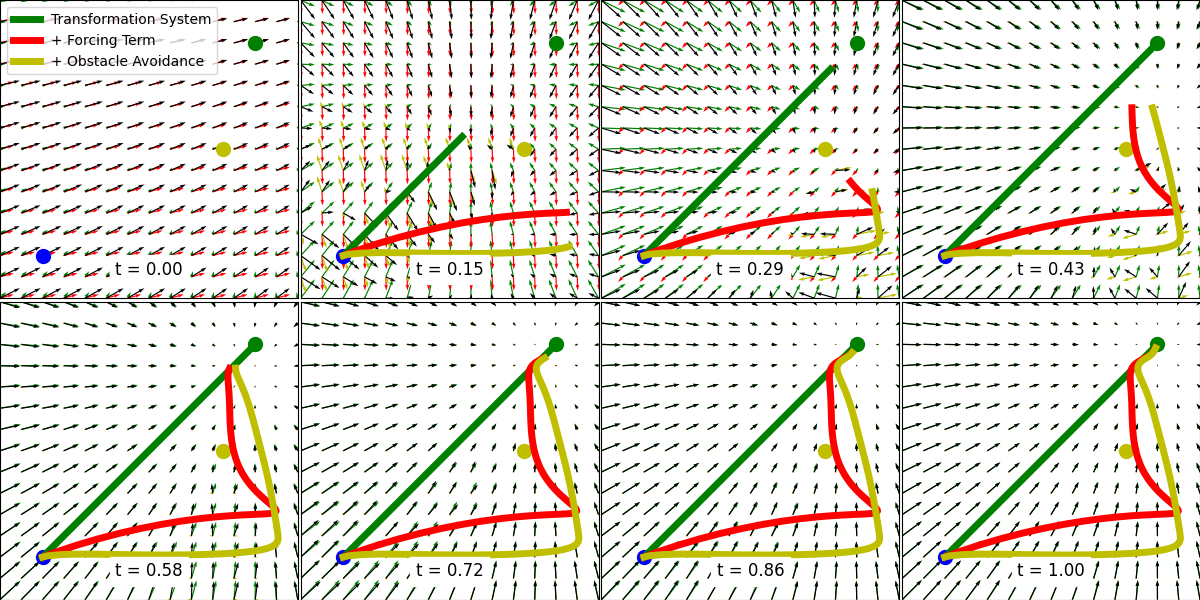

Potential Field of 2D DMP

A Dynamical Movement Primitive defines a potential field that superimposes several components: transformation system (goal-directed movement), forcing term (learned shape), and coupling terms (e.g., obstacle avoidance).
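To make the superposition of components concrete, here is a minimal NumPy sketch of a discrete DMP in the style of Ijspeert et al. [1]. A critically damped transformation system pulls the state toward the goal, and a forcing term, learned from one demonstration by least squares on phase-dependent basis functions, shapes the path. This is not the library's implementation. The gains, the basis-function placement, the use of plain least squares, the omission of the (g - y0) amplitude scaling from [1], and the names phase, features, imitate, and rollout are all assumptions made for illustration. Coupling terms are left out.

```python
import numpy as np

# Assumed gains (critically damped: beta_z = alpha_z / 4)
ALPHA_Z, BETA_Z, ALPHA_X = 25.0, 25.0 / 4.0, 25.0 / 3.0


def phase(t, tau):
    """Canonical system x(t) = exp(-alpha_x t / tau), decaying from 1 toward 0."""
    return np.exp(-ALPHA_X * np.asarray(t, dtype=float) / tau)


def features(x, n_weights):
    """Normalized Gaussian basis functions of the phase, multiplied by x.

    Centers are spaced evenly in time; returns shape (len(x), n_weights)."""
    x = np.atleast_1d(x)
    centers = phase(np.linspace(0.0, 1.0, n_weights), 1.0)
    spacing = np.diff(centers)
    widths = 1.0 / np.append(spacing, spacing[-1]) ** 2
    psi = np.exp(-widths * (x[:, None] - centers) ** 2)
    return psi / psi.sum(axis=1, keepdims=True) * x[:, None]


def imitate(T, Y, n_weights=10):
    """Fit forcing-term weights to one demonstration Y of shape (n_steps, n_dims)."""
    tau, g = T[-1] - T[0], Y[-1]
    Yd = np.gradient(Y, T, axis=0)
    Ydd = np.gradient(Yd, T, axis=0)
    # Solve tau^2 ydd = alpha_z (beta_z (g - y) - tau yd) + f for the forcing term f
    F = tau ** 2 * Ydd - ALPHA_Z * (BETA_Z * (g - Y) - tau * Yd)
    Phi = features(phase(T - T[0], tau), n_weights)
    # Least-squares fit of the forcing term, shape (n_weights, n_dims)
    return np.linalg.lstsq(Phi, F, rcond=None)[0]


def rollout(W, y0, g, tau, run_t=None, dt=0.001):
    """Integrate the transformation system with semi-implicit Euler steps."""
    run_t = tau if run_t is None else run_t
    n_steps = int(round(run_t / dt)) + 1
    y, yd = np.array(y0, dtype=float), np.zeros(len(y0))
    Y = np.empty((n_steps, len(y0)))
    Y[0] = y
    for i in range(1, n_steps):
        f = (features(phase((i - 1) * dt, tau), W.shape[0]) @ W)[0]
        ydd = (ALPHA_Z * (BETA_Z * (g - y) - tau * yd) + f) / tau ** 2
        yd = yd + dt * ydd
        y = y + dt * yd
        Y[i] = y
    return np.arange(n_steps) * dt, Y


# Synthetic demonstration: minimum-jerk motion in x, a bump in y
T = np.linspace(0.0, 1.0, 101)
s = 10 * T ** 3 - 15 * T ** 4 + 6 * T ** 5
Y = np.column_stack((s, 0.5 * np.sin(np.pi * T) ** 2))
W = imitate(T, Y, n_weights=20)
T_dmp, Y_dmp = rollout(W, Y[0], Y[-1], tau=1.0)
# Same weights, new goal: the attractor pulls the motion toward it
_, Y_new = rollout(W, Y[0], np.array([1.5, 0.3]), tau=1.0, run_t=2.0)
```

Because the forcing term fades with the phase x, the goal attractor dominates at the end of the motion. This is why the rollout with a new goal still converges to that goal.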

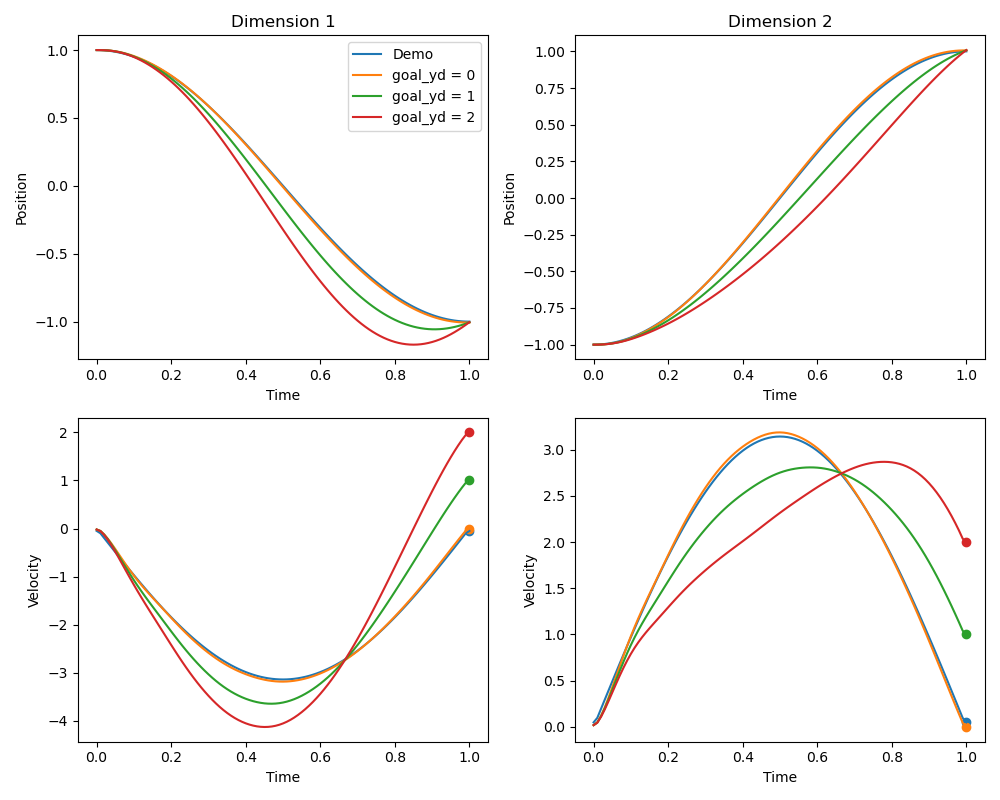

DMP with Final Velocity

Not all DMP formulations allow a final velocity greater than zero. Here, we analyze the effect of changing the final velocity in a variant of the DMP formulation that allows setting it.

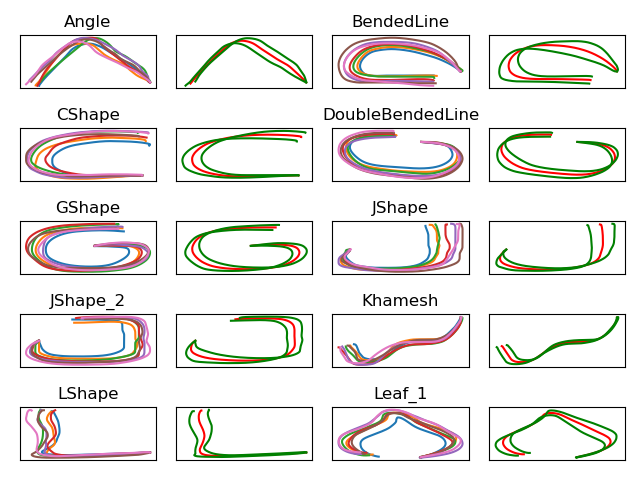

ProMPs

The LASA Handwriting dataset learned with ProMPs. The dataset consists of 2D handwriting motions. The first and third columns of the plot show demonstrations, and the second and fourth columns show the imitated ProMPs with their 1-sigma intervals.
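A ProMP represents a trajectory as y(t) = phi(t)^T w and places a Gaussian distribution over the weights w [7]. The following NumPy sketch works in one dimension on synthetic data. It fits one weight vector per demonstration, then fits a Gaussian over these vectors, and derives the mean trajectory and a 1-sigma band from it. The basis-function width, the regularization, and all function names are assumptions. Observation noise is also ignored, so this should not be read as the library's implementation.

```python
import numpy as np


def rbf_basis(t, n_weights=10):
    """Normalized Gaussian basis functions on normalized time t in [0, 1].

    Returns Phi with shape (len(t), n_weights)."""
    centers = np.linspace(0.0, 1.0, n_weights)
    width = 1.0 / n_weights
    psi = np.exp(-0.5 * (t[:, None] - centers) ** 2 / width ** 2)
    return psi / psi.sum(axis=1, keepdims=True)


def fit_promp(t, demos, n_weights=10, reg=1e-8):
    """Fit a one-dimensional ProMP to demos of shape (n_demos, n_steps).

    Each demonstration gets its own weight vector (ridge regression); the
    ProMP is the Gaussian distribution over these weight vectors."""
    Phi = rbf_basis(t, n_weights)
    A = Phi.T @ Phi + reg * np.eye(n_weights)
    weights = np.linalg.solve(A, Phi.T @ demos.T).T  # (n_demos, n_weights)
    mean_w = weights.mean(axis=0)
    cov_w = np.cov(weights, rowvar=False) + reg * np.eye(n_weights)
    return mean_w, cov_w


def trajectory_distribution(t, mean_w, cov_w):
    """Mean and standard deviation of y(t) = Phi(t) w with w ~ N(mean_w, cov_w)."""
    Phi = rbf_basis(t, len(mean_w))
    mean = Phi @ mean_w
    var = np.einsum("ij,jk,ik->i", Phi, cov_w, Phi)  # diag(Phi cov_w Phi^T)
    return mean, np.sqrt(var)


# Synthetic demonstrations: sine bumps with random amplitudes
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 101)
demos = np.array([a * np.sin(np.pi * t) for a in rng.uniform(0.8, 1.2, size=20)])
mean_w, cov_w = fit_promp(t, demos)
mean, std = trajectory_distribution(t, mean_w, cov_w)
lower, upper = mean - std, mean + std  # 1-sigma interval
```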

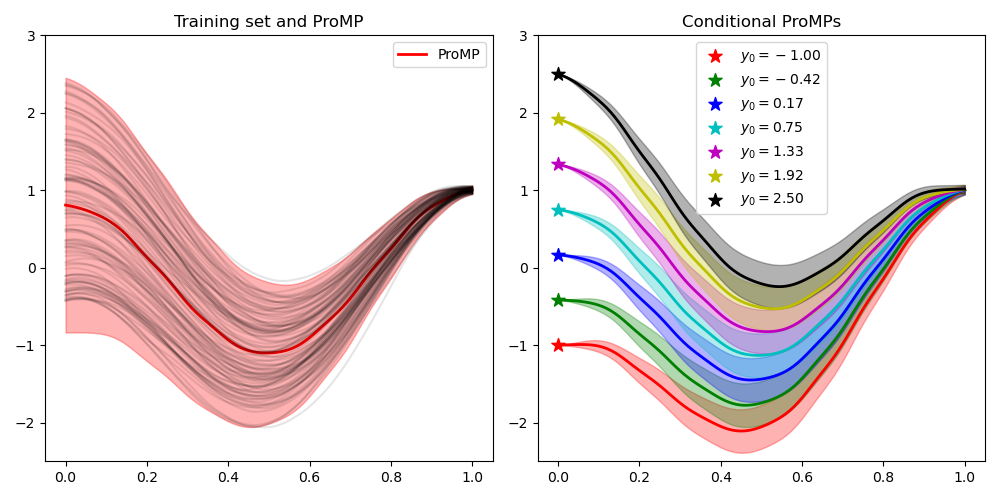

Conditional ProMPs

Probabilistic Movement Primitives (ProMPs) define distributions over trajectories that can be conditioned on viapoints. In this example, we plot the resulting posterior distribution after conditioning on varying start positions.
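Conditioning on a via-point amounts to Gaussian conditioning of the weight distribution on a single linear observation y(t*) = phi(t*)^T w [7]. The sketch below assumes a zero-mean, unit-covariance prior and a small observation noise variance obs_var. In practice, the prior would be learned from demonstrations. The function names and the basis functions are hypothetical, illustrative choices.

```python
import numpy as np


def rbf_basis(t, n_weights=10):
    """Normalized Gaussian basis functions on normalized time t in [0, 1]."""
    centers = np.linspace(0.0, 1.0, n_weights)
    width = 1.0 / n_weights
    psi = np.exp(-0.5 * (np.atleast_1d(t)[:, None] - centers) ** 2 / width ** 2)
    return psi / psi.sum(axis=1, keepdims=True)


def condition_on_viapoint(mean_w, cov_w, phi, y_star, obs_var=1e-6):
    """Condition w ~ N(mean_w, cov_w) on the observation y(t*) = phi @ w = y_star.

    phi holds the basis-function values at the via-point time t*;
    obs_var is an assumed observation noise variance."""
    s = phi @ cov_w @ phi + obs_var  # predicted variance of y(t*)
    gain = cov_w @ phi / s  # shape (n_weights,)
    new_mean = mean_w + gain * (y_star - phi @ mean_w)
    new_cov = cov_w - np.outer(gain, phi @ cov_w)
    return new_mean, new_cov


# Hypothetical prior: zero-mean weights with unit covariance
n_weights = 10
t = np.linspace(0.0, 1.0, 101)
Phi = rbf_basis(t, n_weights)
mean_w, cov_w = np.zeros(n_weights), np.eye(n_weights)

# Condition on several start positions y(0)
posteriors = [condition_on_viapoint(mean_w, cov_w, Phi[0], y0) for y0 in (-0.5, 0.0, 0.5)]
```

Each posterior passes (almost) exactly through its start position. Far from the via-point, the prior variance is largely retained.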

Cartesian DMPs

A trajectory is created manually, imitated with a Cartesian DMP, converted to a joint trajectory by inverse kinematics, and executed with a UR5.
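Cartesian DMPs represent orientations as unit quaternions. In the formulation of Ude et al. [4], the goal term g - y of the transformation system is replaced by 2 log(g * conj(q)), the rotation vector from the current orientation q to the goal orientation g. The following minimal NumPy sketch computes that error term. It assumes the (w, x, y, z) quaternion convention and uses hypothetical helper names. The sign flip that selects the shorter rotation is an added assumption that is not taken from the source.

```python
import numpy as np


def quat_mul(p, q):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    pw, pv, qw, qv = p[0], p[1:], q[0], q[1:]
    return np.concatenate(([pw * qw - pv @ qv], pw * qv + qw * pv + np.cross(pv, qv)))


def quat_conj(q):
    """Conjugate, which is the inverse for unit quaternions."""
    return np.concatenate(([q[0]], -q[1:]))


def quat_log(q):
    """Logarithm of a unit quaternion as a 3D vector (half the rotation vector)."""
    v_norm = np.linalg.norm(q[1:])
    if v_norm < 1e-12:
        return np.zeros(3)
    return np.arccos(np.clip(q[0], -1.0, 1.0)) * q[1:] / v_norm


def orientation_error(q_goal, q):
    """2 log(q_goal * conj(q)): rotation vector that takes q to q_goal.

    q and -q describe the same orientation; flipping the sign when the
    scalar part is negative selects the shorter rotation (an assumption)."""
    d = quat_mul(q_goal, quat_conj(q))
    if d[0] < 0.0:
        d = -d
    return 2.0 * quat_log(d)


identity = np.array([1.0, 0.0, 0.0, 0.0])
q_goal = np.array([np.cos(np.pi / 4), 0.0, 0.0, np.sin(np.pi / 4)])  # 90 deg about z
err = orientation_error(q_goal, identity)  # rotation vector of 90 deg about z
```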

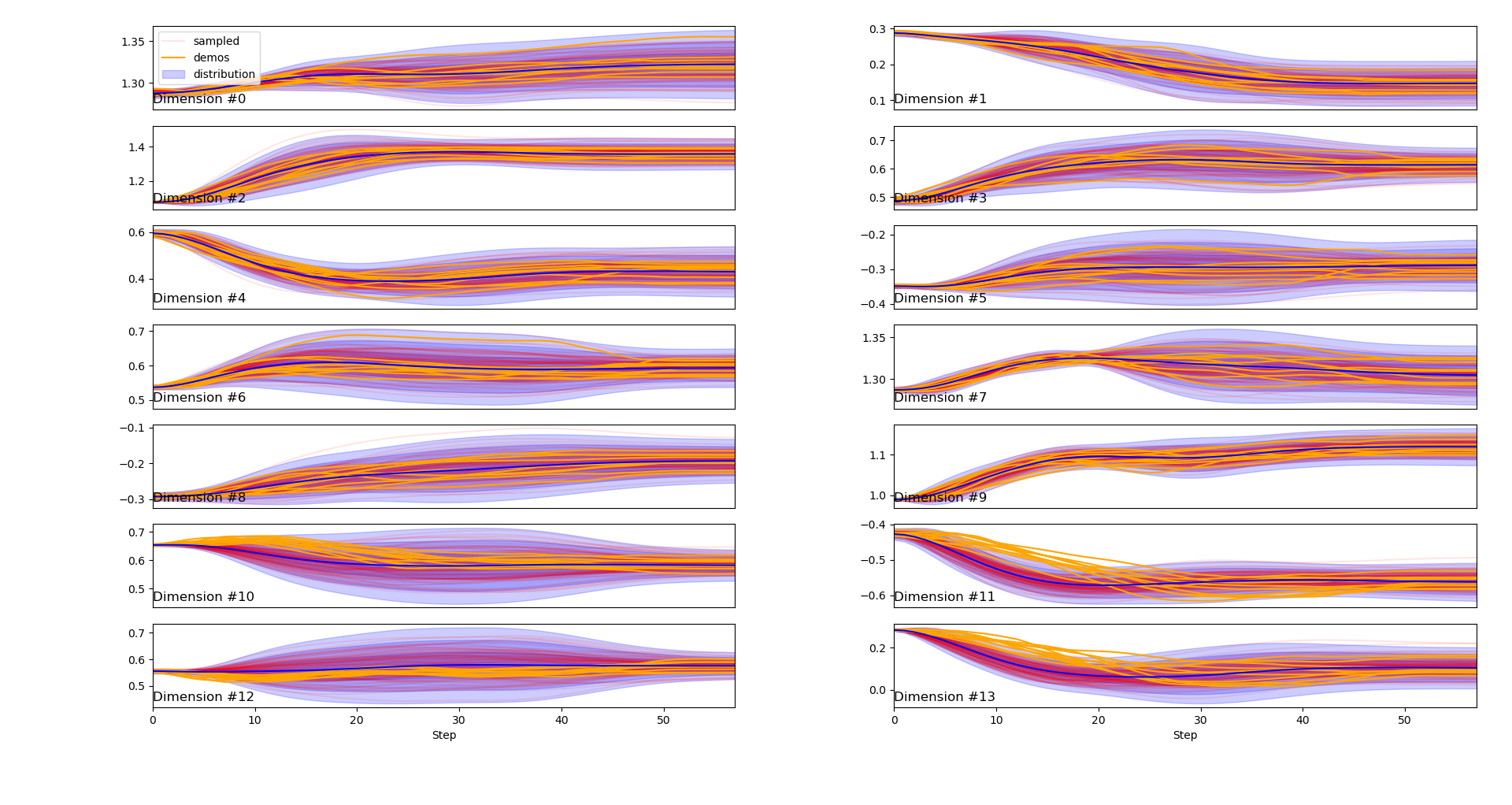

Contextual ProMPs

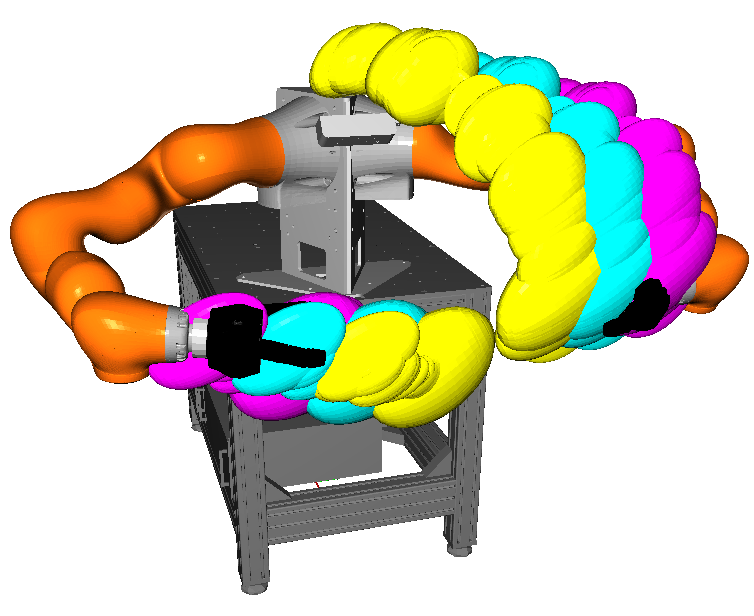

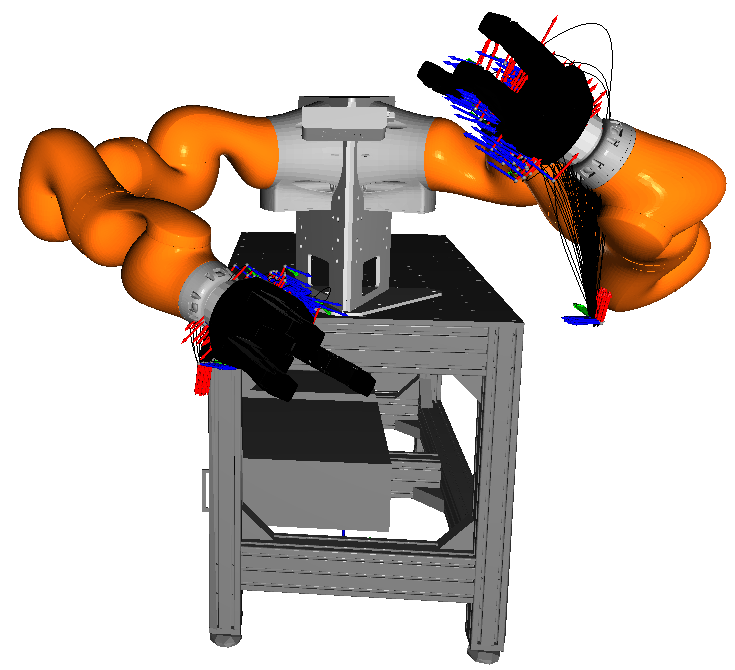

We use a dataset from Mronga and Kirchner (2021), in which a dual-arm robot rotates panels of varying widths. 10 demonstrations were recorded for 3 different panel widths through kinesthetic teaching. The panel width is the context over which we generalize with contextual ProMPs. We learn a joint distribution of contexts and ProMP weights, and then condition this distribution on a given context to obtain a ProMP adapted to it. Each color in the above visualizations corresponds to a ProMP for a different context.
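Conditioning on a context works like conditioning on a via-point, except that the Gaussian is defined jointly over the context s and the weights w. The NumPy sketch below fits such a joint Gaussian and computes p(w | s). It uses synthetic stand-in data, not the panel rotation dataset, in which the weights depend linearly on a one-dimensional context. All names and numbers are assumptions for illustration.

```python
import numpy as np


def fit_joint_gaussian(contexts, weights):
    """Mean and covariance of stacked [context, weights] vectors (one row per demo)."""
    X = np.hstack((contexts, weights))
    return X.mean(axis=0), np.cov(X, rowvar=False)


def condition_on_context(mean, cov, context, n_context_dims, reg=1e-9):
    """Parameters of p(w | s = context) for a joint Gaussian over (s, w)."""
    k = n_context_dims
    mu_s, mu_w = mean[:k], mean[k:]
    S_ss, S_ws, S_ww = cov[:k, :k], cov[k:, :k], cov[k:, k:]
    gain = S_ws @ np.linalg.inv(S_ss + reg * np.eye(k))
    return mu_w + gain @ (context - mu_s), S_ww - gain @ S_ws.T


# Synthetic stand-in data: 10 demos for each of 3 context values,
# with weights that depend linearly on the context (hypothetical slope)
rng = np.random.default_rng(0)
contexts = np.repeat([[0.3], [0.5], [0.7]], 10, axis=0)
slope = np.array([1.0, -2.0, 0.5])
weights = contexts * slope + 0.01 * rng.standard_normal((30, 3))

mean, cov = fit_joint_gaussian(contexts, weights)
mu_w, cov_w = condition_on_context(mean, cov, np.array([0.6]), n_context_dims=1)
```

The conditional mean interpolates between the recorded contexts, and the conditional covariance keeps only the variation that the context does not explain.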

Dependencies that are not publicly available:

- Dataset: panel rotation dataset of Mronga and Kirchner (2021)

- MoCap library

- URDF of dual arm Kuka system from DFKI RIC's HRC lab:

```bash
git clone git@git.hb.dfki.de:models-robots/kuka_lbr.git
```

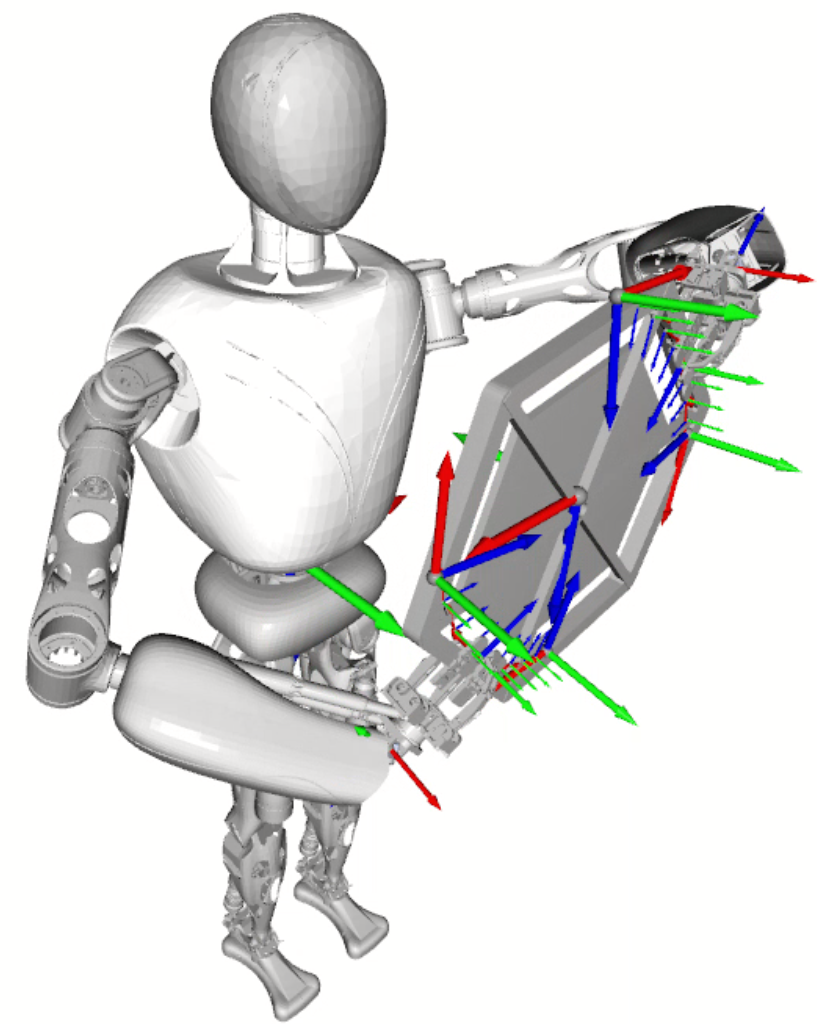

Dual Cartesian DMP

This library implements specific dual Cartesian DMPs to control dual-arm robotic systems like humanoid robots.

Dependencies that are not publicly available:

- MoCap library

- URDF of DFKI RIC's RH5 robot:

```bash
git clone git@git.hb.dfki.de:models-robots/rh5_models/pybullet-only-arms-urdf.git --recursive
```

- URDF of solar panel:

```bash
git clone git@git.hb.dfki.de:models-objects/solar_panels.git
```

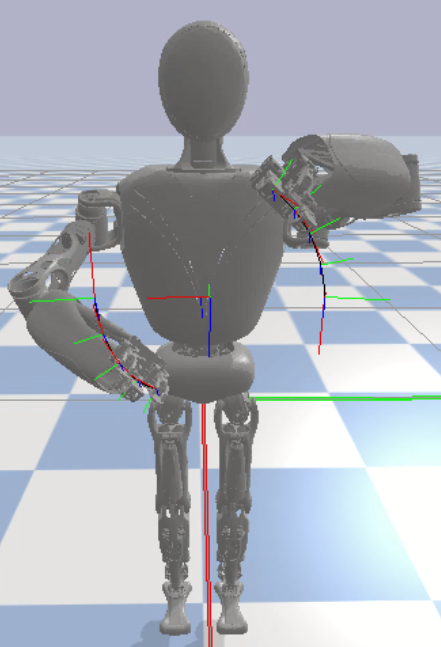

Coupled Dual Cartesian DMP

We can introduce a coupling term in a dual Cartesian DMP to constrain the relative position, orientation, or pose of two end-effectors of a dual-arm robot.
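One simple way to picture such a coupling term is a virtual spring on the relative position of the two end-effectors. The sketch below is hypothetical and does not reproduce the coupling terms of the library or of Gams et al. [5]. It returns equal and opposite accelerations that drive p_left - p_right toward a desired offset. Instead of a full dual Cartesian DMP, it integrates two damped point masses to show the effect.

```python
import numpy as np


def relative_position_coupling(p_left, p_right, desired_offset, k=100.0):
    """Hypothetical spring-like coupling term (not the library's formulation).

    Returns accelerations for both end-effectors that drive the relative
    position p_left - p_right toward desired_offset. They are equal and
    opposite, so the midpoint of the two end-effectors is not affected."""
    error = desired_offset - (p_left - p_right)
    return 0.5 * k * error, -0.5 * k * error


p_left, p_right = np.array([0.0, 0.3, 0.0]), np.array([0.0, -0.1, 0.0])
v_left, v_right = np.zeros(3), np.zeros(3)
desired_offset = np.array([0.0, 0.5, 0.0])  # assumed target offset
dt, damping = 0.001, 20.0  # damping chosen for a critically damped relative motion
for _ in range(3000):
    a_left, a_right = relative_position_coupling(p_left, p_right, desired_offset)
    v_left += dt * (a_left - damping * v_left)
    v_right += dt * (a_right - damping * v_right)
    p_left += dt * v_left
    p_right += dt * v_right
```

In a DMP, terms like these would be added to the accelerations of the two transformation systems, so the imitated motion is preserved while the relative position is constrained.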

Dependencies that are not publicly available:

- URDF of DFKI RIC's gripper:

```bash
git clone git@git.hb.dfki.de:motto/abstract-urdf-gripper.git --recursive
```

- URDF of DFKI RIC's RH5 robot:

```bash
git clone git@git.hb.dfki.de:models-robots/rh5_models/pybullet-only-arms-urdf.git --recursive
```

Propagation of DMP Distribution to State Space

If we have a distribution over DMP parameters, we can propagate it to state space through an unscented transform. On the left, we see the original demonstration of a dual-arm movement in state space (two 3D positions and two quaternions) and the distribution of several DMP weight vectors projected to the state space. On the right, we see several dual-arm trajectories sampled from the distribution in state space.
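The unscented transform approximates how a Gaussian changes under a nonlinear function by propagating a small set of sigma points through it. The following minimal NumPy sketch uses the original sigma-point weights of Julier and Uhlmann, which have a single parameter kappa. These weights may differ from the parameterization the library uses. In the setting described here, f would map a DMP weight vector to a state-space trajectory. The polar-to-Cartesian map below is only a hypothetical stand-in.

```python
import numpy as np


def unscented_transform(mean, cov, f, kappa=1.0):
    """Propagate N(mean, cov) through a nonlinear function f with sigma points."""
    n = len(mean)
    L = np.linalg.cholesky((n + kappa) * cov)  # (n + kappa) cov = L L^T
    sigma_points = np.vstack((mean, mean + L.T, mean - L.T))  # (2n + 1, n)
    weights = np.full(2 * n + 1, 1.0 / (2.0 * (n + kappa)))
    weights[0] = kappa / (n + kappa)
    Y = np.array([f(x) for x in sigma_points])  # propagate every sigma point
    y_mean = weights @ Y
    diff = Y - y_mean
    y_cov = (weights[:, None] * diff).T @ diff
    return y_mean, y_cov


def polar_to_cartesian(x):
    """Hypothetical nonlinear map standing in for 'DMP weights -> trajectory'."""
    return np.array([x[0] * np.cos(x[1]), x[0] * np.sin(x[1])])


y_mean, y_cov = unscented_transform(
    np.array([1.0, 0.5]), np.diag([0.01, 0.04]), polar_to_cartesian)
```

For a linear function f(x) = A x + b, the result is exact (mean A mu + b, covariance A Sigma A^T), which provides a convenient sanity check.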

Dependencies that are not publicly available:

- Dataset: panel rotation dataset of Mronga and Kirchner (2021)

- MoCap library

- URDF of dual arm Kuka system from DFKI RIC's HRC lab:

```bash
git clone git@git.hb.dfki.de:models-robots/kuka_lbr.git
```

Build API Documentation

You can build the API documentation with Sphinx. Install all required dependencies with

```bash
python -m pip install movement_primitives[doc]
```

Then build the documentation from the folder doc/ with

```bash
make html
```

It will be located at doc/build/html/index.html.

Test

Running the tests requires some additional Python libraries:

```bash
python -m pip install -e .[test]
```

The tests are located in the folder test/ and can be executed with:

```bash
python -m pytest
```

This command searches for test files and executes the functions whose names start with test_. You will find a test coverage report at htmlcov/index.html.

Contributing

You can report bugs in the issue tracker. If you have questions about the software, please use the discussions section. To add new features or documentation, or to fix bugs, you can open a pull request on GitHub. Directly pushing to the main branch is not allowed.

The recommended workflow to add a new feature, add documentation, or fix a bug is the following:

- Push your changes to a branch (e.g., feature/x, doc/y, or fix/z) of your fork of the repository.

- Open a pull request to the main branch of the main repository.

This is a checklist for new features:

- Are there unit tests?

- Does it have docstrings?

- Is it included in the API documentation?

- Run flake8 and pylint.

- Should it be part of the README?

- Should it be included in any example script?

Non-public Extensions

Scripts from the subfolder examples/external_dependencies/ require access to git repositories (URDF files or optional dependencies) and datasets that are not publicly available. They are available on request (email alexander.fabisch@dfki.de).

Note that the library itself does not have any non-public dependencies! These resources are only required to run all examples.

MoCap Library

```bash
# untested: pip install git+https://git.hb.dfki.de/dfki-interaction/mocap.git
git clone git@git.hb.dfki.de:dfki-interaction/mocap.git
cd mocap
python -m pip install -e .
cd ..
```

Get URDFs

```bash
# RH5
git clone git@git.hb.dfki.de:models-robots/rh5_models/pybullet-only-arms-urdf.git --recursive
# RH5v2
git clone git@git.hb.dfki.de:models-robots/rh5v2_models/pybullet-urdf.git --recursive
# Kuka
git clone git@git.hb.dfki.de:models-robots/kuka_lbr.git
# Solar panel
git clone git@git.hb.dfki.de:models-objects/solar_panels.git
# RH5 Gripper
git clone git@git.hb.dfki.de:motto/abstract-urdf-gripper.git --recursive
```

Data

Most scripts assume that your data is located in the folder data/. You should create a symlink there that points to your actual data folder.

Related Publications

This library implements several types of dynamical movement primitives and probabilistic movement primitives. These are described in detail in the following papers.

[1] Ijspeert, A. J., Nakanishi, J., Hoffmann, H., Pastor, P., Schaal, S. (2013). Dynamical Movement Primitives: Learning Attractor Models for Motor Behaviors, Neural Computation 25 (2), 328-373. DOI: 10.1162/NECO_a_00393, https://homes.cs.washington.edu/~todorov/courses/amath579/reading/DynamicPrimitives.pdf

[2] Pastor, P., Hoffmann, H., Asfour, T., Schaal, S. (2009). Learning and Generalization of Motor Skills by Learning from Demonstration. In 2009 IEEE International Conference on Robotics and Automation, (pp. 763-768). DOI: 10.1109/ROBOT.2009.5152385, https://h2t.iar.kit.edu/pdf/Pastor2009.pdf

[3] Muelling, K., Kober, J., Kroemer, O., Peters, J. (2013). Learning to Select and Generalize Striking Movements in Robot Table Tennis. International Journal of Robotics Research 32 (3), 263-279. https://www.ias.informatik.tu-darmstadt.de/uploads/Publications/MuellingIJRR2013.pdf

[4] Ude, A., Nemec, B., Petric, T., Morimoto, J. (2014). Orientation in Cartesian space dynamic movement primitives. In IEEE International Conference on Robotics and Automation (ICRA) (pp. 2997-3004). DOI: 10.1109/ICRA.2014.6907291, https://acat-project.eu/modules/BibtexModule/uploads/PDF/udenemecpetric2014.pdf

[5] Gams, A., Nemec, B., Zlajpah, L., Wächter, M., Asfour, T., Ude, A. (2013). Modulation of Motor Primitives using Force Feedback: Interaction with the Environment and Bimanual Tasks. In 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems (pp. 5629-5635). DOI: 10.1109/IROS.2013.6697172, https://h2t.anthropomatik.kit.edu/pdf/Gams2013.pdf

[6] Vidakovic, J., Jerbic, B., Sekoranja, B., Svaco, M., Suligoj, F. (2019). Task Dependent Trajectory Learning from Multiple Demonstrations Using Movement Primitives. In International Conference on Robotics in Alpe-Adria Danube Region (RAAD) (pp. 275-282). DOI: 10.1007/978-3-030-19648-6_32, https://link.springer.com/chapter/10.1007/978-3-030-19648-6_32

[7] Paraschos, A., Daniel, C., Peters, J., Neumann, G. (2013). Probabilistic movement primitives, In C.J. Burges and L. Bottou and M. Welling and Z. Ghahramani and K.Q. Weinberger (Eds.), Advances in Neural Information Processing Systems, 26, https://papers.nips.cc/paper/2013/file/e53a0a2978c28872a4505bdb51db06dc-Paper.pdf

[8] Maeda, G. J., Neumann, G., Ewerton, M., Lioutikov, R., Kroemer, O., Peters, J. (2017). Probabilistic movement primitives for coordination of multiple human–robot collaborative tasks. Autonomous Robots, 41, 593-612. DOI: 10.1007/s10514-016-9556-2, https://link.springer.com/article/10.1007/s10514-016-9556-2

[9] Paraschos, A., Daniel, C., Peters, J., Neumann, G. (2018). Using probabilistic movement primitives in robotics. Autonomous Robots, 42, 529-551. DOI: 10.1007/s10514-017-9648-7, https://www.ias.informatik.tu-darmstadt.de/uploads/Team/AlexandrosParaschos/promps_auro.pdf

[10] Lazaric, A., Ghavamzadeh, M. (2010). Bayesian Multi-Task Reinforcement Learning. In Proceedings of the 27th International Conference on International Conference on Machine Learning (ICML'10) (pp. 599-606). https://hal.inria.fr/inria-00475214/document

Citation

If you use movement_primitives for a scientific publication, I would appreciate citation of the following paper:

Fabisch, A. (2024). movement_primitives: Imitation Learning of Cartesian Motion with Movement Primitives. Journal of Open Source Software, 9(97), 6695, https://doi.org/10.21105/joss.06695

Bibtex entry:

```bibtex
@article{Fabisch2024,
  doi = {10.21105/joss.06695},
  url = {https://doi.org/10.21105/joss.06695},
  year = {2024},
  publisher = {The Open Journal},
  volume = {9},
  number = {97},
  pages = {6695},
  author = {Alexander Fabisch},
  title = {movement_primitives: Imitation Learning of Cartesian Motion with Movement Primitives},
  journal = {Journal of Open Source Software}
}
```

Funding

This library was initially developed at the Robotics Innovation Center of the German Research Center for Artificial Intelligence (DFKI GmbH) in Bremen. During this phase, the work was supported through a grant from the German Federal Ministry of Economic Affairs and Energy (BMWi, FKZ 50 RA 1701).


Owner

- Name: DFKI GmbH, Robotics Innovation Center

- Login: dfki-ric

- Kind: organization

- Location: Bremen

- Website: https://robotik.dfki-bremen.de/en/

- Twitter: DFKI

- Repositories: 44

- Profile: https://github.com/dfki-ric

Research group at the German Research Center for Artificial Intelligence GmbH.

JOSS Publication

movement_primitives: Imitation Learning of Cartesian Motion with Movement Primitives


Tags

robotics, imitation learning, dynamical movement primitive, probabilistic movement primitive

Citation (citation.cff)

cff-version: 1.2.0
message: "If you use this software, please cite it as below."
authors:
  - family-names: Fabisch
    given-names: Alexander
title: "movement_primitives"
url: https://github.com/dfki-ric/movement_primitives
doi: 10.5281/zenodo.6491361
preferred-citation:
  type: article
  authors:
    - family-names: Fabisch
      given-names: Alexander
  title: "movement_primitives: Imitation Learning of Cartesian Motion with Movement Primitives"
  doi: 10.21105/joss.06695
  journal: Journal of Open Source Software
  start: 6695
  issue: 97
  volume: 9
  month: 5
  year: 2024

GitHub Events

Total

- Create event: 1

- Issues event: 5

- Release event: 2

- Watch event: 49

- Issue comment event: 8

- Push event: 8

- Pull request event: 6

- Fork event: 9

Last Year

- Create event: 1

- Issues event: 5

- Release event: 2

- Watch event: 49

- Issue comment event: 8

- Push event: 8

- Pull request event: 6

- Fork event: 9

Committers

Last synced: 7 months ago

Top Committers

| Name | Email | Commits |
|---|---|---|
| Alexander Fabisch | a****h@g****m | 694 |
| jklemm | j****m@u****e | 17 |
| Christopher E. Mower | c****r@k****k | 15 |
| Andrea Pierré | a****e@b****u | 4 |
| Shimon Wang | 3****2@q****m | 3 |
| Marc Otto | m****o@d****e | 3 |
| wood | J****2@1****m | 2 |
| HiroIshida | h****a@j****p | 1 |
| Enrico Eberhard | 3****d | 1 |
| Baptiste Busch | b****h@g****m | 1 |
| mulo01 | m****1@d****e | 1 |


Issues and Pull Requests

Last synced: 6 months ago

All Time

- Total issues: 33

- Total pull requests: 30

- Average time to close issues: 3 months

- Average time to close pull requests: 3 days

- Total issue authors: 14

- Total pull request authors: 10

- Average comments per issue: 1.82

- Average comments per pull request: 1.23

- Merged pull requests: 27

- Bot issues: 0

- Bot pull requests: 0

Past Year

- Issues: 7

- Pull requests: 8

- Average time to close issues: 18 days

- Average time to close pull requests: about 11 hours

- Issue authors: 4

- Pull request authors: 4

- Average comments per issue: 0.86

- Average comments per pull request: 1.25

- Merged pull requests: 7

- Bot issues: 0

- Bot pull requests: 0

Top Authors

Issue Authors

- AlexanderFabisch (12)

- gautam-sharma1 (5)

- jqloh (3)

- ilska (3)

- cmower (3)

- buschbapti (1)

- juanheliosg (1)

- HongminWu (1)

- mengqiDK (1)

- CodingCatMountain (1)

- vhwanger (1)

- famora2 (1)

- perfectism13 (1)

Pull Request Authors

- AlexanderFabisch (19)

- cmower (4)

- ShimonWang (4)

- HiroIshida (2)

- kir0ul (1)

- buschbapti (1)

- eeberhard (1)

- CodingCatMountain (1)

- maotto (1)

- haider8645 (1)


Packages

- Total packages: 1

- Total downloads: pypi 157 last month

- Total dependent packages: 0

- Total dependent repositories: 1

- Total versions: 10

- Total maintainers: 2

pypi.org: movement-primitives

Movement primitives

- Homepage: https://github.com/dfki-ric/movement_primitives

- Documentation: https://movement-primitives.readthedocs.io/

- License: BSD-3-clause

- Latest release: 0.9.1 (published 9 months ago)


Dependencies

- PyYAML *

- cython *

- gmr *

- matplotlib *

- numba *

- numpy *

- open3d *

- pybullet *

- pytransform3d *

- scipy *

- tqdm *

- actions/checkout v2 composite

- actions/setup-python v2 composite

- codecov/codecov-action v1.3.2 composite

- continuumio/miniconda3 latest build